The ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) benchmark, introduced by François Chollet in 2019 as a way to track progress towards the much-hyped and highly coveted goal of artificial general intelligence, was catapulted into the spotlight in December last year. It was announced then that o3 in high-compute (low-efficiency) mode had surpassed the 85% accuracy baseline set by humans who solved the benchmark's evaluation set. In low-compute (high-efficiency) mode, o3 scored nearly 76%, which is still quite impressive.

In the same blog post detailing o3's feat, Chollet noted that the benchmark was approaching saturation: besides o3's high scores, other highly efficient solutions, including some that brute-forced their way to a high score, were already achieving 81% accuracy on the fully private evaluation set. Days after the o3 breakthrough, the ARC Prize Foundation was officially established with the mission of scaling what had been a joint effort by its three co-founders into a fully fledged non-profit organization.

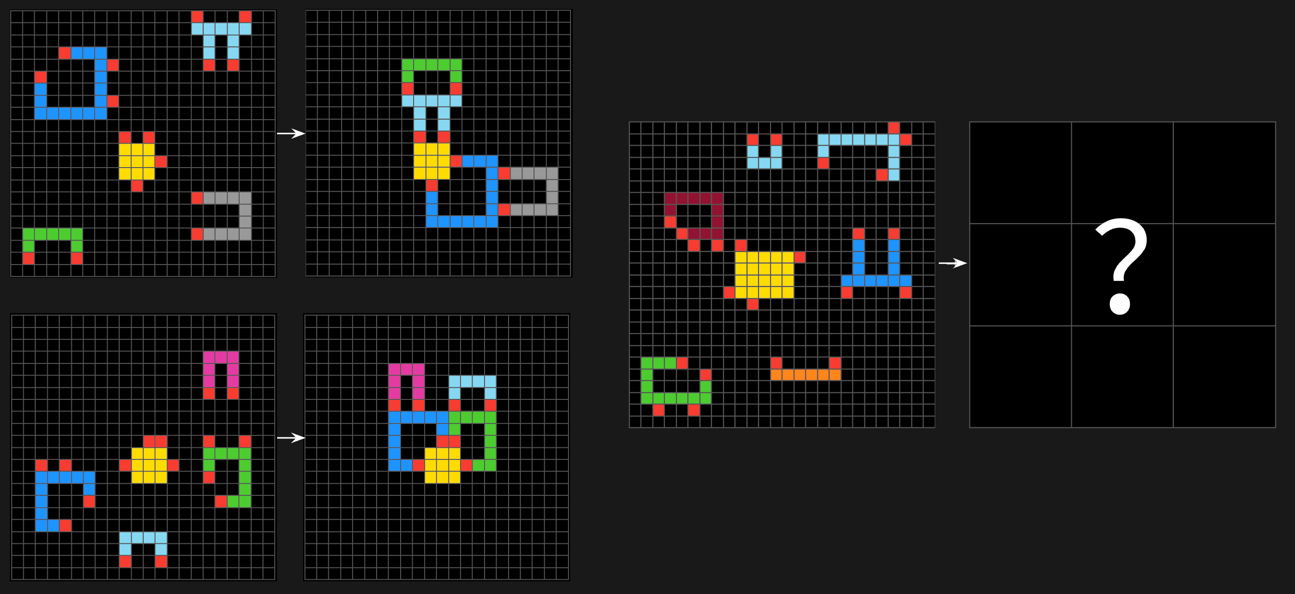

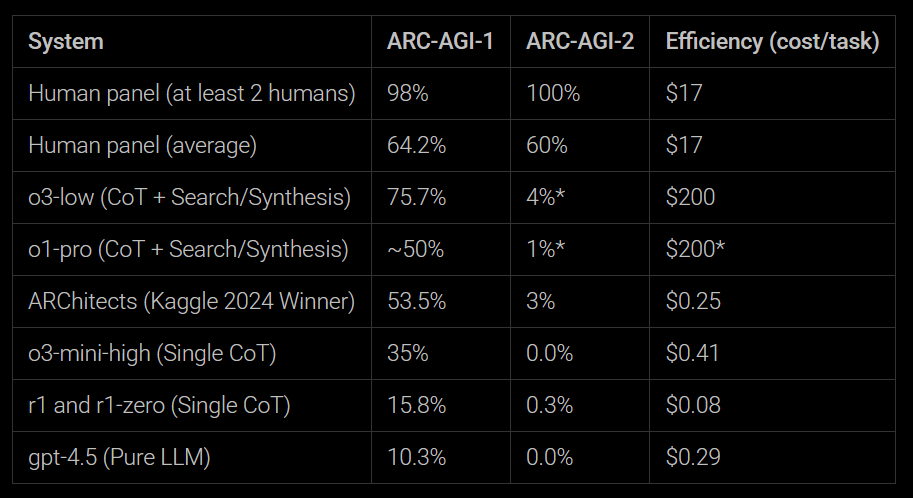

A few days ago, the ARC Prize Foundation launched ARC-AGI-2, a new visual reasoning benchmark that preserves its predecessor's spirit: its tasks are relatively easy for people, and are solvable by humans with no prior training in two attempts or fewer. OpenAI's GPT-4.5 and o3-mini score 0%, DeepSeek's R1 and R1-Zero score 0.3%, and OpenAI's o1-pro and o3-low are estimated to score 1% and 4%, respectively.

Since the original ARC-AGI scores emerged, the benchmark has been widely criticized for neither accounting for nor limiting the compute budget. The cost per task for o3's high-efficiency mode is currently estimated at around $200, using o1-pro pricing as a reference. Because the low-efficiency mode reportedly uses approximately 172x more compute, its cost would come to an eye-watering $34,400 or so per task. Although official results are pending, o3 in high-compute mode is not expected to exceed 20% accuracy on ARC-AGI-2.
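The high-compute cost figure is a simple scaling of the per-task price by the reported compute multiplier. The minimal sketch below assumes cost grows linearly with compute, which is an assumption for illustration and not a pricing rule published by the ARC Prize Foundation. The helper `scaled_cost_per_task` is hypothetical.

```python
# Sketch: scale an estimated per-task price by a compute multiplier.
# Assumption (hypothetical, not from the ARC Prize Foundation): cost grows linearly with compute.

def scaled_cost_per_task(base_cost_usd: float, compute_multiplier: float) -> float:
    """Return the estimated per-task cost after scaling compute by `compute_multiplier`."""
    if base_cost_usd < 0 or compute_multiplier <= 0:
        raise ValueError("cost must be non-negative and multiplier positive")
    return base_cost_usd * compute_multiplier


# Figures quoted in the article: ~$200/task (o1-pro pricing) and ~172x more compute.
low_compute_cost = 200.0
high_compute_cost = scaled_cost_per_task(low_compute_cost, 172)
print(f"High-compute estimate: ${high_compute_cost:,.0f} per task")  # → High-compute estimate: $34,400 per task
```

Real token pricing is rarely perfectly linear, so treat the result as an order-of-magnitude estimate.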

With this in mind, the new leaderboard takes cost efficiency into account, which yields the following human baselines: high-scoring humans achieved 100% accuracy on the evaluation at a calculated cost of $17 per task, while the average human scored 60% at the same cost. These costs were derived from an actual $115-150 show-up fee paid to human panelists, plus a $5-per-task solve incentive. The Foundation believes the true human cost is in the $2-$5 per-task range, but reports $17 to match its data collection methodology.
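To see why pairing accuracy with cost matters, one can divide cost per task by accuracy to get the expected spend per correctly solved task. The sketch below does this for the human baselines and the o3-low estimates quoted in this article. The `Entry` class and the "cost per solved task" metric are illustrative assumptions, not the Foundation's actual scoring method.

```python
# Sketch: compare entries by expected cost per *solved* task.
# "Cost per solved task" is an illustrative metric chosen here (hypothetical),
# not necessarily how the ARC Prize leaderboard ranks entries.
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    name: str
    accuracy: float       # fraction of tasks solved, 0.0-1.0
    cost_per_task: float  # USD spent per attempted task

    def cost_per_solved_task(self) -> float:
        """Average spend needed to obtain one correct solution."""
        if self.accuracy <= 0:
            return float("inf")  # no solved tasks: cost per success is unbounded
        return self.cost_per_task / self.accuracy


# Human baselines as reported in the article; o3-low figures are the article's estimates.
entries = [
    Entry("High-scoring humans", 1.00, 17.0),
    Entry("Average human", 0.60, 17.0),
    Entry("o3-low (estimated)", 0.04, 200.0),
]

# Cheapest cost per success first.
for e in sorted(entries, key=Entry.cost_per_solved_task):
    print(f"{e.name:22s} ${e.cost_per_solved_task():>9,.2f} per solved task")
```

Under these assumed inputs, the average human pays under $30 per solved task, while the o3-low estimate lands in the thousands.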

Interestingly, the new parameters also support the following conclusion: while human performance has remained more or less stable across both ARC-AGI benchmarks (±5%) and costs have not increased, model performance has dropped dramatically across the board. This neatly illustrates what critics of the "reasoning" paradigm mean when they say that newer models lack true generalization capabilities.

The tasks in both benchmarks are similar enough that most humans can tackle them with little to no preparation. Models, in contrast, can at best generalize within a particular type of task, which is why, unlike human performance, model performance on ARC-AGI-1 fails to carry over fully, or at all, to ARC-AGI-2.

The ARC Prize 2025 is live!

In parallel with the official ARC-AGI-2 release, the ARC Prize Foundation has announced the start of ARC Prize 2025. The competition, which boasts a $1 million prize pool, will be hosted on Kaggle from March 26 to November 3. A $700,000 Grand Prize will go to an open-source solution that scores 85% or higher and meets all other submission requirements. As in the 2024 competition, a $50,000 Paper Prize will go to the submission that shows significant conceptual progress without necessarily achieving a top score, while $75,000 is set aside for other high-scoring solutions. A further $175,000 in prizes has yet to be announced.
