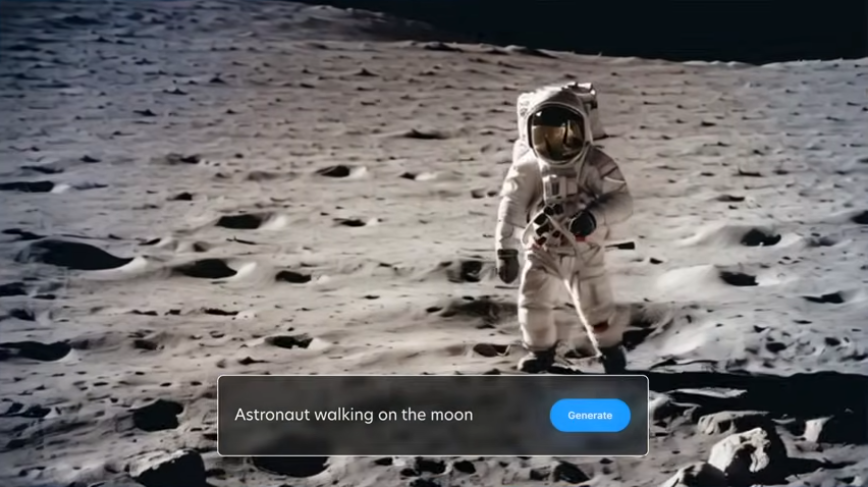

On December 20, Stability AI added Stable Video Diffusion, its foundational video generation model, to the lineup of models available through the Stability AI Developer Platform API, so developers can integrate the model's video generation capabilities into their own AI-powered applications. The API is one of several ways Stability AI is widening access to the model. It is also available for local hosting through the Stability AI Membership, and it will soon be accessible through a web interface. Anyone who wants to try the web interface as soon as possible can join a waitlist through the Stability AI contact form.

Stable Video Diffusion can generate 2 seconds of 24 fps video in around 40 seconds. Each clip is made up of 25 model-generated frames plus 24 frames of FILM interpolation. The model supports multiple layouts and resolutions, including 1024x576, 768x768, and 576x1024. Other features include watermarking, JPEG and PNG support, seed-based control that lets developers choose between random and repeatable generation, and MP4 output to maximize compatibility with other platforms and applications.
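The numbers above fit together with a little arithmetic. The following Python sketch uses only the figures quoted in this article to show why 25 generated frames plus 24 interpolated frames give roughly 2 seconds at 24 fps, and which aspect ratios the listed resolutions correspond to. The helper names are hypothetical and are not part of any Stability AI SDK. The comment about one interpolated frame per gap is an assumption that is consistent with the counts but not stated in the article.

```python
from math import gcd

# Figures quoted in the article.
GENERATED_FRAMES = 25     # frames produced directly by the diffusion model
INTERPOLATED_FRAMES = 24  # extra frames added by FILM interpolation
FPS = 24                  # playback rate of the output clip

# Output layouts listed in the article, as (width, height) in pixels.
LAYOUTS = [(1024, 576), (768, 768), (576, 1024)]


def clip_duration(generated: int, interpolated: int, fps: int) -> float:
    """Return the clip length in seconds for a frame budget and frame rate."""
    # Assumption: 25 generated frames leave 24 gaps, so 24 interpolated
    # frames would fit as one FILM frame per gap (49 frames in total).
    total_frames = generated + interpolated
    return total_frames / fps


def aspect_ratio(width: int, height: int) -> str:
    """Reduce a width x height pair to its simplest ratio, e.g. '16:9'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


if __name__ == "__main__":
    total = GENERATED_FRAMES + INTERPOLATED_FRAMES
    seconds = clip_duration(GENERATED_FRAMES, INTERPOLATED_FRAMES, FPS)
    print(f"{total} frames at {FPS} fps = {seconds:.2f} s")
    for w, h in LAYOUTS:
        print(f"{w}x{h} -> {aspect_ratio(w, h)}, {w * h:,} pixels per frame")
```

Running it shows 49 frames at 24 fps, which comes to about 2.04 seconds. The three layouts work out to 16:9 landscape, 1:1 square, and 9:16 portrait. All three have the same per-frame pixel count (589,824), so switching layouts changes the shape of the frame but not its size.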

The Stability AI Developer Platform also provides API access to several image generation models, including the company's flagship Stable Diffusion XL. With Stable Video Diffusion added, customers can use a single platform for both image and video generation. More information on the available models and pricing is on the Stability AI Platform website.
