Every weekend, we bring together a selection of the week's most relevant headlines on AI. Each weekly selection covers startups, market trends, regulation, debates, technology, and other trending topics in the industry.

India is launching an AI advisory following the Google Gemini incident

On Friday, India's Ministry of Electronics and IT issued an advisory requiring significant tech firms to obtain government permission before launching new models. The advisory also urges firms to guarantee that their services and products do not foster discrimination, bias, or threats to the integrity of India's upcoming elections. It also asks that AI-generated outputs be appropriately labeled to warn users about their possible and inherent flaws. The advisory follows an incident in which Google Gemini responded that some unidentified experts consider some policies implemented by Indian PM Narendra Modi's government to be fascist.

The Ministry has admitted that the advisory is not legally binding, but Rajeev Chandrasekhar, India's Minister of State for Electronics and IT, has stated that it signals the future of regulation. If this is true, it marks a reversal from India's previous strategic decision not to interfere with the growth of AI. Many in the tech industry have expressed their discontent with the new advisory, with little regard for the fact that both the Gemini incident and the advisory are unfolding against a sociopolitical background in which eight of the ten most populous nations, representing almost half of the world's population, are holding federal elections this year.

Researchers are looking into optical computing as a next-generation foundation for AI

It is now well known that the hardware currently serving as a foundation for neural networks is becoming increasingly power-hungry. Because of this, some researchers are looking into optical computing as a faster and safer alternative for performing the operations that underlie many complex computing tasks. A research group recently designed microchips that perform vector-matrix multiplication, an operation essential to building neural networks. Unlike conventional microchips, which perform calculations one line at a time, the new devices perform the whole operation at once, eliminating the need to store data at intermediate stages. This feature could make the devices less vulnerable to hacking. Still, scaling this kind of device has proven difficult. In their recently published paper, the researchers design and demonstrate a vector-matrix product for a 2 × 2 matrix and a 3 × 3 matrix. Using a two-dimensional design method that bypasses the traditionally required 3D simulations, they were also able to design (but not demonstrate) a device for a 10 × 10 matrix.
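For readers unfamiliar with the operation, here is a minimal Python sketch of a vector-matrix product on a conventional processor. It is purely illustrative and is not taken from the researchers' paper; the function name `vector_matrix_product` and the sample values are assumptions made for this example. The running `result` list plays the role of the intermediate data that a step-by-step digital computation has to keep in memory.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def vector_matrix_product(v: Vector, m: Matrix) -> Vector:
    """Return the product v x m, where v has n entries and m has n rows."""
    if len(v) != len(m):
        raise ValueError("vector length must equal the number of matrix rows")
    n_cols = len(m[0])
    if any(len(row) != n_cols for row in m):
        raise ValueError("all matrix rows must have the same length")

    # Partial sums are stored here and updated one matrix row at a time.
    result = [0.0] * n_cols
    for i, v_i in enumerate(v):
        for j in range(n_cols):
            result[j] += v_i * m[i][j]
    return result


# 2 x 2 case: [1*3 + 2*5, 1*4 + 2*6]
print(vector_matrix_product([1.0, 2.0], [[3.0, 4.0], [5.0, 6.0]]))

# 3 x 3 case: [1*1 + 0*4 + 2*7, 1*2 + 0*5 + 2*8, 1*3 + 0*6 + 2*9]
print(vector_matrix_product([1.0, 0.0, 2.0],
                            [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]))
```

The nested loop touches one row at a time and accumulates partial sums, which is the kind of staged computation the optical devices are reported to avoid by producing the whole result in a single pass.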

Amazon Bedrock announced the addition of the Claude 3 family to its model lineup

Access to Claude 3 Sonnet started March 4, with Opus and Haiku expected to join the lineup in the coming weeks. Amazon Bedrock's announcement lauded Claude 3 Opus' state-of-the-art performance. It also highlighted that many of Bedrock's customers, including Pfizer, KT, Perplexity AI, and The PGA Tour, are already building their generative AI applications using Claude. The announcement also places the addition of the Claude 3 models to Bedrock's selection in the context of last year's collaboration agreement between Anthropic and Amazon, according to which Amazon would invest up to $4 billion in Anthropic. According to Amazon, including the Claude 3 models is also a continuation of its commitment to expanding its offerings in all the layers of the generative AI stack: infrastructure, models, and user-facing applications.

DataCebo is an MIT spinout helping organizations create synthetic data for applications like model training and testing software

DataCebo offers a system called Synthetic Data Vault (SDV) that helps organizations create synthetic data that matches the statistical properties of their non-synthetic data. SDV has been downloaded over a million times and used by over 10,000 data scientists to generate tabular synthetic data. SDV has become so popular that the data science platform Kaggle hosted a competition in 2021 in which data scientists had to build a solution that predicted a company's non-synthetic data outcomes using synthetic data. Moreover, since its creation in 2020, DataCebo has developed SDV features directed at larger organizations. Its flight simulator enables airlines to prepare for rare weather events for which the historical data is insufficient. Another user has used SDV to synthesize medical records to predict outcomes for cystic fibrosis patients. More recently, DataCebo released the SDMetrics library, which lets users evaluate the realism of their generated data. Another tool, SDGym, enables users to compare the performance of different models.
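To make the idea of "matching statistical properties" concrete, here is a deliberately simplified Python sketch. It does not use SDV and does not reflect how SDV works internally. The functions `fit_column_stats` and `sample_synthetic` and the toy `real` table are all hypothetical names and data invented for this illustration. The sketch fits only a mean and standard deviation per numeric column and samples each column independently from a normal distribution, so it ignores the correlations between columns that a real synthetic data tool would need to capture.

```python
import random
import statistics
from typing import Dict, List, Tuple

Table = Dict[str, List[float]]


def fit_column_stats(real: Table) -> Dict[str, Tuple[float, float]]:
    """Estimate (mean, standard deviation) for every numeric column."""
    return {
        name: (statistics.mean(values), statistics.stdev(values))
        for name, values in real.items()
    }


def sample_synthetic(stats: Dict[str, Tuple[float, float]],
                     n_rows: int, seed: int = 0) -> Table:
    """Draw n_rows values per column from a normal distribution.

    Assumption: columns are sampled independently, so any
    relationship between columns in the real data is lost.
    """
    rng = random.Random(seed)
    return {
        name: [rng.gauss(mu, sigma) for _ in range(n_rows)]
        for name, (mu, sigma) in stats.items()
    }


# Toy "real" table (made-up values, for illustration only).
real = {
    "age": [23.0, 35.0, 41.0, 29.0, 52.0, 47.0, 38.0, 31.0],
    "income": [31.0, 48.0, 55.0, 39.0, 72.0, 66.0, 50.0, 43.0],
}

stats = fit_column_stats(real)
synthetic = sample_synthetic(stats, n_rows=5000, seed=42)

for column in real:
    print(column,
          "real mean:", round(statistics.mean(real[column]), 2),
          "synthetic mean:", round(statistics.mean(synthetic[column]), 2))
```

Comparing the per-column means and standard deviations of the real and synthetic tables is a crude version of the kind of realism check that the article attributes to SDMetrics.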

Multiverse Computing secured 25 million EUR in an oversubscribed Series A funding round

Columbus Venture Partners led the oversubscribed Series A, with the participation of Quantonation Ventures and new investors, including the European Innovation Council Fund, Redstone Quantum Fund, and Indi Partners. Multiverse Computing is an industry leader in quantum and quantum-inspired computing methods. The funding will enable Multiverse to continue developing the quantum and quantum-inspired algorithms that power its flagship product, Singularity, and the recently launched LLM compressor, CompactifAI. Singularity harnesses the power of quantum and tensor network algorithms so that users with no background in quantum computing can apply it to optimization problems and other applications in industries as diverse as finance, energy, manufacturing, life sciences, cybersecurity, and defense.

Lakmoos raised 300K EUR in pre-seed funds to simulate target group decision-making

The AI martech startup Lakmoos secured funding from Presto Ventures to build data models that simulate an organization's target group and provide instant market research. Lakmoos began its journey by mapping the market research process in 20 companies of different sizes, from Packeta to Microsoft. The startup found that every organization devoted more than half of the time allocated for research to administrative tasks unrelated to interactions with data or participants. The company came up with a solution that replaces human respondents with data models designed to reflect the dominant opinion of the client's target group. Lakmoos' proprietary models combine publicly available, partner, and proprietary data to instantly answer business-related questions. Since Lakmoos' models are layered, the top layer of each model is the only one customized to the client's specifications. Thus, each client's proprietary data always stays with that client, assuring them that it will not be used to train competitors' models. Lakmoos plans to direct the investment into scaling its sales with test kits for the banking, automotive, telco, energy, and healthcare industries.

Snowflake announced a global partnership with Mistral AI to incorporate their models into the Snowflake Data Cloud

The multi-year partnership includes an investment by Snowflake Ventures in Mistral's Series A and the availability of the Mistral models on Snowflake Cortex, Snowflake's solution to help clients integrate generative AI into their businesses to support several use cases, from sentiment analysis and translation to assistants and chatbots. Snowflake Cortex LLM Functions is now in public preview, enabling any user with SQL skills to leverage small LLMs for specific sentiment analysis, translation, and summarization tasks. Python developers can build more complex applications, including RAG and chatbots, within Streamlit in Snowflake. The partnership with Mistral and the development of Snowflake Cortex reflect the company's commitment to bringing AI to businesses of all sizes. Moreover, Snowflake has pledged to fulfill this commitment in an open, safe, and responsible way by joining the AI Alliance.

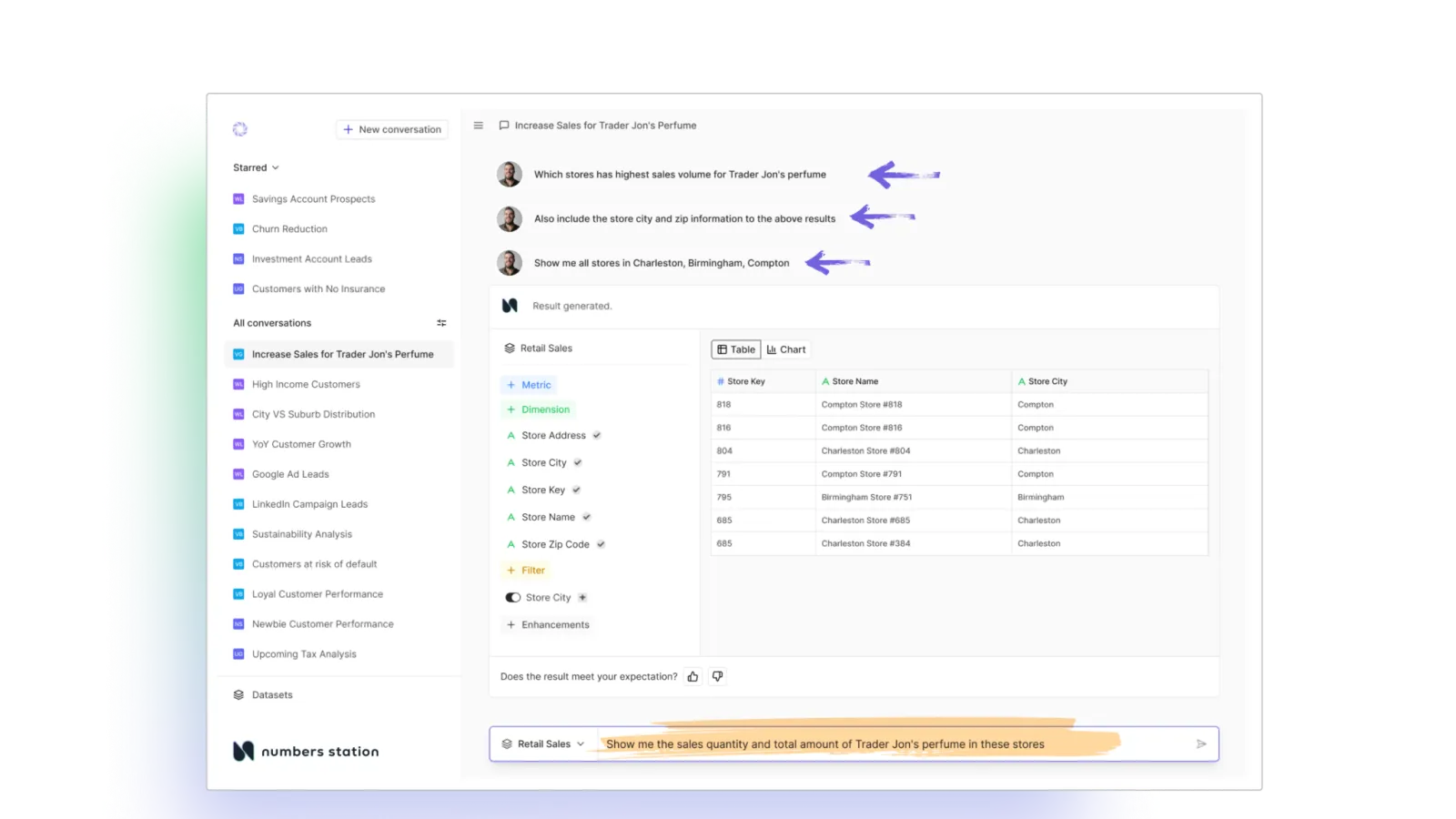

Numbers Station launched Numbers Station Cloud to make its enterprise product accessible to organizations of all sizes

The full-stack platform lets users discover and verify hypotheses using their business data via a natural language chat interface enriched with memory and with capabilities for summarizing business data and metrics. To make its AI-generated responses trustworthy and accurate, Numbers Station pairs each one with an explanation so users can assess whether it is correct. Its technology also accounts for users' intent and imprecision, handling the vagueness of some queries by learning from other users and asking for clarifications when needed. Finally, the auto-generated semantics evolve alongside the users' data. When Numbers Station recognizes that there has been a change in the dataset, it adapts by updating its semantic catalog and learning from users' feedback. Numbers Station has opened registrations for prospective users on the Numbers Station Cloud waitlist.

Cohere and Accenture announce collaboration to assist in scaling generative AI enterprise use

The partnership will enable Accenture to deliver solutions powered by Cohere's Command and Embed models and its Rerank search tool. The Command models excel at summarization and chat applications, while the Embed model supports search and RAG applications. Rerank performs semantic searches so users can obtain relevant and accurate results. In a successful early project, Cohere's Command model now powers Accenture's knowledge agent for its Finance and Treasury departments. The Command model enables the knowledge agent to summarize key financial insights before they are delivered to the teams via customized alerts and notifications. The partnership is expected to produce data security and privacy-compliant enterprise-grade AI solutions that clients can customize to address their needs, leveraging Cohere's fine-tunable models and proprietary datasets in parallel with Accenture's infrastructure, data and industry expertise.

OpenAI had another tumultuous week

- The company published a reply to Elon Musk's lawsuit claims: In addition to detailing how OpenAI is working to develop AGI that benefits humanity and revealing some facts about its relationship with Musk, OpenAI clearly stated its intent to move to dismiss all of Musk's claims. The revealed details are pretty straightforward and backed by appended email screenshots: both OpenAI and Musk soon realized that the financial and computing resources needed to fulfill OpenAI's mission far exceeded the amounts that could be reasonably raised through a non-profit. As the for-profit structure was discussed, Musk wanted OpenAI to merge with Tesla or to have exclusive control over the company himself, and OpenAI's refusal led to Musk's departure. The reply also makes the case that OpenAI's technology and products are broadly available. It concludes by stating that Elon Musk was fully aware that the goal was not to open-source AGI.

- The WilmerHale review into Sam Altman has officially concluded; Altman and Greg Brockman will continue to lead OpenAI: WilmerHale interviewed former board members, current executives, advisors to the prior OpenAI Board, and other relevant witnesses, and reviewed thousands of documents and corporate actions. The current Board considered the resulting record alongside the recommendation of the Special Committee and unanimously ratified Sam Altman and Greg Brockman's continued leadership of OpenAI. The key finding in the WilmerHale review is that the former Board's public statement truthfully recounted its decisions and rationale and that the Board at the time believed that the actions of November 17 were the best course of action and could not have anticipated the events that followed. Finally, the current OpenAI Board announced improvements to OpenAI's governance structure, including strengthening OpenAI's Conflict of Interest Policy, introducing a new set of corporate guidelines, creating a whistleblower hotline, and creating additional Board committees.

- The OpenAI Board appointed three new members in addition to Sam Altman's reinstatement: Dr. Sue Desmond-Hellmann, Nicole Seligman, and Fidji Simo joined the OpenAI Board of Directors, where they will contribute their expertise in leading global organizations and navigating complicated regulatory landscapes. Dr. Desmond-Hellmann is a physician who serves on the boards of Pfizer and the President's Council of Advisors on Science and Technology. She also has a background in non-profit governance, as she served as Chief Executive Officer of the Bill & Melinda Gates Foundation from 2014 to 2020. Nicole Seligman is a renowned lawyer and corporate and civic leader, serving on the corporate boards of Paramount Global, MeiraGTx Holdings PLC, and Intuitive Machines, Inc. She also holds leadership roles at Schwarzman Animal Medical Center and The Doe Fund in New York City. Fidji Simo is the Chief Executive Officer and Chair of Instacart and a member of Shopify's Board of Directors. She is also the founder of the Metrodora Institute, a research center and medical clinic that focuses on treating and managing neuroimmune axis disorders, and the President of the Metrodora Foundation.

Ema is coming out of stealth to build an AI employee that can handle mundane tasks

Ema (Enterprise Machine Assistant) lets users activate specialized Personas to help their human counterparts automate their workflows. The Personas operate on Ema's patent-pending Generative Workflow Engine, which uses language prediction to map workflows from natural language conversations. Ema's Standard Personas include roles such as Customer Service Specialist (CX), Employee Assistant (EX), Data Analyst, and Sales Assistant. Ema also allows the creation of Personalized Personas tailored to the user's particular workflows. Ema is powered by a proprietary "fusion of experts" model, EmaFusion, exceeding 2 trillion parameters in size. EmaFusion combines industry-leading open and proprietary models, including Claude, Gemini, Mistral, Llama2, GPT4, GPT3.5, and Ema's proprietary models to deliver cost-effective, accurate performance. Ema is available via demo request.

Plagiarism detection platform Turnitin and fraud detection platform Inscribe have cut their staff

Last year, Turnitin CEO Chris Caren warned that AI would enable the company to sharply reduce its headcount. Now, the company is carrying out a small 15-person layoff round, which would seem trivial given the company's 900-person workforce were it not for the fact that it is taking place shortly after Caren's comments. While speaking at a 2023 event, Caren stated that he believed the company would only require about 20% of its current engineering staff and that future engineering, sales, and marketing hires would be made right out of high school rather than after college. Following Turnitin's layoffs, some sources made public that Inscribe's Board had recommended the company reduce its headcount, as market conditions had kept it from reaching its revenue goals for the year. Inscribe confirmed a reduction of under 40% of its workforce, citing market uncertainty, higher interest rates, and advances in AI as the catalysts for the shift in strategy.

Cognizant revealed its new advanced AI lab

Cognizant's Advanced AI Lab will drive the research and development of AI-related intellectual property and technologies. It will also collaborate with external stakeholders on projects including AI-for-good research, peer-reviewed research, and AI training programs. Led by Babak Hodjat and supervised by University of Texas at Austin AI Professor Risto Miikkulainen, the lab has already published 14 papers, including the award-winning Discovering Effective Policies for Land-Use Planning. The lab also holds 75 issued and pending patents, which it hopes will serve as a foundation to build cutting-edge AI-powered solutions. Cognizant is committed to maintaining a high standard around safety, security, privacy, transparency, and inclusion. In partnership with Oxford Economics, Cognizant published "New World, New Work," a study on the economic impact of AI, and is planning to release further research on AI's impact on areas such as productivity, tasks, and occupations. In parallel to the Advanced AI Lab launch, Cognizant supports AI adoption through its AI Innovation Studios to establish itself at the forefront of innovative, business-driven AI solutions.

Inflection launched the upgraded and competitive Inflection-2.5 model

The launch of Inflection-2.5 follows last November's release of Inflection-2, "the second-best LLM in the world at the time," and the launch of the personal AI assistant Pi last May. Inflection-2.5 competes with the renowned GPT-4 and Gemini models, achieving performance on par with GPT-4 while using only 40% of the training compute. Inflection-2.5 also features improvements in coding and mathematics, which are reflected in its performance on popular industry benchmarks. Pi also features real-time web search capabilities, enabling users to access up-to-date information. The Inflection-2.5 rollout has boosted Pi's user sentiment, engagement, and retention. According to Inflection, Pi sees one million daily and six million monthly users, and it has exchanged over four billion messages with them. The average conversation with Pi lasts 33 minutes, and 10% last over an hour. Inflection also claims that 60% of its weekly users return the next week, a stickiness rate it says is higher than that of its competitors.

Brevian is coming out of stealth to make it easier for business users to build custom AI agents

Brevian's no-code platform for building enterprise AI agents is initially geared toward support teams and security analysts, although it has plans to expand to other areas. Founded by Vinay Wagh and Ram Swaminathan, Brevian is emerging from stealth after securing $9 million in seed funding led by Felicis. Although the challenges around data security have been widely publicized, the team at Brevian realized the more relevant challenge was building systems that solve real enterprise problems. Their vision is that enterprise users should be able to simplify their daily tasks by creating AI agents tailored to their needs. Brevian plans to invest the funds to accelerate its product development and expand its team to keep up with the demand created by its early release program.

RagaAI released the RagaAI LLM Hub as an open-source package for the AI community

The package includes over a hundred carefully curated evaluation metrics and guardrails, yielding one of the most comprehensive tools for LLM and RAG application performance diagnostics and evaluation. The RagaAI LLM Hub aims to help developers identify and fix issues throughout the LLM lifecycle. Its test suite covers every aspect of LLM application building, from content quality, hallucinations, and relevance to safety and bias, guardrails, and vulnerability scanning. The package also includes several metrics-based tests for deeper analysis. The RagaAI LLM Hub is also available in an enterprise version featuring scalability, on-prem deployment, and dedicated support. More details on the RagaAI LLM Hub are available at RagaAI's site, docs, and GitHub. Finally, in parallel with the RagaAI LLM Hub open-sourcing announcement, RagaAI revealed it will host a hackathon featuring a sizeable prize pool plus internship and job opportunities for winning teams. Registration is open to those interested in participating.

Salesforce has announced Einstein 1 Studio, a suite of low-code tools to customize Einstein Copilot

Einstein 1 Studio consists of three low-code tools enabling users to customize different aspects of Einstein Copilot, the conversational AI assistant for CRM. Copilot Builder (currently in global beta) helps users create custom AI actions by combining existing tools with prompts and other novel components. Einstein Copilot then leverages these custom actions to perform tasks. Prompt Builder (generally available) simplifies the creation of reusable AI prompts without requiring code. Finally, using Model Builder (also generally available), users can connect with models managed by Salesforce and its partners or bring their own models to train and fine-tune on specific domains. Salesforce has also announced the Einstein Trust Layer, a collection of features ensuring security compliance. The Trust Layer includes customer-configured data masking and the ability to store audit trails and feedback data on the Data Cloud for later use. Salesforce is also launching a series of courses on Einstein Copilot and Prompt Builder to help users sharpen their AI skills. These offerings align with Salesforce's vision of delivering AI-augmented Cloud solutions that are trusted, secure, and tailored to each customer's needs.

Baseten announced its successful $40M Series B round

Baseten is working to build "the most performant, scalable, and reliable way to run your machine learning workloads," whether on a private or a hosted cloud service. During the last year, Baseten hosted machine learning inference loads for industry-leading customers such as Descript, Picnic Health, Writer, Patreon, Loop, and Robust Intelligence. The company enables developers to focus on building AI-powered products without worrying about building the infrastructure. As a leading infrastructure provider, Baseten has recently launched multi-cloud support and runtime integrations (such as TensorRT). It has also developed partnerships with AWS and GCP to ensure customers can easily access the required hardware for their workloads. The $40 million Series B was led by IVP and Spark, with the participation of existing investors Greylock, South Park Commons, Lachy Groom, and Base Case. The funding will enable Baseten to add more clouds, a new orchestration layer, and an optimization engine to its service, as well as to expand its product line.

Zapier's acquisition of Vowel was instrumental to the launch of Zapier Central

The workflow automation platform Zapier announced its acquisition of the team behind Vowel, an AI-powered tool for videoconferencing. As a result of the deal, Vowel CEO Andrew Berman is taking on the role of Director of AI at Zapier. The acquisition of the Vowel team proved essential for the development of Zapier Central, the company's latest offering. Available in public preview, the AI workspace enables customers to build, teach, and work alongside customized AI bots capable of handling tasks across over 6,000 applications. According to Zapier, over 388,000 customers have used the company's solutions and integrations to automate over 50 million tasks. The acquisition of Vowel and the launch of Zapier Central are a continuation of Zapier's commitment to making AI accessible to its customers, regardless of their technical expertise or the size of their organizations.
