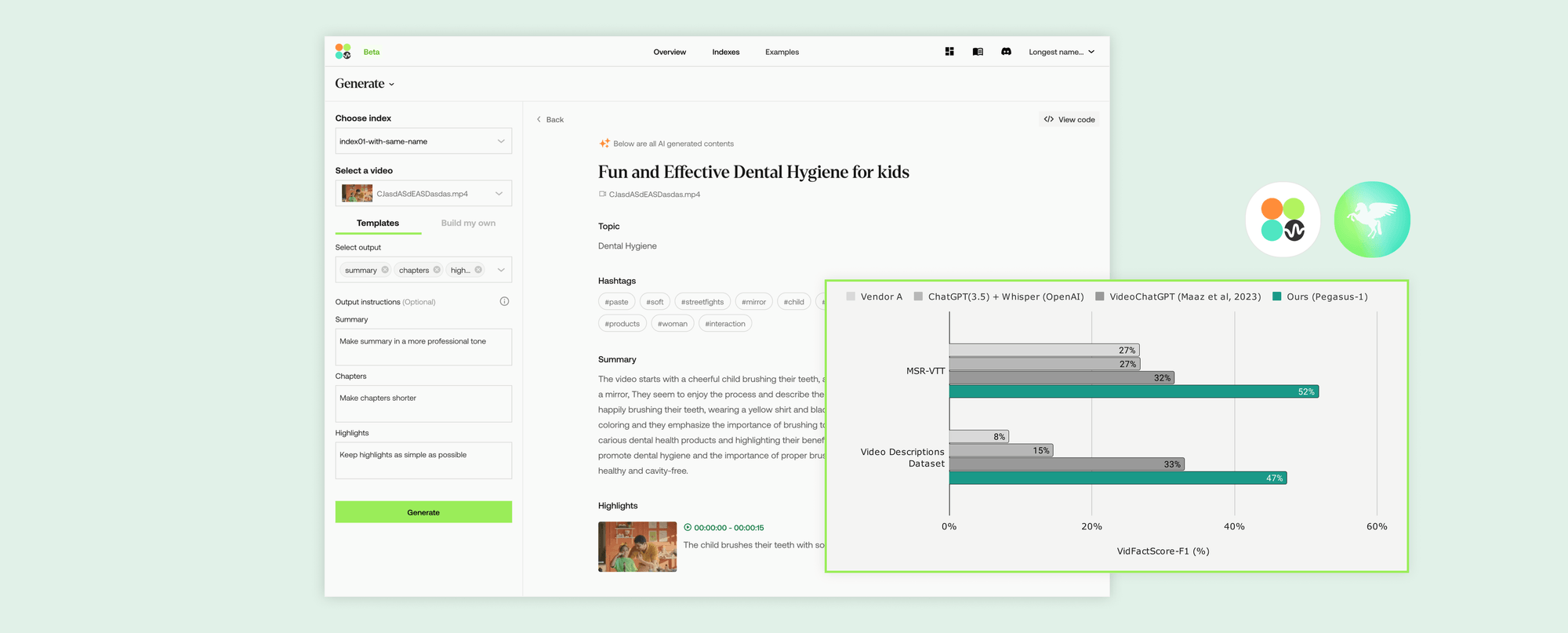

Twelve Labs recently revealed the capabilities of Pegasus-1, its latest video-language foundation model. Pegasus-1 will be available together with a suite of video-to-text APIs: Gist, Summary, and Generate. The Gist API's principal task is to identify a video's title, topics, and related hashtags. The Summary API handles summarization tasks, producing summaries, chapters, and highlights. Finally, the Generate API produces free-form text in response to users' prompts. Gist and Summary come pre-loaded with the prompts they need, so they work out of the box, whereas Generate responds to formatting and styling requests and can deliver anything from reports to bullet points and even lyrics.
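The split between preset and free-form APIs can be illustrated with a small sketch. It is not Twelve Labs' SDK: the task names, the `effective_prompt` helper, and the preset prompt strings are all hypothetical, since the article does not publish the prompts Gist and Summary actually use. It only shows the idea that preset tasks need no user input while Generate depends entirely on the caller's prompt.

```python
from typing import Optional

# Hypothetical built-in prompts standing in for whatever Gist and Summary
# use internally; the real prompts are not described in the announcement.
PRESET_PROMPTS = {
    "gist": "List a title, topics, and hashtags for this video.",
    "summary": "Summarize this video, with chapters and highlights.",
}


def effective_prompt(task: str, user_prompt: Optional[str] = None) -> str:
    """Return the prompt a hypothetical video-to-text call would send."""
    if task in PRESET_PROMPTS:
        # Preset tasks work out of the box: no user prompt is needed.
        return PRESET_PROMPTS[task]
    if task == "generate":
        # Generate is free-form, so the caller must describe the output
        # (format, style, length, and so on).
        if not user_prompt:
            raise ValueError("generate requires a prompt")
        return user_prompt
    raise ValueError(f"unknown task: {task!r}")


print(effective_prompt("summary"))
print(effective_prompt("generate", "Write the key points as song lyrics."))
```

In this sketch, a formatting request such as "bullet points" or "lyrics" only ever reaches the model through the Generate path.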

Pegasus-1 is the culmination of Twelve Labs' "Video-First" approach. Many state-of-the-art models treat video-to-text as either an image-understanding or a speech-recognition problem. Image-understanding approaches usually reduce video understanding to training on a selection of video frames paired with text embeddings, and they share a pervasive limitation: a small maximum input size. Longer videos therefore require ever more frame and text embeddings, which quickly becomes impractical, and the approach ignores the audio in the video entirely. Treating video-to-text as an audio-to-text problem, on the other hand, reduces the solution to transcription, which overlooks the multimodal nature of video and discards the visual content along with any non-speech audio.
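A quick back-of-the-envelope calculation shows why frame-based approaches struggle with long videos. The figures below are hypothetical and not taken from the announcement: frames sampled at 1 frame per second, 256 tokens per frame, and a 32,768-token context budget. The token count grows linearly with duration, and none of those tokens represent the audio track.

```python
def frame_tokens(duration_s: float, fps: float = 1.0, tokens_per_frame: int = 256) -> int:
    """Tokens needed to represent sampled video frames (hypothetical figures).

    Only visual frames are counted; audio is not represented at all,
    which is the second weakness of the frame-sampling approach.
    """
    frames = int(duration_s * fps)
    return frames * tokens_per_frame


CONTEXT_WINDOW = 32_768  # hypothetical LLM context budget, in tokens

for minutes in (1, 5, 15, 60):
    n = frame_tokens(minutes * 60)
    print(f"{minutes:>3} min -> {n:>9,} tokens, fits: {n <= CONTEXT_WINDOW}")
```

Under these assumptions a one-minute clip needs 15,360 tokens and fits, while an hour-long video needs 921,600 tokens, far beyond the budget. Lowering the sampling rate reduces the count but skips more of the video.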

Pegasus-1 is built from three components: a video encoder, a video-language alignment model, and a large language model (LLM) that acts as the decoder. The encoder takes video as input and returns video embeddings that combine visual, audio, and speech information. The alignment model then maps these embeddings into the LLM's input space, so that the LLM can interpret them just as it interprets text tokens. Finally, the LLM processes the video embeddings according to the user's prompt and presents the result as coherent, readable text.
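The data flow through the three components can be sketched with toy vectors. The announcement does not say how the alignment model is implemented, so this sketch assumes a common pattern in open vision-language models: a learned linear projection from the encoder's embedding width into the LLM's token-embedding width, with the projected video tokens prefixed to the prompt's text tokens. All dimensions, names, and the random "encoder" are hypothetical placeholders.

```python
import random
from typing import List

Vector = List[float]


def video_encoder(num_segments: int, dim: int, seed: int = 0) -> List[Vector]:
    """Stand-in encoder: one embedding per video segment.

    A real encoder would fuse visual, audio, and speech signals; this
    placeholder only emits random vectors of the right shape.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_segments)]


def align(video_embs: List[Vector], weight: List[Vector]) -> List[Vector]:
    """Assumed alignment step: linear projection (weight is d_llm x d_video)."""
    return [[sum(w * x for w, x in zip(row, v)) for row in weight] for v in video_embs]


def build_llm_input(video_tokens: List[Vector], prompt_tokens: List[Vector]) -> List[Vector]:
    """Prefix projected video tokens to the prompt's text-token embeddings,
    so the decoder sees both in a single sequence."""
    return video_tokens + prompt_tokens


D_VIDEO, D_LLM = 8, 4  # hypothetical embedding widths
rng = random.Random(1)
W = [[rng.gauss(0, 0.1) for _ in range(D_VIDEO)] for _ in range(D_LLM)]

video = video_encoder(num_segments=3, dim=D_VIDEO)
prompt = [[0.0] * D_LLM for _ in range(5)]  # placeholder text-token embeddings
sequence = build_llm_input(align(video, W), prompt)
print(len(sequence), len(sequence[0]))  # 8 tokens, each of width 4
```

The key property is that after alignment, video tokens and text tokens share one width and one sequence, which is what lets the LLM treat them alike.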

According to the announcement, Pegasus-1 has around 80B parameters in total. It was trained on TL-35M, a 35M-pair subset of a collection of more than 300M video-text pairs, together with approximately 1B images. The company expects to complete training on a larger 100M subset, TL-100M. Twelve Labs is also considering open-sourcing a smaller dataset to support research (it is currently accepting expressions of interest at research@twelvelabs.io). To improve the model's instruction following, Twelve Labs fine-tuned it on a high-quality video-to-text dataset that prioritizes domain diversity, comprehensiveness, and precise text annotation.

Annotations in Twelve Labs' dataset are reportedly longer than those in the closest open-source alternative, and they went through several rounds of verification and correction to maximize accuracy. Although this raised the cost per annotated sample, Twelve Labs prioritized quality over simply increasing the size of the dataset.

As has become standard, Twelve Labs notes that the model is not exempt from hallucinations, biases, or the generation of irrelevant (and potentially unsafe) content. The company also notes that while the API supports videos from 15 seconds to an hour long, the Preview is limited to 30 minutes, and performance is best for videos between 5 and 15 minutes. Despite these limitations and the work still ahead, Twelve Labs has achieved no small feat with Pegasus-1 and its video-to-text APIs.
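Anyone preparing videos for the Preview may want to check lengths before uploading. The helper below is a hypothetical client-side check, not part of Twelve Labs' API; it encodes the limits reported above (15 seconds to 1 hour for the API, 30 minutes for the Preview, best results at 5 to 15 minutes) and assumes the bounds are inclusive.

```python
# Limits as reported in the announcement, in seconds.
# Inclusive bounds are an assumption of this sketch.
MIN_S = 15
API_MAX_S = 60 * 60
PREVIEW_MAX_S = 30 * 60
OPTIMAL_S = (5 * 60, 15 * 60)


def check_duration(seconds: float, preview: bool = True) -> str:
    """Classify a video length against the reported limits (hypothetical helper)."""
    max_s = PREVIEW_MAX_S if preview else API_MAX_S
    if seconds < MIN_S:
        return "too short"
    if seconds > max_s:
        return "too long"
    if OPTIMAL_S[0] <= seconds <= OPTIMAL_S[1]:
        return "optimal"
    return "supported"


print(check_duration(10 * 60))                  # a 10-minute video
print(check_duration(45 * 60))                  # 45 minutes on the Preview
print(check_duration(45 * 60, preview=False))   # 45 minutes on the full API
```

Separating "supported" from "optimal" reflects the announcement's distinction between what the service accepts and where it performs best.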

The complete overview of results, metrics, examples, and guidelines is accessible here. Preview access to Generate is available, as are the evaluation codebase and API documentation.
