Elon Musk's xAI has unveiled its latest flagship AI model, Grok 4, alongside a new $300-per-month "SuperGrok Heavy" subscription tier. SuperGrok Heavy is not only xAI's most expensive plan but also the priciest AI subscription among major providers.

The launch introduces two variants: standard Grok 4 and Grok 4 Heavy, a "multi-agent version" that deploys multiple AI agents to collaborate on solving complex problems. During last Wednesday's live stream introducing Grok 4, Musk claimed that "[w]ith respect to academic questions, Grok 4 is better than PhD level in every subject, no exceptions", while also acknowledging that the model may lack common sense at times.

The claim about Grok 4's performance on academic questions appears to rest mostly on benchmark scores. Such benchmarks have been criticized repeatedly: they tend to test for esoteric knowledge, are not a reliable indicator of proficiency at practical tasks, and are often self-reported with no indication of how to reproduce the results.

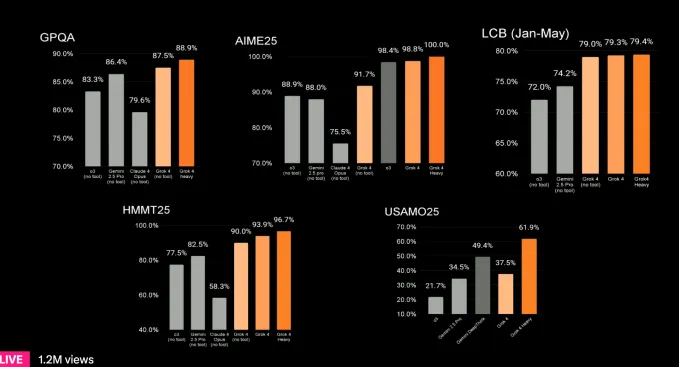

Still, xAI is right to point out that, in these tests, Grok 4 performs significantly better than other leading systems. For instance, Grok 4 (no tool access) scored 25.4% on Humanity’s Last Exam, ahead of Google’s Gemini 2.5 Pro at 21.6% and OpenAI’s o3 (high) at 21%. Similarly, Grok 4 has a reported score of 16.2% on the ARC-AGI-2 test, nearly double that of Claude 4 Opus, the highest-scoring model before the Grok 4 launch.
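To put the Humanity's Last Exam figures in perspective, the gaps can be worked out directly from the scores quoted above. The snippet below is only an illustrative sketch: the `hle_scores` dictionary and the `margins` helper are names made up for this example, and the numbers are the reported scores cited in this article, not independently verified results.

```python
# Scores as reported in the article for Humanity's Last Exam (percent correct).
# These are self-reported figures; they are used here only for arithmetic.
hle_scores = {
    "Grok 4 (no tools)": 25.4,
    "Gemini 2.5 Pro": 21.6,
    "o3 (high)": 21.0,
}


def margins(scores, leader):
    """Return {model: (gap in percentage points, relative gap in percent)} versus the leader."""
    top = scores[leader]
    result = {}
    for model, score in scores.items():
        if model == leader:
            continue
        absolute = round(top - score, 1)                # percentage points
        relative = round((top - score) / score * 100, 1)  # percent of the rival's score
        result[model] = (absolute, relative)
    return result


for model, (points, percent) in margins(hle_scores, "Grok 4 (no tools)").items():
    print(f"Grok 4 leads {model} by {points} points ({percent}% higher)")
```

On these reported numbers, Grok 4 leads Gemini 2.5 Pro by 3.8 percentage points, which is roughly 18% higher in relative terms, while the absolute scores of all three models remain low.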

As for the "SuperGrok Heavy" subscription, xAI stated that it will give paying customers access to an early preview of Grok 4 Heavy, as well as early access to new features. The company plans to roll out additional products throughout the year, including an AI coding model in August and a video-generation model in October.

Despite strong benchmark performance, xAI faces challenges in positioning Grok as a business-ready alternative to established competitors such as ChatGPT and Claude. The Grok 4 launch also comes amid controversy, following incidents in which Grok's automated X account posted antisemitic content, raising questions about the model's safety guardrails. xAI has since apologized for the incidents, claiming that they were caused by Grok's system prompts, which have been updated to prevent further 'problematic' behavior.

Even more worryingly, a user recently reported obtaining detailed instructions for synthesizing several illicit substances, including two nerve agents and fentanyl, as well as detailed information on other sensitive topics (such as committing suicide and building nuclear weapons), without using any sophisticated jailbreaking techniques. Relatedly, NeuralTrust, a security platform for generative AI, reportedly jailbroke the model within 48 hours of its release.
