What do developers want the most in 2023? Is it better documentation or different tools?

Take part in the new Developer Nation survey and shape the ecosystem! For every survey response, Developer Nation will donate to one of the charities of respondents’ choosing. Hurry up, the survey is open until February 12! Start here.

VIDEOS

The promising role of synthetic data to enable responsible innovation

A recording of our webinar by Shalini Kurapati on synthetic data and how it helps to enable responsible innovation.

If you have interesting topics or projects that you would like to share with the world in our webinars, you can submit them here.

Let's build GPT: from scratch, in code, spelled out.

In this video, Andrej Karpathy demonstrates how to build a Generatively Pretrained Transformer (GPT), following the paper "Attention is All You Need" and OpenAI's GPT-2 / GPT-3, and much more. Make sure that you watch at least parts of it!
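For a taste of the core idea, here is a minimal sketch of causal scaled dot-product self-attention, the mechanism introduced in "Attention is All You Need". It is not code from the video: the function names are our own, and as a simplifying assumption the queries, keys, and values are the raw inputs rather than learned projections.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(q, k, v):
    """Scaled dot-product attention where position t only sees positions <= t.

    q, k, v are lists of equal-length float vectors, one per token.
    Hypothetical helper for illustration only.
    """
    dim = len(q[0])
    outputs = []
    for t in range(len(q)):
        # Similarity of the current query with every key up to and including t.
        scores = [
            sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(dim)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        outputs.append([
            sum(w * v[s][j] for s, w in enumerate(weights))
            for j in range(len(v[0]))
        ])
    return outputs

# Assumption: identity projections, so the tokens serve as q, k, and v directly.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(tokens, tokens, tokens)
```

The first token can only attend to itself, so its output equals its input; later tokens become weighted mixes of everything before them.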

ARTICLES

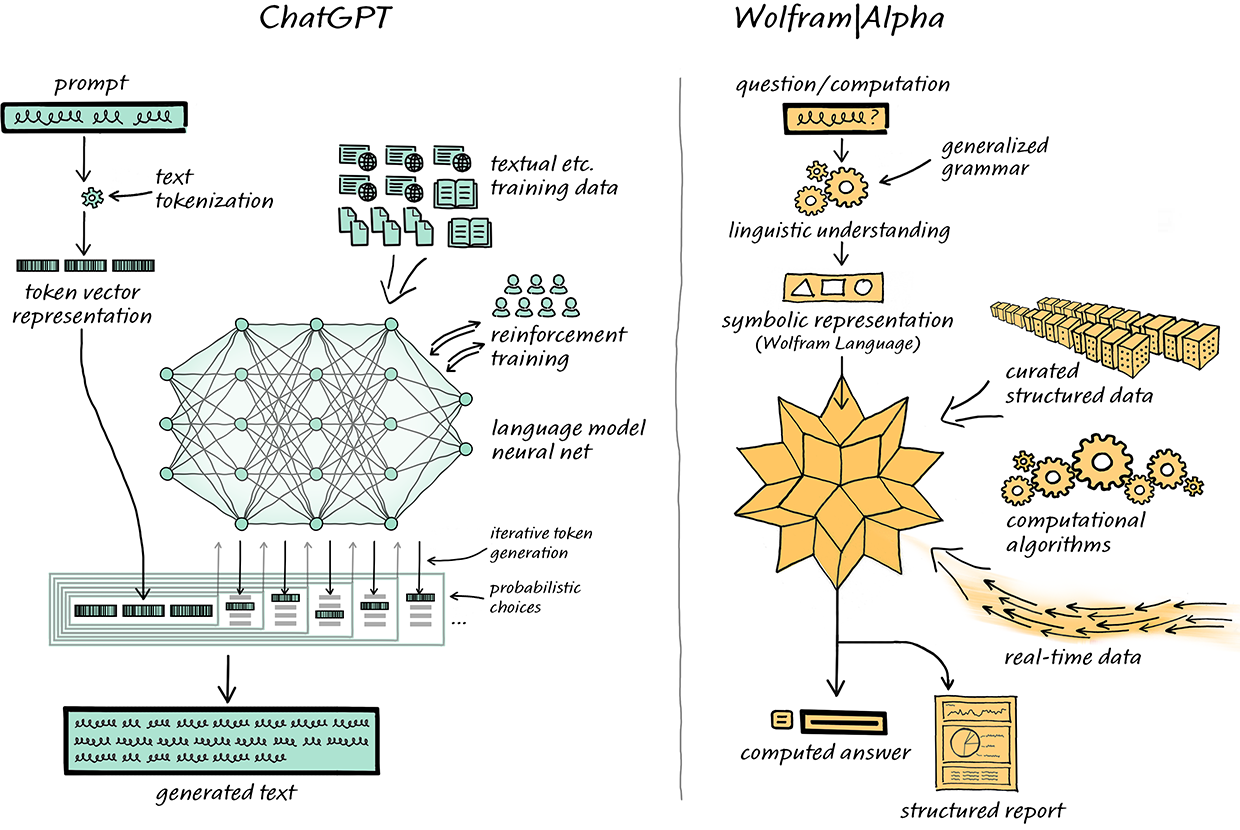

Wolfram|Alpha as the Way to Bring Computational Knowledge Superpowers to ChatGPT

ChatGPT is a remarkable achievement, but not everything that’s useful to do is quite “human like”. This article explores how to combine it with Wolfram|Alpha to make something much stronger than either could ever achieve on its own.

A Jupyter Kernel for GNU Octave

GNU Octave is a long-standing member of the scientific computing ecosystem. It is a natural candidate for high-quality integration in the Jupyter ecosystem. This article presents the xeus-octave project, a Jupyter kernel for GNU Octave. Check it out!

ML Collaboration: Best Practices From 4 ML Teams

This article offers a sneak peek of what it takes to build robust, scalable, and live ML production systems. Three key takeaways from it are: 1) Efficient ML implementation needs culture; 2) Choose skills over job titles; 3) Prioritize data at all costs. Check it out for more!

Create Amazon SageMaker models using the PyTorch Model Zoo

In this blog post, the authors showcase an end-to-end example of ML inference with an object detection model from the PyTorch Model Zoo, run through SageMaker batch transform.

Self-Serve Feature Platforms: Architectures and APIs

The last few years saw the maturation of feature platforms. A feature platform handles feature engineering, feature computation, and serving computed features for models to use to generate predictions. In this article, you will learn more about them.
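To make the three responsibilities concrete, here is a toy in-memory sketch. It is not taken from the article and does not mirror any real feature platform's API: the class `TinyFeatureStore` and its methods are hypothetical names chosen for illustration.

```python
from collections import defaultdict
from statistics import mean

class TinyFeatureStore:
    """Hypothetical in-memory stand-in for a feature platform."""

    def __init__(self):
        self._definitions = {}             # feature name -> function over raw events
        self._online = defaultdict(dict)   # entity id -> {feature name: value}

    def register(self, name, fn):
        """Feature engineering: declare how a feature is derived."""
        self._definitions[name] = fn

    def materialize(self, events_by_entity):
        """Feature computation: evaluate every definition for every entity."""
        for entity_id, events in events_by_entity.items():
            for name, fn in self._definitions.items():
                self._online[entity_id][name] = fn(events)

    def get_online_features(self, entity_id, names):
        """Feature serving: look up precomputed values for a model to consume."""
        row = self._online.get(entity_id, {})
        return [row.get(name) for name in names]

store = TinyFeatureStore()
store.register("order_count", len)
store.register("avg_order_value", lambda orders: mean(orders) if orders else 0.0)

# Raw events here are simply order amounts per user (made-up sample data).
store.materialize({"user_1": [20.0, 40.0], "user_2": []})
features = store.get_online_features("user_1", ["order_count", "avg_order_value"])
```

A production platform would add persistence, freshness guarantees, and point-in-time correctness for training data, none of which this sketch attempts.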

Large Transformer Model Inference Optimization

Large transformer models are mainstream nowadays, yet running inference for them remains hard. This article looks into several approaches for making transformer inference more efficient. Learn more!

How to Use DagsHub with PyCaret

DagsHub’s new integration with PyCaret allows PyCaret users to log metrics, parameters, and data to DagsHub's remote servers using MLflow, DVC, and DDA. This article covers how to use the DagsHub Logger with PyCaret and log experiments to DagsHub.

PAPERS & PROJECTS

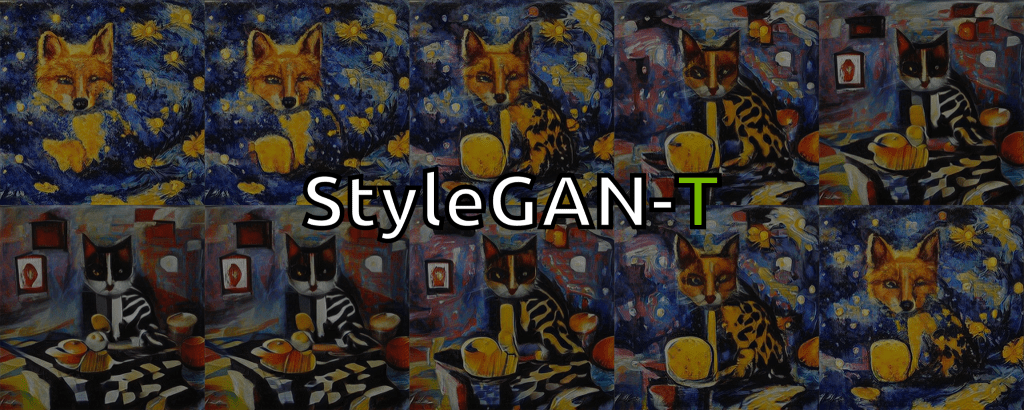

StyleGAN-T: Unlocking the Power of GANs for Fast Large-Scale Text-to-Image Synthesis

StyleGAN-T addresses the specific requirements of large-scale text-to-image synthesis to significantly improve over previous GANs and outperform distilled diffusion models in terms of sample quality and speed. Learn more about the model!

HyperReel: High-Fidelity 6-DoF Video with Ray-Conditioned Sampling

HyperReel is a novel 6-DoF video representation. Unlike other approaches, it both accelerates volume rendering and improves rendering quality, especially for challenging view-dependent scenes. Find out more about it!

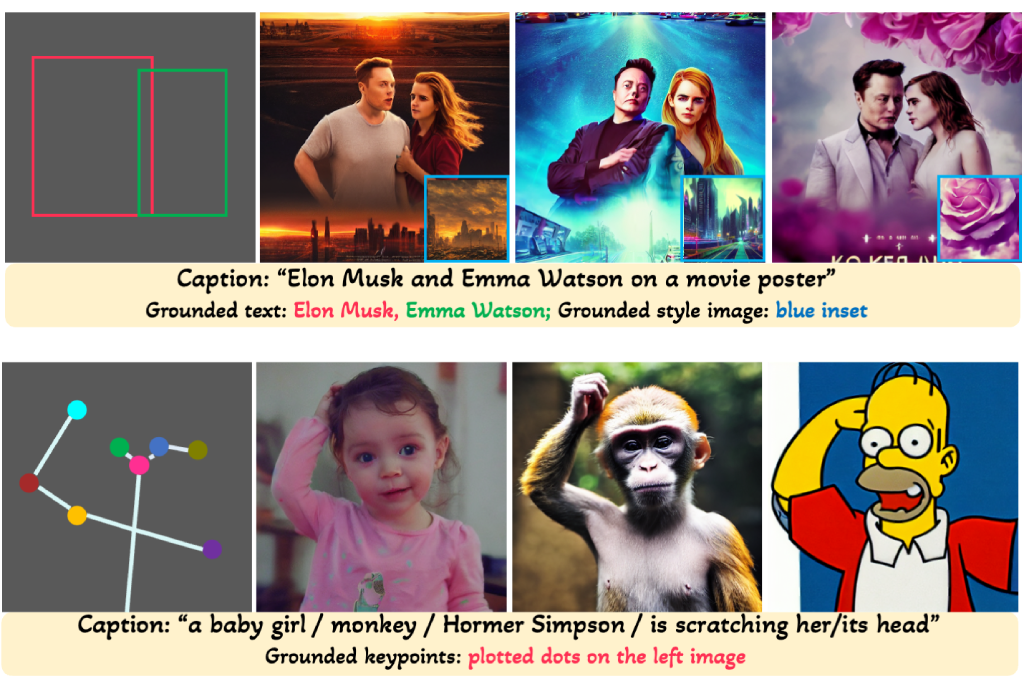

GLIGEN: Open-Set Grounded Text-to-Image Generation

GLIGEN (Grounded-Language-to-Image Generation) is a novel approach that builds upon and extends the functionality of existing pre-trained text-to-image diffusion models by enabling them to also be conditioned on grounding inputs. Take a closer look!

YOLOv6 v3.0: A Full-Scale Reloading

In the latest release, YOLOv6-N hits 37.5% AP on the COCO dataset at a throughput of 1187 FPS tested with an NVIDIA Tesla T4 GPU. YOLOv6-S strikes 45.0% AP at 484 FPS, outperforming other mainstream detectors at the same scale. Learn more!

Generative Time Series Forecasting with Diffusion, Denoise, and Disentanglement

This research proposes a coupled diffusion probabilistic model that augments time series data without increasing the aleatoric uncertainty and implements a more tractable inference process with BVAE. The resulting model, D3VAE, outperforms competitive algorithms by remarkable margins.

Designing BERT for Convolutional Networks: Sparse and Hierarchical Masked Modeling

The proposed method, called Sparse masKed modeling (SparK), is general: it can be used directly on any convolutional model without backbone modifications. The authors validate it on both classical (ResNet) and modern (ConvNeXt) models. Learn more!

Mastering Diverse Domains Through World Models

DreamerV3 is a general and scalable algorithm based on world models that outperforms previous approaches. DreamerV3 is the first algorithm that collects diamonds in Minecraft without human demonstrations or manually-crafted curricula.

BOOKS

Multimodal Deep Learning

This book explores the breakthroughs in the methodologies used in Natural Language Processing (NLP) and Computer Vision (CV). It presents a solid overview of the field, starting with the current state-of-the-art approaches in the two subfields of Deep Learning.

If you enjoyed this content, make sure to subscribe to our newsletter and share it with others who may be interested. Follow us on social networks (Telegram, Facebook, Twitter, LinkedIn, YouTube) to stay updated on upcoming webinars and get more interesting content.

For collaboration inquiries, including sponsoring one of the future newsletter issues, please contact us here.
