Citing findings that compound AI systems frequently outperform standalone foundation models, Databricks has updated its recently acquired Mosaic AI platform to support the end-to-end process of building compound AI systems. The new tools fall into three groups that roughly correspond to the stages of developing any AI system: building and deployment, evaluation, and governance.

To support model building and deployment, Databricks announced several new tools including Mosaic AI Model Training, which enables users to perform training tasks that range from fine-tuning a small model using a proprietary data set to pre-training a large model with trillions of tokens. Shutterstock partnered with Databricks to build ImageAI, a new text-to-image model trained solely on Shutterstock's archive with Mosaic AI Model Training to generate high-quality images appropriate for business contexts. Mosaic AI Vector Search with Customer Managed Keys is now generally available, as are the Mosaic AI Model Serving support for Agents and the Foundation Model API. Lastly, Databricks also announced the public preview of its Mosaic AI Agent Framework and the upcoming availability of the Mosaic AI Tool Catalog and Model Serving support for Function-Calling.

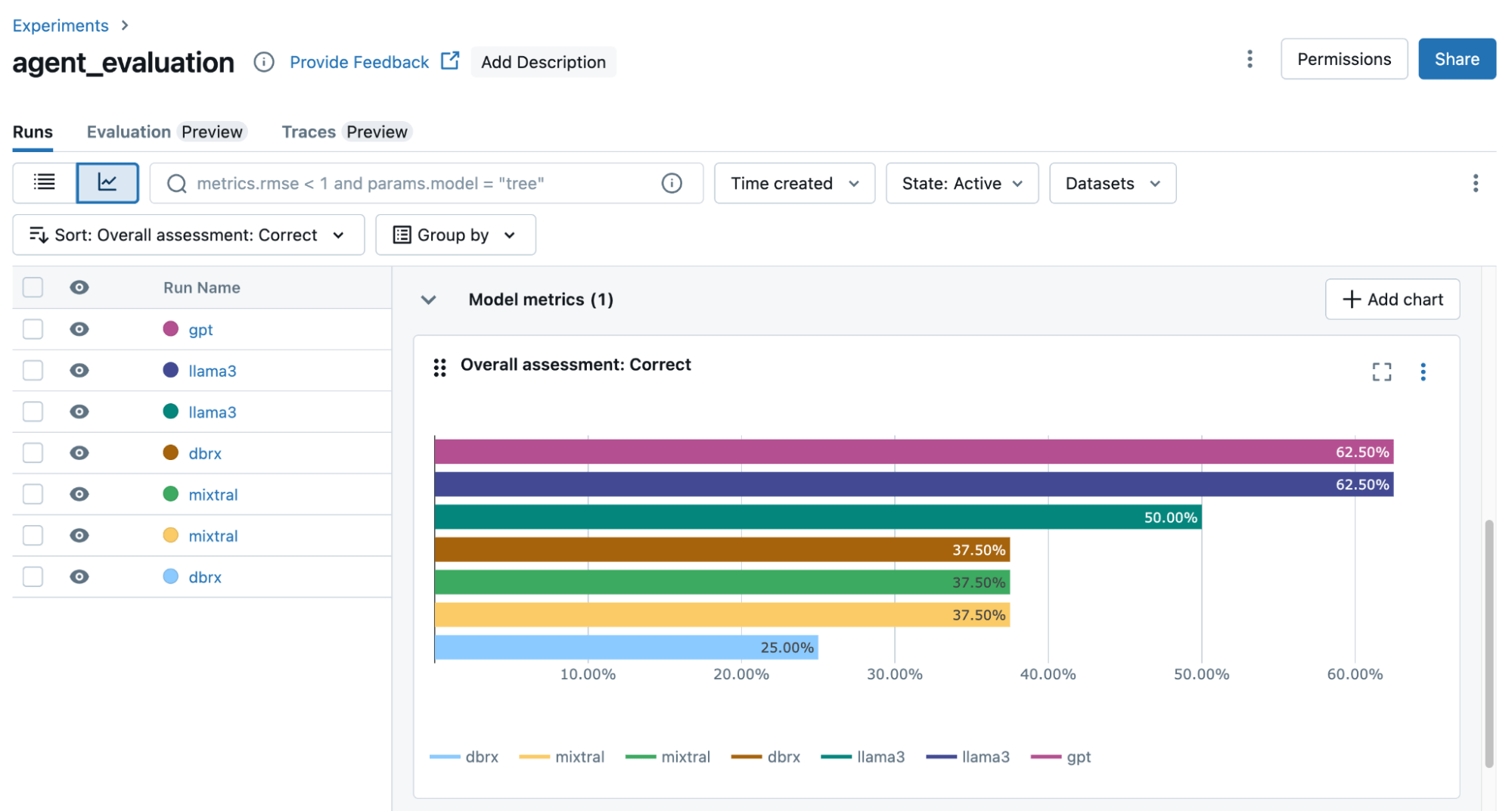

The new Mosaic AI evaluation features are intended to ensure that models are not only optimized for benchmarks but also perform as expected on the tasks they were designed for. To this end, Databricks introduced Mosaic AI Agent Evaluation for Automated and Human Assessments and MLflow 2.14. Agent Evaluation allows users to define high-quality examples of successful interactions, and then experiment with system modifications to see how they affect output quality relative to those examples. Agent Evaluation also lets users invite subject matter experts to perform additional quality assessments, which can feed into an extended evaluation dataset. In parallel, automated system-provided judges can grade model responses on common criteria such as helpfulness and accuracy. MLflow, a vendor-agnostic framework for evaluating models and AI systems, is being upgraded with MLflow Tracing, a feature that lets developers record each inference step to simplify debugging and the construction of evaluation datasets.
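
The evaluation workflow described above (a fixed set of reference examples, a judge that scores responses, and a comparison between system variants) can be illustrated with a small, library-free sketch. This is not the Mosaic AI Agent Evaluation or MLflow API: the `Example` class, the `keyword_judge` function, and the two toy systems are hypothetical names invented for illustration, and the keyword-overlap judge is a deliberately crude stand-in for the system-provided judges mentioned in the announcement.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Example:
    """One curated interaction (hypothetical structure, for illustration only)."""
    question: str
    expected_keywords: List[str]  # crude proxy for what a good answer should mention


def keyword_judge(response: str, example: Example) -> float:
    """Toy judge: fraction of the expected keywords that appear in the response."""
    if not example.expected_keywords:
        return 0.0
    text = response.lower()
    hits = sum(1 for kw in example.expected_keywords if kw.lower() in text)
    return hits / len(example.expected_keywords)


def evaluate(system: Callable[[str], str], examples: List[Example]) -> float:
    """Mean judge score of `system` over the whole evaluation set."""
    scores = [keyword_judge(system(ex.question), ex) for ex in examples]
    return sum(scores) / len(scores)


# Two stand-in "systems": the same task before and after a modification.
def baseline(question: str) -> str:
    return "Please contact support."


def revised(question: str) -> str:
    return "You can reset your password from the account settings page."


EXAMPLES = [
    Example("How do I reset my password?", ["reset", "password", "settings"]),
    Example("Where do I change my password?", ["password", "settings"]),
]

if __name__ == "__main__":
    print("baseline:", evaluate(baseline, EXAMPLES))
    print("revised: ", evaluate(revised, EXAMPLES))
```

Holding the example set fixed while swapping the system under test is what makes the two scores comparable; assessments from human experts would extend `EXAMPLES` rather than change the scoring loop.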

Finally, to help organizations anticipate and prevent governance issues such as hitting rate limits, unpredictable cost increases caused by running models on large tables, or data leaks, Databricks announced the Mosaic AI Gateway for centralized AI governance. The AI Gateway sits on top of Model Serving to enforce rate limits, permissions, and credential management for model APIs. It also includes a unified interface that enables fast API experimentation to find the model best suited to a particular use case, and Usage Tracking, which logs which user makes each API call. Similarly, Inference Tables capture the data going in and out with each call. Additionally, Mosaic AI Guardrails enable endpoint-level or request-level safety filters that can prevent harmful responses and leaks of personally identifiable information. Unity Catalog also includes the system.ai catalog of state-of-the-art open source models supported by the Mosaic AI platform.
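
To make the gateway idea concrete, the sketch below shows a proxy that sits in front of a model, enforces a per-user rate limit, and logs who made each call. It is a minimal illustration under stated assumptions, not the Mosaic AI Gateway's implementation or API: `ToyGateway`, `RateLimitExceeded`, and the fixed-window counting strategy are all hypothetical choices made for this example.

```python
import time
from typing import Callable, Dict, List, Tuple


class RateLimitExceeded(Exception):
    """Raised when a user exceeds their quota for the current window."""


class ToyGateway:
    """Hypothetical gateway: per-user fixed-window rate limit plus a usage log."""

    def __init__(
        self,
        model: Callable[[str], str],
        limit_per_window: int,
        window_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.model = model
        self.limit = limit_per_window
        self.window = window_seconds
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self._windows: Dict[str, Tuple[float, int]] = {}  # user -> (window start, calls)
        self.usage_log: List[Tuple[str, str, bool]] = []  # (user, prompt, allowed)

    def call(self, user: str, prompt: str) -> str:
        now = self.clock()
        start, count = self._windows.get(user, (now, 0))
        if now - start >= self.window:
            # The previous window has expired, so start a fresh one.
            start, count = now, 0
        allowed = count < self.limit
        self._windows[user] = (start, count + 1 if allowed else count)
        # Usage tracking: record every attempt, including rejected ones.
        self.usage_log.append((user, prompt, allowed))
        if not allowed:
            raise RateLimitExceeded(f"{user} exceeded {self.limit} calls per window")
        return self.model(prompt)


if __name__ == "__main__":
    gateway = ToyGateway(model=lambda p: p.upper(), limit_per_window=2)
    print(gateway.call("alice", "hello"))
    print(gateway.call("alice", "again"))
    try:
        gateway.call("alice", "one too many")
    except RateLimitExceeded as err:
        print("rejected:", err)
    print(gateway.usage_log)
```

Placing the limit and the log in one layer in front of the model is the point of the centralized design: every caller goes through the same checks regardless of which model is behind the endpoint. A production gateway would likely use a smoother algorithm, such as a token bucket, and persist its logs.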

These new features and capabilities are set to transform Mosaic AI into a platform with centralized governance tools and a unified interface to train, fine-tune, and deploy state-of-the-art models into robust, secure, and scalable AI systems that turn data into actionable insights.
