Phi-3 is a family of small but capable language models that, on popular benchmarks testing language, coding, and math capabilities, outperform other models of the same size as well as models in the next size class up. Their strong performance is mainly due to work by Microsoft researchers on a process for filtering and generating high-quality training data. The resulting synthetic data is a key component of the models' training. The training process for the Phi-3 models is loosely reminiscent of the one Meta described with the recent launch of the first two models in the Meta Llama 3 family, albeit at a much smaller scale.

Microsoft's first experiment began with a discrete vocabulary of about 3,000 words, split roughly equally among nouns, verbs, and adjectives. The research team then asked an LLM to write a short children's story using one noun, one verb, and one adjective from that list. Repeating this prompt millions of times produced “TinyStories”, a dataset of tiny children's stories that was used to train models with approximately 10 million parameters. The researchers then extended the approach: they replaced the word list with data filtered for its educational value and asked an LLM to synthesize it as if the model were explaining the concepts. The outputs were filtered and fed back to the LLM, and this refinement was repeated until the dataset was simple and diverse enough to train a small model. The resulting dataset came to be known as CodeTextbook.
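To make the TinyStories recipe more concrete, here is a minimal Python sketch of its prompt-generation step. The word lists, the prompt wording, and the helper names `make_story_prompt` and `build_prompt_batch` are hypothetical illustrations, not Microsoft's actual vocabulary or prompts; the call to the LLM itself, and the later filter-and-refine loop, are deliberately left out.

```python
import random

# Hypothetical miniature vocabulary. The real list had roughly 3,000 words
# split about evenly across these three parts of speech.
NOUNS = ["dog", "ball", "tree", "boat"]
VERBS = ["jump", "find", "share", "climb"]
ADJECTIVES = ["happy", "tiny", "brave", "shiny"]


def make_story_prompt(rng: random.Random) -> tuple[str, tuple[str, str, str]]:
    """Sample one noun, verb, and adjective and build a story prompt (hypothetical wording)."""
    noun = rng.choice(NOUNS)
    verb = rng.choice(VERBS)
    adjective = rng.choice(ADJECTIVES)
    prompt = (
        "Write a short story that a young child could understand. "
        f"The story must use the noun '{noun}', the verb '{verb}', "
        f"and the adjective '{adjective}'."
    )
    return prompt, (noun, verb, adjective)


def build_prompt_batch(n: int, seed: int = 0) -> list[str]:
    """Generate n prompts; each would then be sent to an LLM (call not shown)."""
    rng = random.Random(seed)  # seeded so the batch is reproducible
    return [make_story_prompt(rng)[0] for _ in range(n)]


if __name__ == "__main__":
    for p in build_prompt_batch(3):
        print(p)
```

Because each prompt draws its three words independently, a list split evenly into about 1,000 nouns, 1,000 verbs, and 1,000 adjectives allows 1,000 × 1,000 × 1,000, or about one billion, distinct word combinations, which helps explain how millions of prompts can still yield varied stories.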

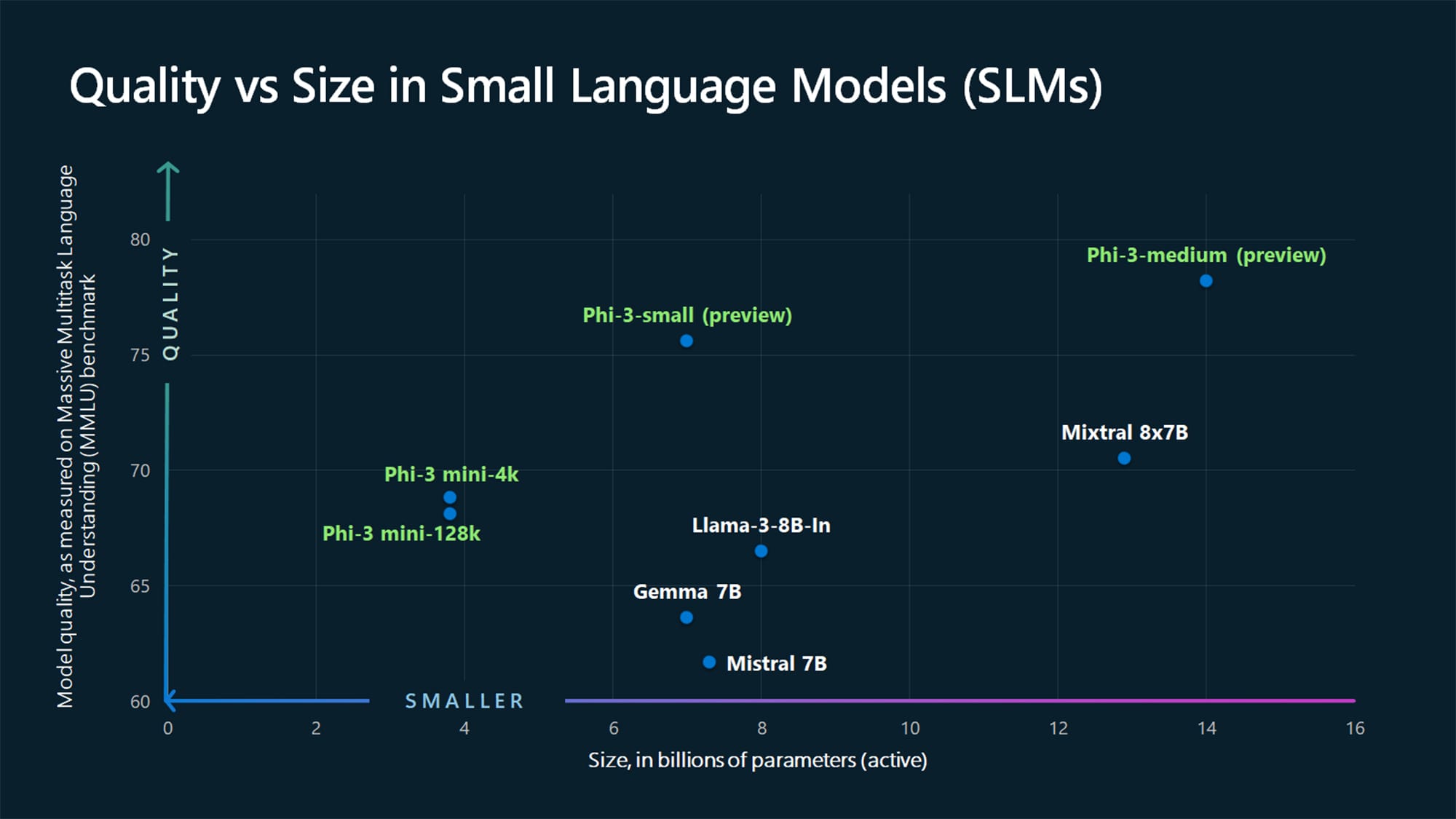

Once the models were trained, Microsoft applied additional post-training safety measures, including human assessment and red-teaming. Developers building with the Phi-3 models on Microsoft Azure can also use the platform's tools for improving security and trustworthiness. The first model to be made publicly available, in the Microsoft Azure AI Model Catalog, on Hugging Face and Ollama, and as an NVIDIA NIM microservice, is Phi-3-mini: an instruction-tuned, 3.8-billion-parameter model offered in two context-length variants, 4K and 128K tokens, with little impact on quality according to Microsoft. Phi-3-mini is optimized for ONNX Runtime with support for Windows DirectML, so it can run on a wide range of hardware, including mobile devices and offline inference settings. Microsoft expects Phi-3-mini to be joined by Phi-3-small (7B) and Phi-3-medium (14B) in the coming weeks.
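Whether a 3.8-billion-parameter model can fit on a phone is easier to judge with a quick estimate of how much memory its weights occupy. The sketch below assumes the standard storage size per parameter at each numeric precision; the article does not say which precision the ONNX Runtime builds use, and the estimate ignores activations, the KV cache (which grows with context length), and runtime overhead.

```python
# Assumed storage cost of one parameter at common precisions, in bytes.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

PHI3_MINI_PARAMS = 3.8e9  # parameter count stated for Phi-3-mini


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(PHI3_MINI_PARAMS, precision):.1f} GB")
```

For 3.8 billion parameters this gives about 7.6 GB at fp16 and about 1.9 GB at 4-bit precision, which suggests why reduced-precision weights matter for on-device inference.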
