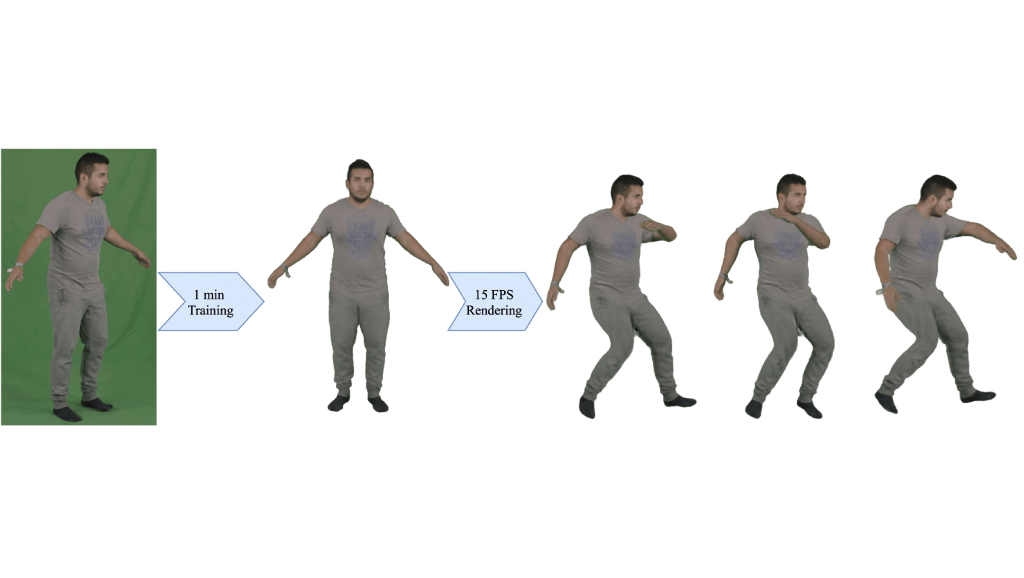

InstantAvatar: Learning Avatars from Monocular Video in 60 Seconds

InstantAvatar is a system that reconstructs human avatars from a monocular video within seconds; the resulting avatars can be animated and rendered at interactive rates. Compared to existing methods, it converges 130x faster and can be trained in minutes instead of hours.