The Allen Institute for AI (AI2), a non-profit AI research institute founded in 2014 by the late Microsoft co-founder Paul Allen, pursues impactful foundational AI research in large-scale open models, data, and robotics, among other lines of research. Earlier this year, AI2 released the OLMo model family together with its full training data; model weights; training code, logs, and metrics; over 500 checkpoints per model; evaluation code; and fine-tuning code.

The availability of these components makes OLMo fully examinable and reproducible, and makes it one of the few models that satisfy the Open Source Initiative's definition of open-source models (the same definition that upset Meta, since under it the Llama models are not really open). The same is true of the newly released OLMo-2 family, which features 7B- and 13B-parameter base models trained on up to 5T tokens.

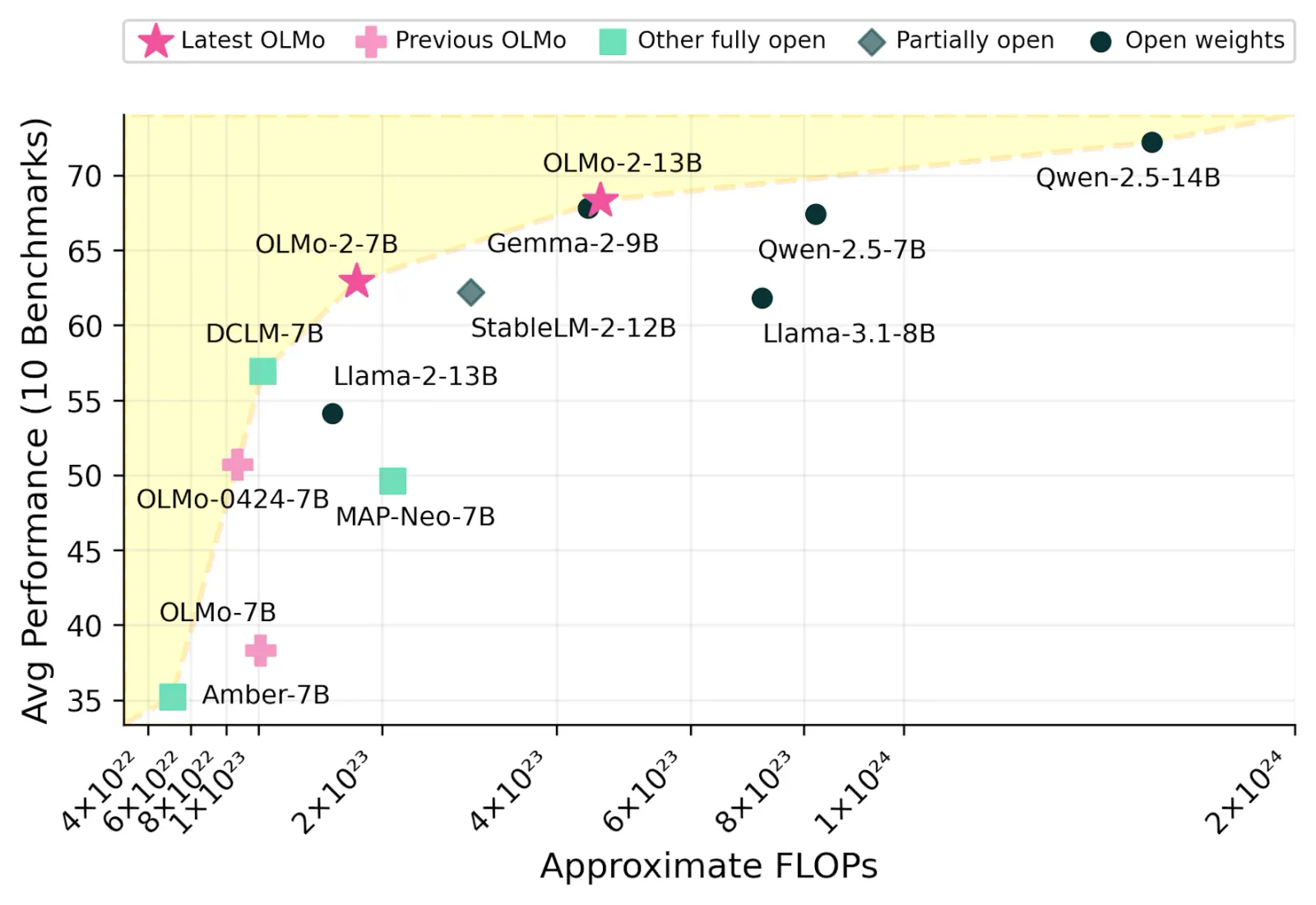

Performance-wise, the OLMo-2 models are comparable to or outperform other fully and partially open-source models. They are also competitive with popular open-weight models such as Llama 3.1, Gemma 2, or Qwen 2.5. Notably, OLMo 2 7B's average performance across the 10 benchmarks exceeds that of Llama 3.1 8B, and OLMo 2 13B fares better than Qwen 2.5 7B despite its lower total training FLOPs.

AI2 attributes the success of OLMo-2 to improvements in essential aspects of model development, including training stability, interventions during late pre-training (learning rate annealing and data curriculum), state-of-the-art post-training recipes, and an actionable evaluation framework. OLMo-2's post-training recipe was taken from the Tülu 3 pipeline released just last week.

OLMo-2's performance provides further evidence that the Tülu 3 framework will help close the performance gap between models post-trained with closed-source recipes and fully open-source models, and that it will serve as a foundation for more open-source research on LLM post-training approaches and techniques. See the blog post for the complete technical overview of how OLMo-2 was built.
