Every weekend, we bring together a selection of the week's most relevant headlines on AI. Each weekly selection covers startups, market trends, regulation, debates, technology, and other trending topics in the industry.

A brief recap of NVIDIA's GTC 2024

NVIDIA's developer conference, GTC 2024, was held on March 17–21. NVIDIA CEO Jensen Huang's keynote was packed with announcements, including the Blackwell architecture and Superchips, the Omniverse Cloud APIs, and NVIDIA's NIM and CUDA-X microservices. Of these, the Blackwell architecture, showcased in the NVIDIA GB200 Grace Blackwell Superchip, the NVIDIA B200 Tensor Core GPU, and the massive NVIDIA GB200 NVL72, undoubtedly stole the show. Besides being a major achievement for NVIDIA, Blackwell is a reminder that the company needs to sell large numbers of racks, CPUs, and GPUs to stay profitable, and that few entities have the purchasing power to make such a commitment.

Thankfully for NVIDIA, its strategic partners Amazon (AWS), Google, and Microsoft immediately announced that they would make the Blackwell platform available to their customers. AWS said it would offer Blackwell GPU-based Amazon EC2 instances; integrate the AWS Nitro System, Elastic Fabric Adapter encryption, and AWS Key Management Service with Blackwell encryption to give customers end-to-end control and stronger security for their AI applications; and build one of the world's fastest supercomputers for NVIDIA's R&D teams, featuring 20,736 B200 GPUs connected to 10,368 NVIDIA Grace CPUs. Amazon SageMaker will also integrate with NVIDIA NIM microservices, including the healthcare and life sciences-specific ones, to optimize foundation model performance. Google and Microsoft likewise confirmed that they would bring NVIDIA GB200 NVL72 systems to their cloud infrastructure, along with expanded support and integrations for most of the other announced technologies.

NVIDIA partners Oracle and SAP followed with announcements of their own. SAP is working with NVIDIA to develop SAP Business AI: it will use NVIDIA's generative AI foundry service to fine-tune LLMs and the new NVIDIA NIM microservices to deploy AI applications, with the integrated AI capabilities expected by the end of 2024. Oracle, for its part, will combine NVIDIA's full-stack AI platform with Oracle's Enterprise AI to deliver AI infrastructure that can serve countries' cultural and economic needs, and it will also adopt the NVIDIA GB200 Grace Blackwell Superchip and the NVIDIA Blackwell B200 Tensor Core GPU.

NVIDIA also scattered a few research-advancing announcements among the spotlight-stealing partnership news. It announced Earth-2, a climate digital twin cloud platform whose APIs were released as part of the NVIDIA CUDA-X microservices. The digital twin already has its first users: the Central Weather Administration of Taiwan plans to use the Earth-2 APIs to forecast typhoon landfall areas more accurately, and The Weather Company will integrate its meteorological data and Weatherverse tools with Omniverse to help build accurate digital twins for observing and understanding the impact of real weather conditions.

NVIDIA presented its DRIVE Thor centralized car computer, which integrates the new Blackwell architecture, as the platform for next-generation vehicles with "feature-rich cockpit capabilities" and "safe and secure highly automated and autonomous driving." Leading transportation companies, including BYD, Hyper, XPENG, Li Auto, ZEEKR, Nuro, Plus, Waabi, and WeRide, have adopted DRIVE Thor to power their future consumer and commercial fleets, which span electric cars, robotic public transportation units, and autonomous delivery vehicles.

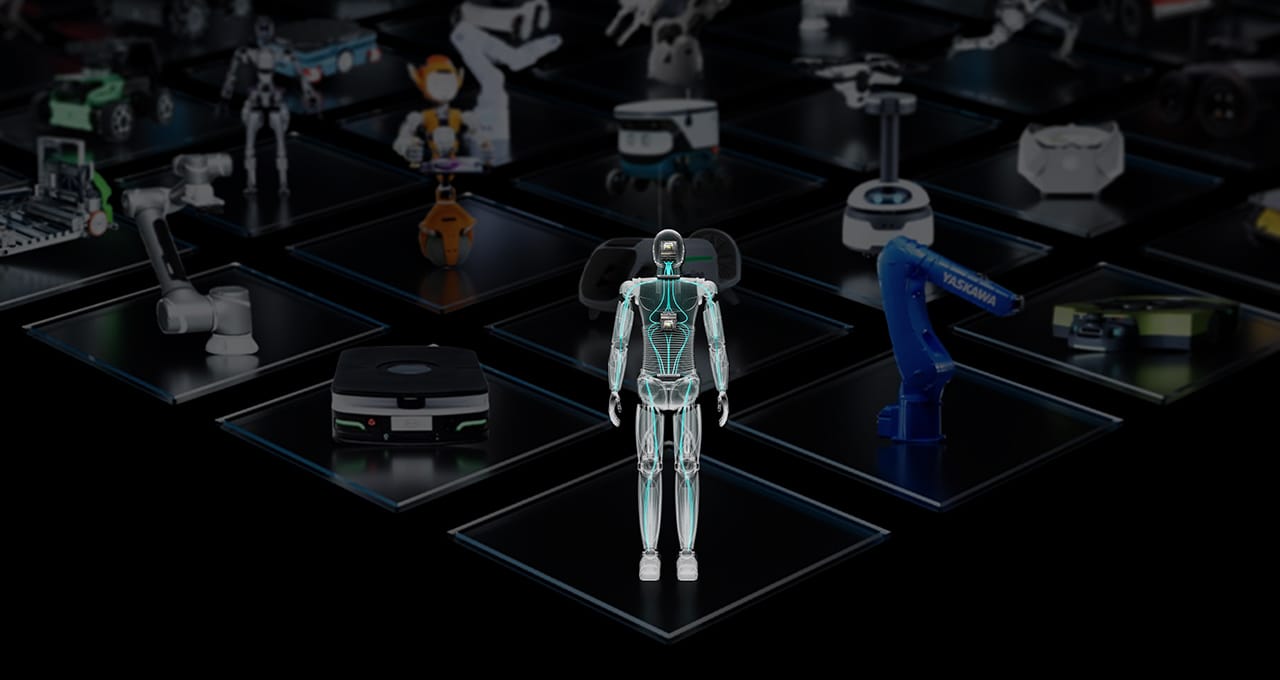

Finally, NVIDIA announced Project GR00T, a general-purpose foundation model for humanoid robots, along with significant updates to the Isaac platform, which includes tools for creating new foundation models such as Isaac Lab, a reinforcement learning platform, and OSMO, a compute orchestration service. One of the most notable parts of the project is Jetson Thor, a new computer for humanoid robots based on the NVIDIA Thor SoC. According to NVIDIA, the goal of Project GR00T is to enable humanoid robots to understand natural language, emulate human movements, and quickly learn skills such as coordination and dexterity so they can navigate and interact with the real world.

Hot startups Inflection AI and Stability AI lost essential team members this week

Microsoft recently published a blog post featuring CEO Satya Nadella's message to employees announcing a new organization, Microsoft AI, led by Inflection AI co-founder and DeepMind alum Mustafa Suleyman. Suleyman is also expected to join Microsoft's senior leadership team and report directly to Nadella. Fellow Inflection AI co-founder Karén Simonyan will join Microsoft AI as Chief Scientist, reporting directly to Suleyman, and several other Inflection employees will join them. A notable consequence is that Microsoft's Copilot, Bing, Edge, and GenAI teams now report to Suleyman. Kevin Scott remains Microsoft's CTO and EVP of AI, responsible for the company's overall AI strategy.

Inflection AI confirmed Suleyman's and Simonyan's departures in its own announcement, which also revealed a pivot toward serving developers and enterprises and making its models available through cloud hosting services and its own API, starting with Inflection-2.5 on Microsoft Azure. Inflection also welcomed Sean White as its new CEO and stated that co-founder Reid Hoffman would remain on the startup's board of directors.

A few days later, Stability AI announced the departure of CEO Emad Mostaque. Besides stepping down as CEO, Mostaque is also leaving Stability AI's Board of Directors to pursue decentralized AI. In the interim, the board appointed COO Shan Shan Wong and CTO Christian Laforte as co-CEOs while it searches for a permanent CEO. Mostaque said he was proud of what he had achieved at the company over the last two years and was confident it was in good hands. On behalf of the board, Chairman Jim O'Shaughnessy wished Mostaque well and assured the public that Stability AI was ready for this new stage in its development.

Stability AI launched Stable Video 3D and introduced Image Services on the Stability AI Developer Platform

Stable Video 3D (SV3D) is based on Stable Video Diffusion and generates novel views of an object with better consistency and quality than existing alternatives. The SV3D_u variant generates orbital videos from a single image without camera conditioning, while the SV3D_p variant accepts a single image together with orbital views as input to create 3D video along a specified camera path. The key to SV3D's performance is that it builds on an image-to-video model rather than an image diffusion model: using Stable Video Diffusion with camera path conditioning improved consistency and generalization. The SV3D technical report describes the models in more detail, presents experimental comparisons, and proposes techniques such as dynamic orbit training and a masked score distillation sampling loss to improve the quality of SV3D's outputs. SV3D is available for commercial use with a Stability AI Membership, while non-commercial users can download the weights from Hugging Face and read Stability AI's research paper.
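
SV3D_p's "specified camera path" is easiest to picture as a sequence of (elevation, azimuth) camera angles around the object. The sketch below generates such a path, either as a static orbit with constant elevation or as a "dynamic" orbit whose elevation oscillates, and converts each angle pair to a camera position on a sphere. It is an illustrative assumption, not Stability AI's code or input format: the frame count, the sinusoidal elevation schedule, and the names `orbit_path` and `camera_position` are hypothetical.

```python
import math


def orbit_path(n_frames, elevation_deg=10.0, dynamic=False, amplitude_deg=10.0):
    """Return (elevation, azimuth) pairs in degrees for one full orbit.

    Hypothetical helper: a static orbit keeps the elevation fixed, while the
    "dynamic" variant here simply varies it sinusoidally as the camera circles.
    """
    path = []
    for i in range(n_frames):
        azimuth = 360.0 * i / n_frames  # evenly spaced; 360 itself is not repeated
        elevation = elevation_deg
        if dynamic:
            elevation += amplitude_deg * math.sin(2 * math.pi * i / n_frames)
        path.append((elevation, azimuth))
    return path


def camera_position(elevation_deg, azimuth_deg, radius=2.0):
    """Convert spherical camera angles to an (x, y, z) point on a sphere around the origin."""
    el = math.radians(elevation_deg)
    az = math.radians(azimuth_deg)
    return (
        radius * math.cos(el) * math.cos(az),
        radius * math.cos(el) * math.sin(az),
        radius * math.sin(el),
    )


if __name__ == "__main__":
    for elev, azim in orbit_path(8, dynamic=True):
        x, y, z = camera_position(elev, azim)
        print(f"elev={elev:6.2f} azim={azim:6.1f} -> ({x:5.2f}, {y:5.2f}, {z:5.2f})")
```

A model conditioned on camera poses would receive one such pose per generated frame; varying the elevation is one simple way to expose it to more diverse viewpoints than a flat orbit.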

Additionally, the Stability AI Developer Platform API now includes a suite of image services that use Stability's image models to offer media tools for image generation, enhancement, and editing. These user-focused APIs simplify generation and editing tasks by removing the need for prompt engineering. The services fall into four categories by function: generation, upscaling, editing (including inpainting and outpainting), and control, an upcoming set of tools designed to produce consistent, predictable content that meets specific criteria. Because the services are exposed through Stability AI's REST API, they can be used right away; interested users can also start by reviewing the platform's documentation or experimenting with the accompanying notebook.
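
Because the image services are plain REST endpoints, a request can be built with nothing but the Python standard library. The sketch below assembles, but does not send, a multipart/form-data POST to the "generate/core" endpoint. The URL, the `prompt` and `output_format` field names, and the `Authorization`/`Accept` headers follow Stability AI's public examples from the launch period and should be checked against the current API reference; the helper name `build_core_request` and the placeholder key are hypothetical.

```python
import urllib.request
import uuid

# Endpoint as shown in Stability AI's launch examples (assumption: verify
# against the current API reference before use).
CORE_URL = "https://api.stability.ai/v2beta/stable-image/generate/core"


def build_core_request(api_key, prompt, output_format="webp"):
    """Build (but do not send) a multipart/form-data image-generation request."""
    boundary = uuid.uuid4().hex
    fields = {"prompt": prompt, "output_format": output_format}
    parts = []
    for name, value in fields.items():
        parts.append(
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n"
        )
    parts.append(f"--{boundary}--\r\n")  # closing boundary
    body = "".join(parts).encode("utf-8")
    return urllib.request.Request(
        CORE_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Accept": "image/*",  # ask for raw image bytes in the response
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

To actually send it, pass the request to `urllib.request.urlopen()` with a real API key and write the response bytes to a file; error handling and retries are omitted here.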

Stanhope AI completed a successful £2.3 million funding round to apply neuroscience to teaching machines how to make human-like decisions

Stanhope AI's funding round was led by the UCL Technology Fund, with participation from Creator Fund, MMC Ventures, Moonfire Ventures, Rockmount Capital, and several angel investors. Stanhope AI is a University College London spinout backed by UCL Business. It was founded by CEO Professor Rosalyn Moran (former Deputy Director of King's Institute for Artificial Intelligence), Director Karl Friston (Professor at the UCL Queen Square Institute of Neurology), and Technical Advisor Dr Biswa Sengupta (MD of AI and Cloud products at JP Morgan Chase). Stanhope AI's technology is based on "agentic" AI that uses principles such as Active Inference to build algorithms that continuously learn from real-time data and update their internal world models accordingly. In contrast, current AI models can only make decisions based on data they have already "seen," which is one of the main reasons they must be trained on massive datasets that consume vast amounts of energy and processing power. Stanhope AI expects its approach to deliver truly autonomous, uncertainty-minimizing AI capable of human-like reasoning on small devices, powering applications such as delivery drones. The new funding will allow the company to keep developing its agentic AI models and to research practical applications.
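
Active Inference is a broad framework, and the announcement does not describe Stanhope AI's implementation, so the following is only a minimal sketch of one ingredient: an agent updating its belief over hidden states of the world from a new observation with Bayes' rule, and measuring how surprising that observation was, -ln P(o). In Active Inference, agents minimize variational free energy, which upper-bounds this surprise and equals it when the belief matches the exact posterior, as it does here. The two-state drone scenario and all probabilities are hypothetical.

```python
import math


def update_belief(prior, likelihood, observation):
    """Bayesian belief update over discrete hidden states.

    prior[s]          -- current belief P(s)
    likelihood[s][o]  -- generative model P(o | s)
    Returns the posterior P(s | o) and the surprise -ln P(o).
    """
    joint = [p * likelihood[s][observation] for s, p in enumerate(prior)]
    evidence = sum(joint)  # P(o), marginalizing over hidden states
    posterior = [j / evidence for j in joint]
    surprise = -math.log(evidence)
    return posterior, surprise


# Hypothetical delivery-drone example: hidden state 0 = "path clear",
# 1 = "obstacle ahead"; observation 0 = "no echo", 1 = "sensor echo".
PRIOR = [0.5, 0.5]
LIKELIHOOD = [
    [0.9, 0.1],  # path clear: echoes are rare
    [0.2, 0.8],  # obstacle: echoes are likely
]

if __name__ == "__main__":
    belief, s = update_belief(PRIOR, LIKELIHOOD, observation=1)
    print(belief, s)  # belief shifts strongly toward "obstacle ahead"
```

Feeding each posterior back in as the next prior gives the kind of continuous, real-time updating of an internal world model that the paragraph describes; full Active Inference also selects actions expected to reduce future surprise, which this sketch leaves out.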

Runway announced strategic partnerships with Media.Monks and Musixmatch

The collaborative partnership with Media.Monks will bring Runway's generative AI tools and models to Media.Monks' internal teams and clients worldwide. As a digital-first marketing, advertising, and technology services company providing a wide range of content creation solutions, Media.Monks is in the ideal position to benefit from Runway's generative AI resources. Both parties expect the partnership will enhance the agency's creative and content-generation processes, broadening the variety of creative opportunities available to Media.Monks and its clients while also helping them save time and money.

Musixmatch is a music data company with an unmatched catalog of lyrics and metadata, built partly through contributions from a vibrant community of 80M users and 1M artists. The company is well known for its synchronized lyrics integrations with leading music streaming platforms and for its ability to extract meaning from lyrics. Musixmatch plans to combine that meaning-extraction capability with Runway's video generation tools to build a new video creation assistant that helps its artist community bring their music to life with AI-generated media aligned with their creative visions.

An international research team is trialing an AI tool that could predict side effects in patients undergoing breast cancer treatment

In its first stage, the tool was trained on data from 6,361 breast cancer patients to predict the incidence of lymphedema (chronic arm swelling) up to three years after treatment. After training, the AI tool correctly identified 81.6% of the patients who went on to develop the condition and 72.9% of those who did not, for an overall predictive accuracy of 73.4%, at least on paper. The physicians and researchers involved hope the tool can be used to estimate how likely a patient is to develop side effects from standard treatment protocols before treatment begins. With this information, medical teams could offer alternative treatments or preventive measures. The tool and the accompanying clinical trial were presented at the European Breast Cancer Conference in Milan, where the team also said it is developing the tool to cover other common side effects, such as skin and cardiac damage. Because the project is at such an early stage, more time and evidence are needed before the tool can be considered appropriate for clinical use.
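
The 73.4% figure is not the simple mean of 81.6% and 72.9% (which would be about 77.3%). Overall accuracy is a prevalence-weighted average, accuracy = π · sensitivity + (1 − π) · specificity, where π is the share of patients who develop lymphedema. Assuming all three figures were measured on the same patients and ignoring rounding, the formula can be inverted to back out the implied prevalence. The sketch below does that arithmetic; the roughly 5.7% prevalence it yields is our own back-of-the-envelope inference, not a number reported by the researchers.

```python
def overall_accuracy(sensitivity, specificity, prevalence):
    """Accuracy as a prevalence-weighted mix of sensitivity and specificity."""
    return prevalence * sensitivity + (1 - prevalence) * specificity


def implied_prevalence(sensitivity, specificity, accuracy):
    """Invert overall_accuracy() to solve for the prevalence it implies."""
    return (accuracy - specificity) / (sensitivity - specificity)


SENS, SPEC, ACC = 0.816, 0.729, 0.734  # figures reported for the lymphedema tool

if __name__ == "__main__":
    p = implied_prevalence(SENS, SPEC, ACC)
    print(f"simple mean of sensitivity and specificity: {(SENS + SPEC) / 2:.4f}")
    print(f"implied prevalence: {p:.3f}")
    print(f"accuracy recomputed from that prevalence: {overall_accuracy(SENS, SPEC, p):.3f}")
```

With a prevalence that low, accuracy sits close to specificity, which is why the headline number is nearer 72.9% than 81.6%.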

Georgia state representatives are using an AI deep fake video to push a bill outlawing political deep fakes

The proposed House Bill 986 would make it a felony to broadcast or publish deceptive information within 90 days of an election with the intent of influencing the results in a candidate's favor, creating confusion about the election, or manipulating its outcome. The newly created crimes of fraudulent election interference and soliciting such interference could be punished with two to five years in prison and fines of up to $50,000. Brad Thomas, a Republican co-sponsor of the bill, and his colleagues created a deep fake using the images and voices of two far-right politicians who oppose the bill, Colton Moore and Mallory Staples. The video shows both politicians endorsing the bill while a continuous disclaimer containing the bill's text runs at the bottom of the screen. The bill passed on an 8-1 vote.

Thomas' video is not the first time deep fakes have entered the political stage during the 2024 election cycle. An AI-generated robocall imitating Joe Biden's voice and urging New Hampshire voters to abstain began circulating almost as soon as the electoral season started. Experts agree that AI-generated audio is particularly risky because it strips away most of the contextual clues that would reveal a piece of media as a deep fake. Still, US federal regulation of AI-generated media, whether in politics or any other sensitive domain, is practically non-existent. State lawmakers like Thomas are stepping up to fill the void, proposing and passing legislation that makes it illegal to use unlabeled AI-generated content in political campaigns within their states. Even if policymakers have been slow to catch up with the risks of generative AI, there is hope that more legislation will appear as the line between free speech and fraud continues to blur.

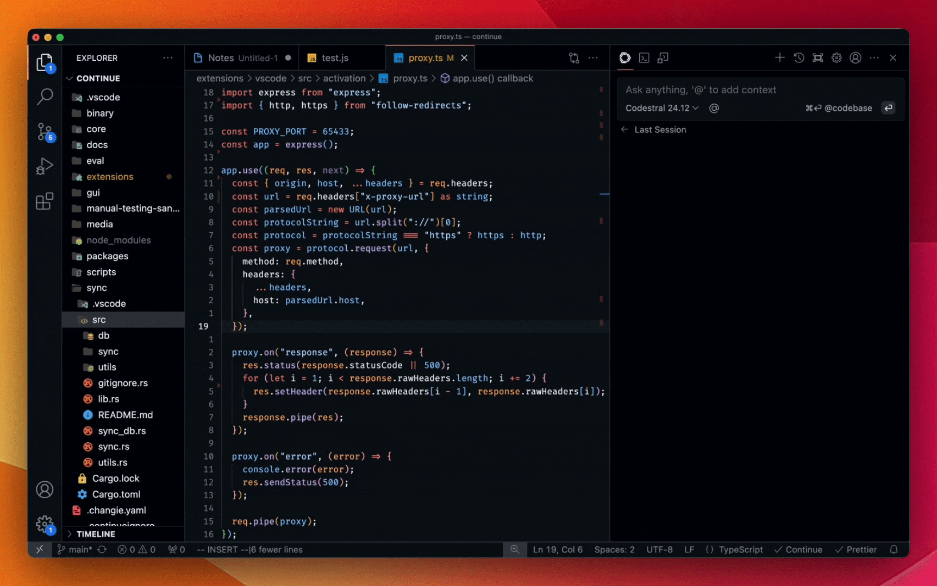

Code scanning autofix is now in public beta for GitHub Advanced Security customers

The code scanning autofix feature is powered by GitHub Copilot and CodeQL, GitHub's code analysis engine. It covers more than 90% of alert types in JavaScript, TypeScript, Java, and Python, and its code suggestions can resolve over two-thirds of the detected vulnerabilities with little or no editing. As a result, code scanning autofix can significantly reduce the time and effort developers spend keeping up with their organization's "application security debt": the ever-growing number of unaddressed vulnerabilities in production repositories. When CodeQL detects a vulnerability, autofix uses Copilot to generate a suggested fix along with a natural-language explanation and a preview of the code change, which the developer can accept, edit, or dismiss. Some suggestions span multiple files or add dependencies to the project. GitHub plans to support more languages over time, with C# and Go coming soon. Interested users can find more details in the announcement, which links to GitHub's extensive documentation.
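
To make "alert plus suggested fix" concrete, here is a hypothetical example of the pattern CodeQL's SQL-injection query (`py/sql-injection`) targets when user input reaches a query string, next to the parameterized-query rewrite a fix suggestion would typically propose. This is an illustration written for this roundup, not actual autofix output; the table, function names, and payload are made up.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Vulnerable pattern: the input is interpolated straight into the SQL text,
    # so a crafted value can change the query's logic.
    return conn.execute(f"SELECT id FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn, name):
    # Fixed pattern: a placeholder lets the driver bind the value as data.
    return conn.execute("SELECT id FROM users WHERE name = ?", (name,)).fetchall()


def demo_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    conn = demo_db()
    payload = "x' OR '1'='1"
    print("unsafe:", find_user_unsafe(conn, payload))  # leaks every row
    print("safe:  ", find_user_safe(conn, payload))    # matches nothing
```

The fix is small and local, which is the kind of change that can often be accepted with little or no editing.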

Google was fined $270M by France's Autorité de la Concurrence

The Autorité de la Concurrence (ADLC) is France's independent administrative authority for enforcing competition law. Among its main tasks, it monitors how markets function and works to prevent anticompetitive practices. This is not the first time the ADLC has called out Google for abusing its dominant market position. In 2021, Google was fined for scraping and displaying headlines and snippets from French news publishers without offering them fair and appropriate compensation. As part of the dispute's settlement, Google entered into numerous agreements with French publishers and news agencies.

In its latest findings, the ADLC reports that Google may have disregarded these agreements when training its foundation models. In particular, the findings state that Google failed to notify publishers and news outlets that their content had been scraped for model training, and that it did not give them a way to opt out of training without their content also disappearing from Google's other services. In a lengthy blog post, Google defended itself by citing Article 4 of the EU Copyright Directive and the EU AI Act. However, whether the Article 4 exception applies to using news content to train AI models remains undetermined, and the AI Act has yet to be formally adopted.

Saudi Arabia is planning to create a $40 billion AI investment fund

Representatives of Saudi Arabia's Public Investment Fund (PIF), including PIF governor Yasir Al-Rumayyan, have been looking for potential financial partners in the US. According to a New York Times report, PIF representatives recently met with Andreessen Horowitz executives to discuss a possible partnership, including plans for the Silicon Valley firm to open an office in Riyadh, the country's capital. No deal has been closed yet, and people familiar with the matter say Saudi officials are also considering other financiers as potential partners. If the fund comes together as expected in the second half of 2024, Saudi Arabia would become the world's largest investor in artificial intelligence. The country reportedly hopes to attract a range of AI businesses, from large-scale data centers to chip makers, by pointing to its abundant energy resources and funding capacity. For scale, the $40 billion AI fund would come out of Saudi Arabia's sovereign wealth fund, which holds over $900 billion in assets.

Databricks has acquired Lilac, the open-source dataset observability and quantification tool

The two companies recently announced that Lilac would join Databricks to provide an end-to-end platform for building high-quality generative AI applications. Incorporating Lilac's technology into Databricks Mosaic AI lets users evaluate model outputs and prepare datasets for RAG, fine-tuning, and pre-training in a single platform. Founded by former Google employees Daniel Smilkov and Nikhil Thorat, Lilac lets users, among other things, browse datasets of unstructured data, enrich unstructured fields with structured metadata, and create custom AI models that find and score text matching a given concept. Lilac also focused on replacing the manual, labor-intensive, and unscalable methods that are standard in bias and toxicity evaluation and in data preparation workflows. Given that focus, the acquisition looks like a promising fit for both teams.

The Open Source Robotics Foundation (OSRF) announced the creation of the Open Source Robotics Alliance (OSRA)

OSRA is an initiative aimed at strengthening the governance of OSRF's open-source robotics software projects and ensuring the healthy development of the Robot Operating System (ROS) Suite community. Following the example of other successful open-source foundations, OSRA will adopt a mixed membership and meritocratic model. The OSRF Board will be fully responsible for OSRA, which oversees its various Committees and Groups through its Technical Governance Committee (TGC). OSRA's early supporters include Platinum members NVIDIA, Intrinsic, and Qualcomm Technologies. Nine other organizations joined at launch: Gold members Apex.ai and Zettascale; Silver members Clearpath Robotics, Ekumen, eProsima, and PickNik; Associate member Silicon Valley Robotics; and Initial Supporting Organizations Canonical and Open Navigation. Details on the currently open membership applications can be found at https://www.osralliance.org.

Cleric aims to be the world's first AI site reliability engineer

Cleric is built on the premise that production environments need a closed loop: rather than extending existing tools with AI that humans still have to operate, production environments need AI operators that relieve humans of repetitive, time-consuming diagnostic work. Cleric triages alerts from production environments and traces them to their sources. Although it was trained on Kubernetes-based scenarios, it is designed to adapt to a wide range of enterprise contexts. Its reasoning capabilities let it work through issues quickly by generating hypotheses from context, running queries to collect evidence, and turning the gathered information into a full diagnosis. Because Cleric prioritizes safety, its current version has only read access to private data and suggests fixes without applying them. Early access is available on request to software engineering teams with on-call responsibilities. Cleric's development was also recently boosted by a $4.3 million seed round led by Zetta Venture Partners, with participation from angel investors from Google Cloud, Sysdig, Tecton, and Neo4J.

Foundry announced it secured $80 million in Seed and Series A to make the world's computing power universally accessible

Foundry sees AI infrastructure as a root node problem comparable to the invention of the lightbulb: for the lightbulb to have its world-changing impact, additional layers of abstraction had to be built until people could simply flip a switch to get light. Just as bringing electricity into homes required building brand-new infrastructure from the ground up, Foundry's mission begins with building a new public cloud platform optimized for AI and ML workloads. Today's public cloud infrastructure is oriented toward web applications and was built around CPUs. Running AI and ML workloads on web-oriented infrastructure compounds the well-known GPU shortage: whatever GPUs are available end up underutilized, which drives up the final price of compute. Foundry's platform was developed by "a small but ferocious cross-disciplinary team" that reimagined ML/AI infrastructure to deliver GPU instances with top price-performance. Its approach prioritizes the availability of top-tier accelerators, elasticity to scale, optimal price/performance ratios, simplicity through abstraction, and security and resiliency.
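
The claim that underutilized GPUs push up the price of compute comes down to simple arithmetic: if you pay for every GPU-hour but only a fraction of it does useful work, the effective cost of each useful hour is the list price divided by utilization. The sketch below works through that with a hypothetical price and utilization rates; none of these numbers come from Foundry.

```python
def effective_cost(hourly_price, utilization):
    """Cost per *useful* GPU-hour when only `utilization` (0, 1] of paid time does work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_price / utilization


if __name__ == "__main__":
    price = 2.00  # hypothetical list price in USD per GPU-hour
    for u in (0.4, 0.6, 0.8, 1.0):
        print(f"utilization {u:.0%}: ${effective_cost(price, u):.2f} per useful GPU-hour")
```

Doubling utilization from 40% to 80% halves the effective price, which illustrates why better utilization can translate into better price-performance without any change in list price.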

Imageryst has raised 700,000 EUR to ensure widespread access to satellite data

Imageryst's funding round was backed by Hearst Lab, Backfund, Core Angels Barcelona, Urriellu Ventures, and angel investors from the SeedRocket ecosystem, along with a 200,000 EUR loan from Enisa under its Digital Entrepreneurs line. Imageryst is building a SaaS platform that combines AI and remote sensing to quickly and accurately extract geolocated data from satellite imagery, which can then inform renewable energy decisions. The funding will enable the startup to consolidate its position in its domestic market, expand internationally, and explore new use cases in the geospatial data field.

Superlinked raised a $9.5M seed round to help the industry run vector-powered systems

The $9.5 million seed round was led by Index Ventures and Theory Ventures, with participation from 20Sales, Firestreak, tech executives, and existing investors. Superlinked started out as a tool for building real-time personalized software before pivoting to using "open-source and proprietary models to turn complex product listings, content, user behavior, and other types of data into vector embeddings." Superlinked also built a mechanism that lets developers query this data effectively, and added backfills and batch query evaluation to its product to address common challenges data engineers face when taking their systems to production. Superlinked calls these three components of its product "the vector computer." Alongside its funding announcement, Superlinked said it would open-source the interactive components of its product to help the industry run vector-powered systems that combine structured and unstructured data.
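
The idea of turning mixed data into vectors and querying them can be sketched in a few lines: embed the unstructured part (here, toy text vectors standing in for a model's output), encode a structured attribute such as price as its own small vector, concatenate the two with weights, and rank items by cosine similarity. This is a hypothetical illustration of the general technique, not Superlinked's API; the item data, weights, and the quarter-circle price encoding are all assumptions.

```python
import math


def normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def encode_number(value, lo, hi):
    """Map a number in [lo, hi] onto a quarter circle so nearby values stay similar."""
    angle = (value - lo) / (hi - lo) * math.pi / 2
    return [math.cos(angle), math.sin(angle)]


def combine(text_vec, price, price_range=(0.0, 200.0), text_w=1.0, price_w=0.5):
    """Concatenate a weighted text embedding with a weighted price encoding."""
    text_part = [text_w * x for x in normalize(text_vec)]
    price_part = [price_w * x for x in encode_number(price, *price_range)]
    return text_part + price_part


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec, items, k=2):
    """Return the names of the k items most similar to the query."""
    ranked = sorted(items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


# Toy 3-dimensional "text embeddings" standing in for a real embedding model.
ITEMS = [
    ("trail shoes", combine([1.0, 0.0, 0.0], price=120)),
    ("road shoes", combine([0.9, 0.1, 0.0], price=60)),
    ("rain jacket", combine([0.0, 0.0, 1.0], price=150)),
]

if __name__ == "__main__":
    query = combine([1.0, 0.0, 0.0], price=60)  # "shoes, around $60"
    print(top_k(query, ITEMS))
```

Raising `price_w` makes price matter more in the ranking; weighting the parts of a concatenated vector is one simple way to let a single similarity query combine structured and unstructured signals.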

CodeRabbit has acquired FluxNinja

FluxNinja is the startup behind Aperture, one of the products that will help CodeRabbit provide a platform for building scalable generative AI applications. Aperture delivers capabilities such as rate limiting, caching, and request prioritization, which are essential for CodeRabbit's reliable and cost-effective AI workflows. Although Aperture was originally built to solve a different problem, CodeRabbit found that FluxNinja's underlying technology and its team's expertise were the ideal foundation for an AI platform that tackles challenges such as prompt rendering, validation and quality checks, and observability. The acquisition puts CodeRabbit in a position to take on the challenge of building a platform that supports real-world AI workflows head-on.
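
Rate limiting is the most self-contained of the capabilities listed for Aperture. A common way to implement it is a token bucket: tokens refill at a fixed rate up to a capacity, and each request either spends tokens or is rejected. The sketch below is a generic token bucket with an injectable clock for testing; it is not Aperture's API, Aperture's actual implementation may differ, and the class and parameter names are hypothetical.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        """Spend `cost` tokens if available; return False so the caller can reject or queue."""
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=2.0, capacity=2)  # e.g. 2 LLM calls per second, bursts of 2
    print([bucket.allow() for _ in range(3)])
```

Charging a different `cost` per request, or keeping a separate bucket per priority tier, is one simple way to extend this toward request prioritization for expensive AI calls.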

Quantistry secured 3M EUR to develop its quantum-and-AI-powered platform for chemical and material R&D

Ananda Impact Ventures led Quantistry's funding round, with participation from co-investors Chemovator (BASF's business incubator), IBB Ventures, and a family office. The funding will enable Quantistry to keep developing its cloud-native simulation platform, which aims to transform chemical R&D and the discovery of new materials. The platform combines quantum technologies, physics-based simulations, and machine learning to tackle current challenges in industrial R&D, such as high costs, fragmented expertise, and slow innovation. By using quantum-based simulations, multiscale modeling, and AI-driven insights, it aims to accelerate the optimization, discovery, and design of novel materials through sustainable strategies. Quantistry expects its technology to significantly reduce the environmental footprint of some industries by enabling the rapid development of sustainable, efficient materials for applications such as next-gen batteries, polymers, alloys, and carbon capture.
