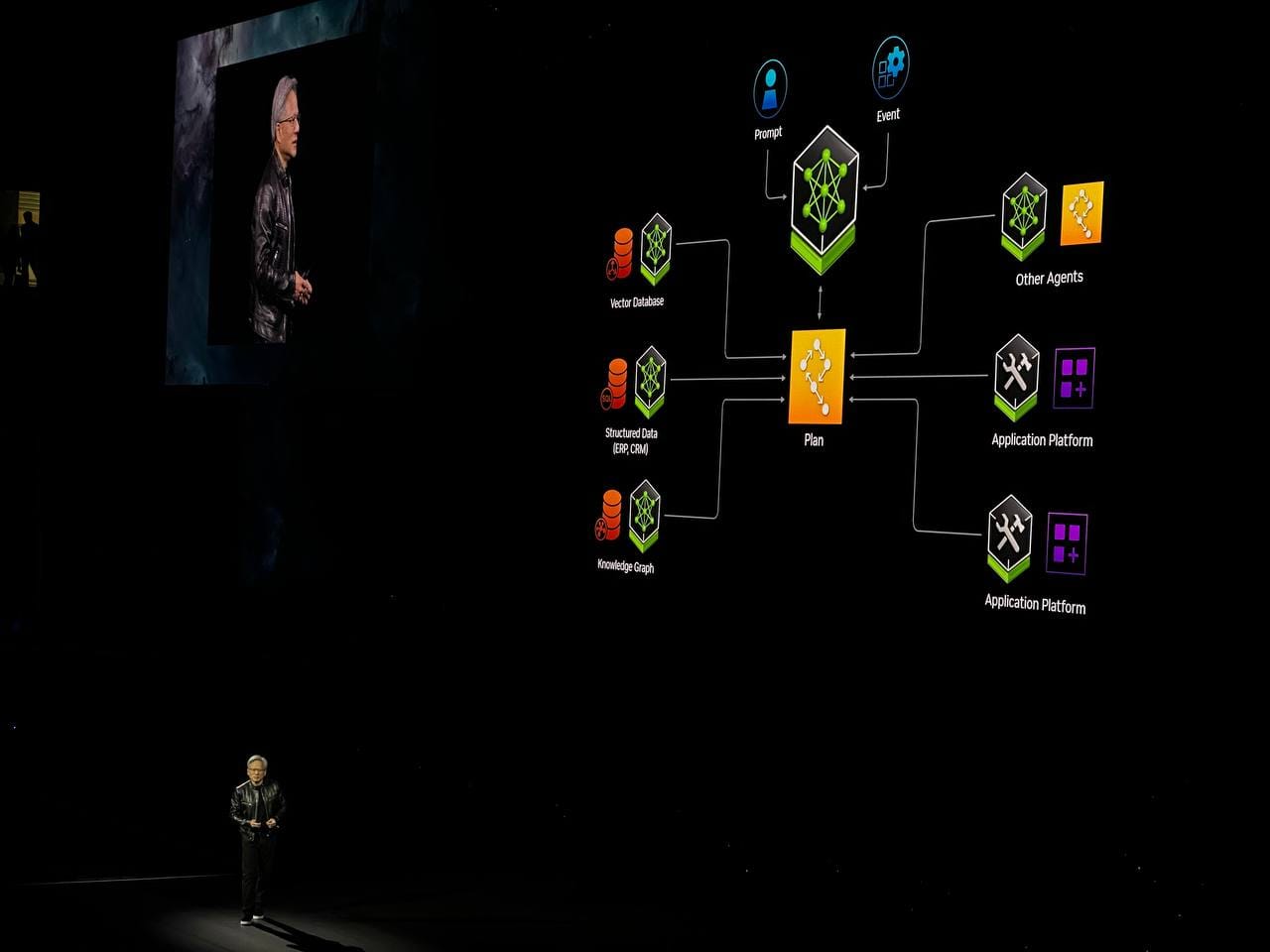

With NVIDIA's AI microservices, organizations can create and deploy generative AI copilots privately and securely

The newly launched NVIDIA microservices enable organizations to build and deploy AI applications locally while preserving control of their data. NIM supplies high-performing containers for proprietary and open model deployment while CUDA-X accelerates the production process.

Today, NVIDIA announced the launch of dozens of enterprise-grade AI microservices enabling organizations to privately and securely build and deploy custom applications on their platforms. Built on top of the NVIDIA CUDA® platform, the new microservices include the NVIDIA NIM™ microservices for optimized inference on the most popular models in the NVIDIA AI ecosystem. Additionally, NVIDIA's accelerated software development kits, libraries, and tools are now available as NVIDIA CUDA-X™ microservices for retrieval-augmented generation (RAG), guardrails, data processing, and more. NVIDIA also announced several healthcare-specific NIM and CUDA-X microservices.

The newest layer in the NVIDIA full-stack computing platform connects the different elements of NVIDIA's AI ecosystem (model developers, platform providers, and enterprises) with a standardized way to run applications built on AI models optimized for NVIDIA CUDA-enabled GPUs across clouds, data centers, and workstations. The microservices are currently in limited availability and will be accessed first by industry-leading enterprises such as Adobe, Cadence, CrowdStrike, Getty Images, SAP, ServiceNow, and Shutterstock.

The NVIDIA NIM microservices provide containers powered by NVIDIA's inference software. They expose industry-standard APIs for several domains, such as language, speech, and drug discovery, so developers can quickly build and locally host scalable applications that securely use their proprietary data. The containers are built for fast, high-performing deployment of popular proprietary and open-source models. NIM also includes microservices from select partners and integrates with popular frameworks such as Deepset, LangChain, and LlamaIndex.
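To make the idea of a locally hosted model behind a standard API more concrete, here is a minimal sketch of what a client call might look like. It assumes the container exposes an OpenAI-style chat-completions route over HTTP. The base URL, port, route, and model name below are illustrative assumptions, not details taken from the announcement.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8000", model="example-model"):
    """Build (but do not send) a chat-completion request.

    base_url, the /v1/chat/completions route, and the model name are
    hypothetical placeholders; check the deployed service's documentation.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request to the locally hosted service and decode the JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Because the request targets a host the organization controls, the prompt and any proprietary data in it stay on local infrastructure. Calling `send(build_chat_request("Summarize our Q3 report"))` would only succeed with a compatible service actually running at that address.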

Similarly, the CUDA-X microservices provide end-to-end building blocks to accelerate the AI application production process. For instance, NVIDIA Riva provides customizable speech and translation AI, NVIDIA cuOpt™ supplies routing optimization, and NVIDIA Earth-2 delivers high-resolution climate and weather simulations. Moreover, the NeMo Retriever microservices help developers connect their organization's proprietary data to AI applications, so that chatbots, copilots, and AI-powered productivity tools generate accurate, helpful, and context-relevant responses. Upcoming NeMo microservices will cover further aspects of custom model development, including NVIDIA NeMo Curator for building clean datasets, NVIDIA NeMo Customizer for fine-tuning LLMs with domain-specific data, and NVIDIA NeMo Evaluator for analyzing AI model performance.
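The retrieval step behind such context-relevant responses can be illustrated with a toy example. The sketch below is not the NeMo Retriever API; it swaps in a simple bag-of-words cosine similarity, where a production system would typically use learned embeddings and a vector index. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Prepend the retrieved passages to the question before calling a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The model then receives the organization's own text alongside the question, which is what lets a general-purpose model answer from proprietary data without being retrained on it.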

Data, infrastructure, and compute providers are already working with the NVIDIA CUDA-X microservices. Moreover, enterprises can deploy NVIDIA microservices on the cloud infrastructure of their choice, including Amazon Web Services (AWS), Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure. The microservices are also supported on several certified systems, including servers and workstations from NVIDIA's partners. They will also be supported by infrastructure software platforms and by AI and MLOps partners, including Abridge, Anyscale, Dataiku, Scale.ai, OctoAI, and Weights & Biases.

Developers interested in the microservices can try them free of charge at ai.nvidia.com. Further details on the NIM and CUDA-X microservices can be found in today's keynote.