Andreessen Horowitz published a report on the shift in attitudes toward AI adoption and spending

Andreessen Horowitz Growth team partners Sarah Wang and Shangda Xu interviewed several enterprise leaders and surveyed 70 more to learn about enterprise attitudes toward AI adoption and spending. They published their findings in a compelling report, which we distill in this piece.

The report was inspired by the fact that, while generative AI became an instant success among consumers in 2023, enterprises were not as keen to jump on the generative AI bandwagon. Enterprise customers seemed stuck with a limited number of use cases, and product offerings became little more than "GPT-wrappers" that slapped a generic user interface on top of a fine-tuned proprietary model.

A little over six months after ChatGPT took the consumer world by storm, Wang and Xu set out to find out whether and how attitudes toward AI adoption and spending had changed among Fortune 500 and other top enterprise leaders. Somewhat unexpectedly, they found that even though some skepticism about the potential of generative AI in the workplace remains, team leaders and their organizations are increasing the budgets they allocate to AI, relying more on open-source LLMs, and moving a wider range of use cases from experimentation to production.

Consequently, the authors propose that the current business climate will favor startups that take enterprises' initiatives and potential roadblocks into consideration while transitioning from the services-heavy "wrapper" model to building scalable products. Among other things, such products should reflect enterprises' concern with expanding the range of use cases, avoiding vendor lock-in, and securing private data and intellectual property. The authors develop their findings through a 16-point outline that we sum up as follows:

- As mentioned, enterprises are increasing their AI spend, sometimes despite lingering reservations. Most of the surveyed leaders reported positive results from their early experiments, which have motivated a planned 2–5x increase in AI spending. This would take the $7 million average from 2023 to approximately $18 million in 2024.

- Another notable shift concerns the source of AI budgets. In 2024, enterprises' AI investments are moving from 'innovation' budgets and other one-time pools to recurring line items (from both IT and non-IT allocations). In some exceptional cases, the budget even comes from headcount savings. One surveyed company stated that saving $6 per call handled by its AI-powered customer service, a cost reduction of around 90%, played an essential role in its decision to increase AI investment eightfold.

- Somewhat unexpectedly, there is still no definitive measure of ROI. Increased productivity continues to be the most popular measure, followed by Net Promoter Score (NPS) and customer satisfaction. However, teams are eager to find more tangible measures, including revenue generation, savings, and accuracy gains. While leaders figure out the best answer to the ROI question, some temporarily rely on the word of their employees.

- Most of the generative AI talent implementing and maintaining enterprises' solutions is not in-house but part of a package deal offered by the AI provider. Infrastructure deployment and maintenance require hard-to-find, specialized talent. This is reflected in the fact that implementation was one of the key AI spending areas in 2023 and often the one with the largest budget allocation. The report sees an opportunity for startups whose products include specialized tooling that simplifies the workloads of in-house talent.

- OpenAI quickly established itself as one of the strongest players in the field, as reflected in the fact that most enterprises were experimenting with or adopting OpenAI as their preferred provider. With the advent of a very healthy open-source ecosystem, leaders now cite customizability, avoiding vendor lock-in, and staying on top of the latest advancements as reasons to diversify, rather than replace, their chosen closed-source LLM providers.

- In line with the previous point, almost half of the survey respondents admitted to preferring, or strongly preferring, open-source models. Moreover, nearly 60% of the respondents said they were interested in open-source alternatives whenever their performance closely matched that of proprietary models. Some respondents are reportedly working toward a 50/50 split between open and closed-source models.

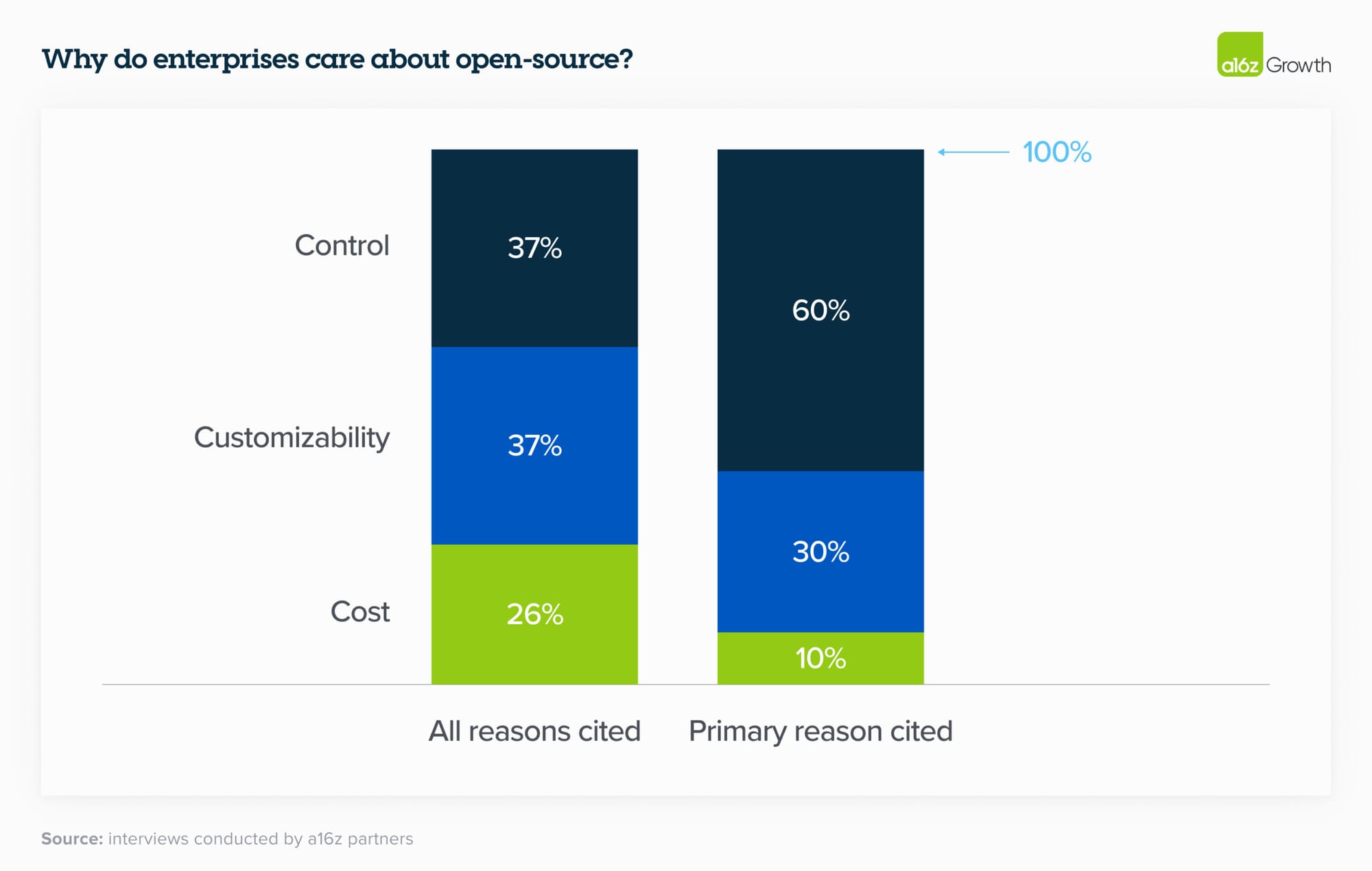

- Notably, cost is not the most relevant factor when deciding to embrace open-source solutions. Concerns about cost rank below control-related issues (involving data safety/privacy and observability) and customizability. Regardless, the belief that the value created by AI will outweigh its cost still prevails.

- As expected, the desire for control is rooted in the discomfort leaders still feel about sharing sensitive data with closed-source models. While choosing open-source models is one way to address the issue, some leaders also favor models with virtual private cloud (VPC) integrations.

- Fine-tuning and retrieval-augmented generation (RAG) are vastly favored over building models from scratch. Some leaders have even chosen to rely exclusively on RAG rather than building custom models.

- Enterprises still prefer sharing sensitive information with their chosen cloud service provider (CSP) rather than with proprietary model providers. This has led to a high correlation between CSP and the preferred model, indicating that companies still buy at least some AI services through their existing CSP.

- Early-to-market features are still a deciding factor. Several surveyed leaders cited Anthropic's 200K context window as a reason for adoption, and others similarly cited Cohere's simplified fine-tuning offerings.

- However, there is widespread agreement that model performance is converging. Regardless of how a model performs according to external benchmarks, enterprise leaders evaluate model performance by testing against internal benchmarks. Many leaders find that adequately fine-tuned open-source models perform similarly to closed-source ones. This feeds into the prioritization of customizability as a reason to choose open-source models in the first place.

- The most common strategy to address vendor lock-in has been to design applications so models can be switched with little more than an API change. Another reliable strategy involves keeping a "model garden" that enables the deployment of models to apps as needed. This kind of optimization reduces the risk of vendor lock-in and enables teams to stay on top of the latest advancements.

- Partly motivated by the "GPT wrapper" issue, enterprises strongly prefer to build applications in-house. However, this seems to be more a result of the current AI applications market than of a set belief in the superiority of building in-house. Many leaders who currently build in-house, whether to develop highly customized solutions for well-known use cases or to experiment with more novel ones, have expressed optimism about the future and a willingness to shift from in-house apps to using "the best out there."

- Leaders remain more confident about experimenting with novel internal use cases than with external ones. This difference stems from concerns about hallucinations, safety, and public relations issues. Autonomous internal use cases still outweigh sensitive internal use cases requiring human oversight and customer-facing external use cases. This, too, is an area of opportunity for startups since perfecting the latter two cases could lead to widespread product adoption.

- The report closes with a very optimistic, albeit not unreasonable, projection of $5B in spend on model APIs and fine-tuning by the end of 2024, more than twice the $1.5–2B run-rate revenue of 2023. Most importantly, the authors predict that deals will close faster and grow in size. Although the report focuses on the foundation model layer, the authors expect this to be true for the entire generative AI stack. As a result, startups concentrating on tools that help with fine-tuning, model serving, and application building, as well as on purpose-built AI-native applications, will increasingly find themselves at an inflection point, bringing previously unheard-of opportunities.
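
To make the vendor lock-in point from the list more concrete, here is a minimal Python sketch of an application designed so that models can be switched with little more than a configuration change, backed by a small "model garden". It is an illustration only and is not taken from the report: the names `TextModel`, `ModelGarden`, `StubClosedModel`, `StubOpenModel`, and `summarize` are hypothetical, and the stub classes stand in for real provider clients, which would call their own APIs.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface the application codes against."""

    def complete(self, prompt: str) -> str: ...


class StubClosedModel:
    """Stand-in for a closed-source provider (assumption: no real API is called)."""

    name = "closed-stub"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class StubOpenModel:
    """Stand-in for a self-hosted open-source model (assumption, as above)."""

    name = "open-stub"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelGarden:
    """Hypothetical registry mapping a key to a factory that builds a model."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], TextModel]] = {}

    def register(self, key: str, factory: Callable[[], TextModel]) -> None:
        self._factories[key] = factory

    def get(self, key: str) -> TextModel:
        if key not in self._factories:
            available = ", ".join(sorted(self._factories)) or "none"
            raise KeyError(f"unknown model '{key}' (available: {available})")
        return self._factories[key]()


def summarize(model: TextModel, text: str) -> str:
    """Application logic depends only on the TextModel interface."""
    return model.complete(f"Summarize: {text}")


garden = ModelGarden()
garden.register("closed", StubClosedModel)
garden.register("open", StubOpenModel)

# Switching providers is a one-key change; summarize() itself is untouched.
print(summarize(garden.get("open"), "Q3 report"))
```

Because `summarize` only sees the `TextModel` interface, swapping `"open"` for `"closed"` changes the backing model without touching application code, which is the property the surveyed leaders are described as designing for.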

The full report is a compelling and unmissable read, available here.