Anthropic's Claude 2.1 is now available via API and chat experience

Claude 2.1 is now available over the API in Anthropic's Console, and it also powers the claude.ai chat experience. Alongside the model's new features, Anthropic announced a pricing adjustment intended to improve cost efficiency across its models.

Claude 2.1, Anthropic's latest model, is now available over the API in Anthropic's Console and also powers the chat experience at claude.ai. The new model brings several upgrades: an extended context window, a lower hallucination rate than Claude 2.0, system prompts, and tool use (still in beta). Alongside the Claude 2.1 release, Anthropic announced a pricing adjustment that will increase cost efficiency for its customers across all models.

As for the extended context window, Claude 2.1 supports an industry-leading 200K tokens, which translates to about 150,000 words or 500 pages of text. Whether the input is a set of financial reports, an entire codebase, or a substantial literary work such as The Iliad or The Odyssey, Claude 2.1 can summarize it, forecast trends, compare and contrast documents, and more. Anthropic cautions that tasks that would take a person hours to perform will likely take Claude several minutes to complete, and the company expects latency to decrease as the technology progresses. Note that use of the extended context window on claude.ai is currently restricted to Claude Pro users.
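The figures above imply simple conversion ratios, which the following sketch makes explicit. The ratios (0.75 words per token and 300 words per page) are assumptions derived only from the numbers quoted here, not values published by Anthropic, and real token counts vary with language and content.

```python
import math

CONTEXT_TOKENS = 200_000   # context window size quoted in the article
WORDS_PER_TOKEN = 0.75     # assumption: 150,000 words / 200,000 tokens
WORDS_PER_PAGE = 300       # assumption: 150,000 words / 500 pages


def estimate_capacity(tokens: int) -> tuple:
    """Return (approximate words, approximate pages) for a token budget."""
    words = tokens * WORDS_PER_TOKEN
    pages = words / WORDS_PER_PAGE
    return round(words), round(pages)


def fits_in_context(word_count: int, context_tokens: int = CONTEXT_TOKENS) -> bool:
    """Rough check of whether a document of word_count words fits the window."""
    estimated_tokens = math.ceil(word_count / WORDS_PER_TOKEN)
    return estimated_tokens <= context_tokens


words, pages = estimate_capacity(CONTEXT_TOKENS)
print(words, pages)
```

A check like `fits_in_context` is only a first-pass estimate; an actual tokenizer count would be needed before relying on it.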

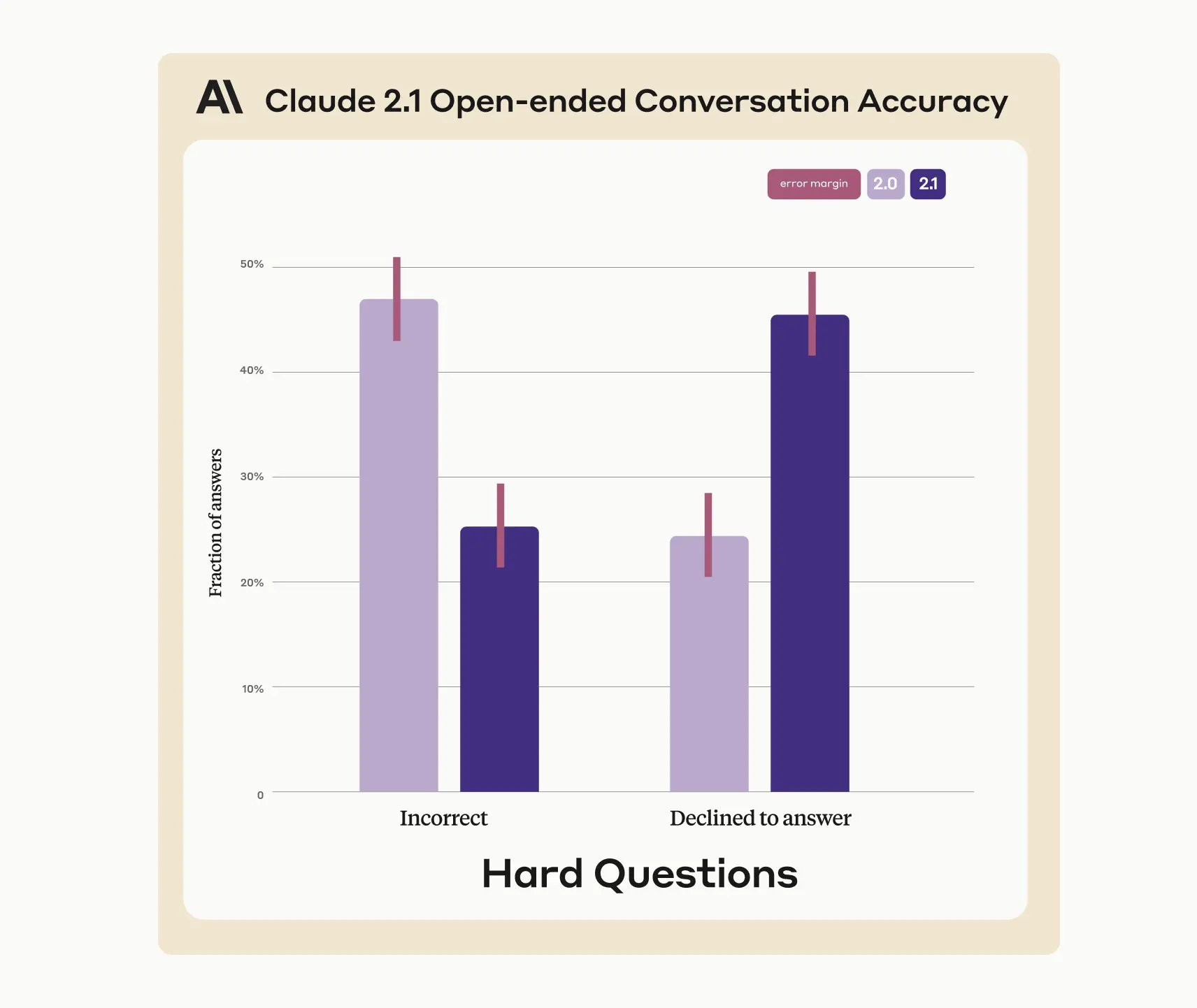

Claude 2.1 also boasts a 2x reduction in false statements compared to its predecessor, Claude 2.0. The models were tested against a list of complex factual questions using a rubric that distinguishes outright falsehoods from admissions of ignorance. Claude 2.1 was more likely to refrain from answering than to give false information. The gains in honesty also carry over to tasks such as summarization and comprehension: Claude 2.1 showed a 30% reduction in incorrect answers based on a source document and a 3-4x lower rate of wrong conclusions about whether a given document supports a particular claim.

Tool use for Claude is still in beta, but the feature will allow Claude to integrate with users' workflows, products, and APIs. Users will be able to define which tools are available to Claude, and the model will choose the tool required for a task and carry out the needed action on the user's behalf. Example use cases include using a calculator to perform complex numerical operations, translating natural language requests into API calls, and answering questions by searching a database or using a web search API.
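The general pattern described above, in which the user defines the tools and the model picks one to run, can be sketched in a few lines. This is not Anthropic's tool use API: the registry layout, the `run_tool_call` helper, the `{"tool": ..., "input": ...}` call format, and the sample records are all hypothetical and serve only to illustrate the dispatch step.

```python
import ast
import operator
from typing import Any, Dict, List

# Arithmetic operators the hypothetical calculator tool accepts.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Evaluate a basic arithmetic expression without calling eval()."""
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError(f"unsupported expression: {expression!r}")

    return evaluate(ast.parse(expression, mode="eval").body)


# Placeholder records standing in for a real database (illustrative only).
_RECORDS = ["invoice 1001: paid", "invoice 1002: overdue"]


def search_database(query: str) -> List[str]:
    """Return the placeholder records that contain the query text."""
    return [record for record in _RECORDS if query.lower() in record.lower()]


# The user-defined registry: tool name -> description and callable.
TOOLS: Dict[str, Dict[str, Any]] = {
    "calculator": {"description": "Evaluate arithmetic", "run": calculator},
    "search_database": {"description": "Search records", "run": search_database},
}


def run_tool_call(call: Dict[str, str]) -> Dict[str, Any]:
    """Execute the tool a model selected (hypothetical call format)."""
    name = call["tool"]
    if name not in TOOLS:
        return {"tool": name, "error": "unknown tool"}
    return {"tool": name, "output": TOOLS[name]["run"](call["input"])}


print(run_tool_call({"tool": "calculator", "input": "(1200 * 3) / 4"}))
```

In a real integration, the model's response would supply the tool name and input, and the tool's output would be passed back to the model so it can finish its answer.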

On the developer side, Anthropic is working on simplifying its Console product while making the prompt testing process smoother. To this end, Console now includes Workbench, a product that lets developers iterate on prompts and access settings to optimize Claude's behavior. Workbench supports the creation of multiple prompts that can be assigned to different projects, with revisions to prompts saved automatically. Developers can also create code snippets based on a prompt and use them in Anthropic's SDKs.

Finally, system prompts provide relevant context that improves Claude's performance, especially in role-playing conversations. This context may include instructions, role and tone prompting, style and creative guidance, knowledge or data from external sources, rules, guardrails, and verification standards (such as thinking out loud). System prompts help the model stay in character and follow instructions more reliably. However, Anthropic warns that system prompts do not necessarily make a prompt jailbreak-proof or leak-proof.
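As a rough illustration of how the kinds of context listed above might be combined, the sketch below assembles a role, instructions, and rules into a single system prompt and pairs it with a user message. The helper names and the `system` / `messages` field names in the request dictionary are hypothetical, chosen for readability, and are not taken from Anthropic's documentation.

```python
from typing import Any, Dict, List


def build_system_prompt(role: str, instructions: List[str], rules: List[str]) -> str:
    """Join a role statement, instructions, and rules into one prompt string."""
    sections = [role.strip()]
    if instructions:
        sections.append("Instructions:\n" + "\n".join(f"- {item}" for item in instructions))
    if rules:
        sections.append("Rules:\n" + "\n".join(f"- {item}" for item in rules))
    return "\n\n".join(sections)


def build_request(system_prompt: str, user_message: str) -> Dict[str, Any]:
    """Package the system prompt and first user turn (hypothetical layout)."""
    return {
        "system": system_prompt,
        "messages": [{"role": "user", "content": user_message}],
    }


prompt = build_system_prompt(
    role="You are a career coach.",
    instructions=["Keep answers brief."],
    rules=["Think out loud before answering."],
)
request = build_request(prompt, "How should I prepare for an interview?")
print(request["system"])
```

Keeping the role, instructions, and rules as separate inputs makes it easier to revise one part of the prompt without touching the others.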

With this release, it is clear that Anthropic places a lot of value on the safety and performance of its products. Claude 2.1 is a prime example of how the company stays true to its mission "to build the safest and most technically sophisticated AI systems in the industry."