Data Phoenix Digest - ISSUE 18.2023

How to Instruction Tune a Base Language Model; Transforming MLOps at DoorDash with ML Workbench; RAG with LangChain, Amazon SageMaker JumpStart, and MongoDB Atlas semantic search; LMQL — SQL for Language Models; Tracking Anything in High Quality; PMC-LLaMA, Text2Performer, and more.

Welcome to this week's edition of Data Phoenix Digest! This newsletter keeps you up-to-date on the news in our community and summarizes the top research papers, articles, and news to help you keep track of trends in the Data & AI world!

Be active in our community: join our Slack to discuss the latest community news, top research papers, articles, events, jobs, and more...

Click here for details.

Data Phoenix's upcoming webinars:

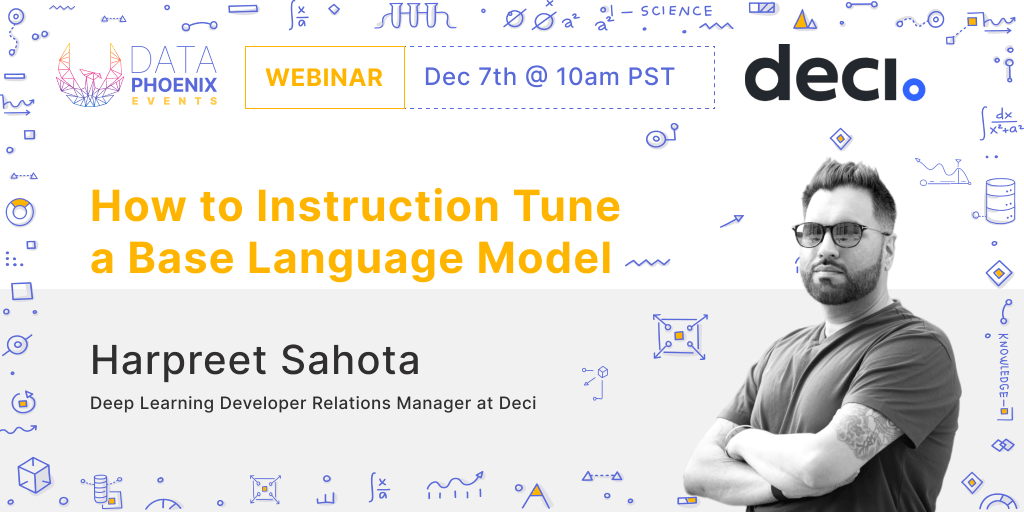

How to Instruction Tune a Base Language Model

While LLMs have showcased exceptional language understanding, tailoring them for specific tasks can pose a challenge. This webinar delves into the nuances of supervised fine-tuning, instruction tuning, and the powerful techniques that bridge the gap between model objectives and user-specific requirements.

Here's what we'll cover:

- Specialized Fine-Tuning: Adapt LLMs for niche tasks using labeled data.

- Introduction to Instruction Tuning: Enhance LLM capabilities and controllability.

- Dataset Preparation: Format datasets for effective instruction tuning.

- BitsAndBytes & Model Quantization: Optimize memory and speed with the BitsAndBytes library.

- PEFT & LoRA: Understand the benefits of Hugging Face's PEFT library and the role of LoRA in fine-tuning.

- TRL Library Overview: Delve into the functionality of the TRL (Transformer Reinforcement Learning) library.

- SFTTrainer Explained: Navigate TRL's SFTTrainer class for efficient supervised fine-tuning.
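The Dataset Preparation and PEFT & LoRA items above rest on two small ideas that fit in plain Python. The sketch below is ours, not the webinar's code and not the TRL or PEFT API. It assumes a hypothetical "### Instruction / ### Response" prompt template with `instruction`, `input`, and `output` fields, counts how many parameters a rank-`r` LoRA update trains compared with a full weight update, and estimates raw weight memory at a given bit width (ignoring quantization overhead such as scaling factors).

```python
# Minimal sketch; the template and field names are assumptions, not the webinar's format.
TEMPLATE_WITH_INPUT = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)
TEMPLATE_NO_INPUT = "### Instruction:\n{instruction}\n\n### Response:\n{output}"


def format_example(example: dict) -> str:
    """Render one labeled record as a single training string."""
    if example.get("input"):
        return TEMPLATE_WITH_INPUT.format(**example)
    return TEMPLATE_NO_INPUT.format(
        instruction=example["instruction"], output=example["output"]
    )


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> tuple:
    """Compare a full d_out x d_in weight update with a LoRA update B @ A,
    where B is d_out x rank and A is rank x d_in (only B and A are trained)."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def weight_memory_gb(n_params: int, bits: int) -> float:
    """Raw weight storage in GB at a given bit width (ignores quantization metadata)."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    record = {"instruction": "Classify the sentiment.", "input": "I loved it!", "output": "positive"}
    print(format_example(record))
    print(lora_trainable_params(4096, 4096, rank=8))  # (16777216, 65536)
    print(weight_memory_gb(7_000_000_000, 16), weight_memory_gb(7_000_000_000, 4))  # 14.0 3.5
```

With these illustrative numbers, a rank-8 LoRA update trains about 0.4% of the parameters of a full 4096 × 4096 update, and 4-bit weights need a quarter of the memory of 16-bit ones, which is why quantization and LoRA are often combined.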

Speaker:

Harpreet Sahota is a Data Scientist turned Generative AI practitioner who loves to learn, hack, and share what he figures out along the way. He has graduate degrees in math and statistics and has worked as an actuary and a biostatistician. He has built two data science teams from scratch during his career. Harpreet has been in DevRel for the last couple of years, focusing more on product and developer experience.

- Leveraging open-source LLMs for production / Andrey Cheptsov (CEO & Founder of dstack) / November 30

- GPT on a Leash: Evaluating LLM-based Apps & Mitigating Their Risks / Philip Tannor (Co-founder and CEO of Deepchecks) / December 12

- Democratizing AI Deployment / Daniel Lenton (CEO at Unify) / December 14

- A Whirlwind Tour of Machine Learning Monitoring Techniques / Ramon Perez (Developer Advocate at Seldon) / December 19

Summary of the top articles and papers

Articles

Transforming MLOps at DoorDash with Machine Learning Workbench

In this article, the authors explain how DoorDash accelerated ML development velocity by building a streamlined environment for automating ML workflows. They also describe how a user-centered approach helped them drive value while building this internal tool.

Boost inference performance for LLMs with new Amazon SageMaker containers

In this article, the authors show how you can use SageMaker Large Model Inference (LMI) Deep Learning Containers (DLCs) to optimize LLMs for your business use case and achieve price-performance benefits. Compared to the previous version, the new Amazon SageMaker LMI TensorRT-LLM DLC reduces latency by 33% on average and improves throughput by 60% on average for the Llama2-70B, Falcon-40B, and CodeLlama-34B models.

How to Visualize Deep Learning Models

In this article, the author explores a wide range of deep learning visualizations and discusses their applicability. The article shares many practical examples, points to libraries and in-depth tutorials for individual methods, and comes with a Colab Notebook demonstrating many of the techniques discussed.

Retrieval-Augmented Generation with LangChain, Amazon SageMaker JumpStart, and MongoDB Atlas semantic search

In this article, the authors show how to create a simple bot that uses MongoDB Atlas semantic search and integrates with a model from SageMaker JumpStart. The bot lets you quickly prototype user interactions with different LLMs in SageMaker JumpStart while grounding them in context retrieved from MongoDB Atlas.
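The retrieval step behind such a bot can be sketched in a few lines: embed the question, find the most similar stored passages, and paste them into the prompt sent to the LLM. The sketch below is not the MongoDB Atlas, LangChain, or SageMaker API. It assumes a toy in-memory corpus with hand-written 3-dimensional "embeddings" and brute-force cosine similarity, whereas in the article the embeddings come from a model and the search runs inside Atlas.

```python
import math

# Toy corpus with hand-written 3-dimensional "embeddings" (assumption: real
# embeddings come from a model and the vector search runs in the database).
DOCS = [
    {"text": "Orders can be returned within 30 days.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Shipping is free for orders over $50.", "embedding": [0.2, 0.9, 0.1]},
    {"text": "Our office is closed on public holidays.", "embedding": [0.0, 0.1, 0.9]},
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, docs, k=2):
    """Return the k documents most similar to the query vector."""
    return sorted(docs, key=lambda d: cosine(query_vec, d["embedding"]), reverse=True)[:k]


def build_prompt(question, context_docs):
    """Insert the retrieved passages into the prompt sent to the LLM."""
    context = "\n".join(f"- {d['text']}" for d in context_docs)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    query_vec = [1.0, 0.2, 0.0]  # pretend embedding of the question below
    top = retrieve(query_vec, DOCS)
    print(build_prompt("How long do I have to return an order?", top))
```

A managed vector index replaces the brute-force sort here once the corpus grows beyond a handful of documents.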

LMQL — SQL for Language Models

In this article, the author discusses Language Model Programming (LMP), a concept that lets you mix natural-language prompts with scripting instructions. He shows how to apply it to sentiment analysis and get decent results with local open-source models.
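The core LMP idea, a natural-language prompt plus a scripted constraint on what the model may answer, can be imitated in plain Python. This is not LMQL syntax or its API. As we understand it, LMQL enforces constraints during decoding, typically by masking tokens that would violate them. The sketch swaps that for a simpler technique: it scores each allowed label as a whole and keeps the best. A hypothetical keyword scorer (`toy_score`) stands in for a language model.

```python
from typing import Callable, Sequence

LABELS = ["positive", "negative", "neutral"]

# Hypothetical keyword lexicon standing in for a real model's preferences.
LEXICON = {
    "positive": {"love", "great", "excellent"},
    "negative": {"hate", "awful", "bad"},
}


def toy_score(prompt: str, completion: str) -> float:
    """Stand-in for a model's score of `completion` after `prompt` (NOT an LLM):
    counts lexicon hits, and gives 'neutral' a small constant baseline."""
    if completion == "neutral":
        return 0.5
    words = {w.strip(".,!?:") for w in prompt.lower().split()}
    return float(len(words & LEXICON.get(completion, set())))


def constrained_choice(prompt: str, choices: Sequence[str],
                       score: Callable[[str, str], float]) -> str:
    """Pick the highest-scoring completion among the allowed choices only,
    imitating a constraint such as 'the answer must be one of LABELS'."""
    return max(choices, key=lambda c: score(prompt, c))


def classify(review: str) -> str:
    # The prompt text and the scripted constraint (LABELS) are combined here.
    prompt = f"Review: {review}\nSentiment:"
    return constrained_choice(prompt, LABELS, toy_score)


if __name__ == "__main__":
    for r in ["I love it, great product!", "Awful. I hate it.", "It arrived on Tuesday."]:
        print(r, "->", classify(r))
```

Because the output is restricted to `LABELS`, downstream code never has to parse free-form model text, which is one of the practical benefits the article attributes to this style of prompting.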

Papers & projects

Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models

In this paper, the authors apply the Latent Diffusion Model (LDM) paradigm to high-resolution video generation. They focus on two real-world applications: simulation of in-the-wild driving data and creative content creation with text-to-video modeling. They also present first results for personalized text-to-video generation.

Delta Denoising Score

Delta Denoising Score (DDS) is a novel scoring function for text-based image editing that guides minimal modifications of an input image toward the content described in a prompt. DDS leverages the rich generative prior of text-to-image diffusion models and can be used as a loss term in an optimization problem to steer an image in the direction dictated by a text prompt.
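As we read the DDS paper, the idea can be summarized in a few lines. Score Distillation Sampling (SDS) pushes an image latent $z$ toward a prompt $y$ using the residual of the diffusion model's noise prediction, and that residual is noisy. DDS queries the same model a second time on a reference pair, the source latent $\hat z$ with a prompt $\hat y$ that describes it, using the same noise sample $\epsilon$ and timestep $t$, and subtracts the two predictions. Here $\theta$ denotes the parameters being optimized (for example, the edited image itself), $\epsilon_\phi$ the pretrained noise predictor, and $\bar\alpha_t$ the noise schedule; timestep weighting is omitted for brevity.

$$z_t = \sqrt{\bar\alpha_t}\, z + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \hat z_t = \sqrt{\bar\alpha_t}\, \hat z + \sqrt{1-\bar\alpha_t}\,\epsilon$$

$$\nabla_\theta \mathcal{L}_{\text{SDS}}(z, y) = \big(\epsilon_\phi(z_t, y, t) - \epsilon\big)\,\frac{\partial z}{\partial \theta}$$

$$\nabla_\theta \mathcal{L}_{\text{DDS}} = \Big[\big(\epsilon_\phi(z_t, y, t) - \epsilon\big) - \big(\epsilon_\phi(\hat z_t, \hat y, t) - \epsilon\big)\Big]\frac{\partial z}{\partial \theta} = \big(\epsilon_\phi(z_t, y, t) - \epsilon_\phi(\hat z_t, \hat y, t)\big)\,\frac{\partial z}{\partial \theta}$$

Intuitively, the reference branch estimates the noisy, prompt-independent part of the SDS direction, so the difference mainly keeps the change requested by $y$ and avoids unnecessary modifications to the input image.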

Text2Performer: Text-Driven Human Video Generation

Text2Performer is a text-driven human video generation technique that efficiently generates vivid human videos with articulated motions from text. Extensive experiments demonstrate that Text2Performer generates high-quality human videos (up to 512 × 256 resolution) with diverse appearances and flexible motions.

PMC-LLaMA: Towards Building Open-source Language Models for Medicine

PMC-LLaMA is a powerful open-source language model designed for medical applications. The paper describes how it was built and how it can be used in practice. According to the authors, the model achieves superior performance on medical question-answering benchmarks, even surpassing ChatGPT.

Tracking Anything in High Quality

HQTrack is a framework for high-quality tracking of anything in videos. It mainly consists of a video multi-object segmenter (VMOS) and a mask refiner (MR). HQTrack ranked 2nd in the Visual Object Tracking and Segmentation (VOTS2023) challenge.

DataPhoenix is free today. Do you enjoy our digests and webinars? Value our AI coverage? Your support as a paid subscriber helps us continue our mission of delivering top-notch AI insights. Join us as a paid subscriber in shaping the future of AI with the DataPhoenix community.