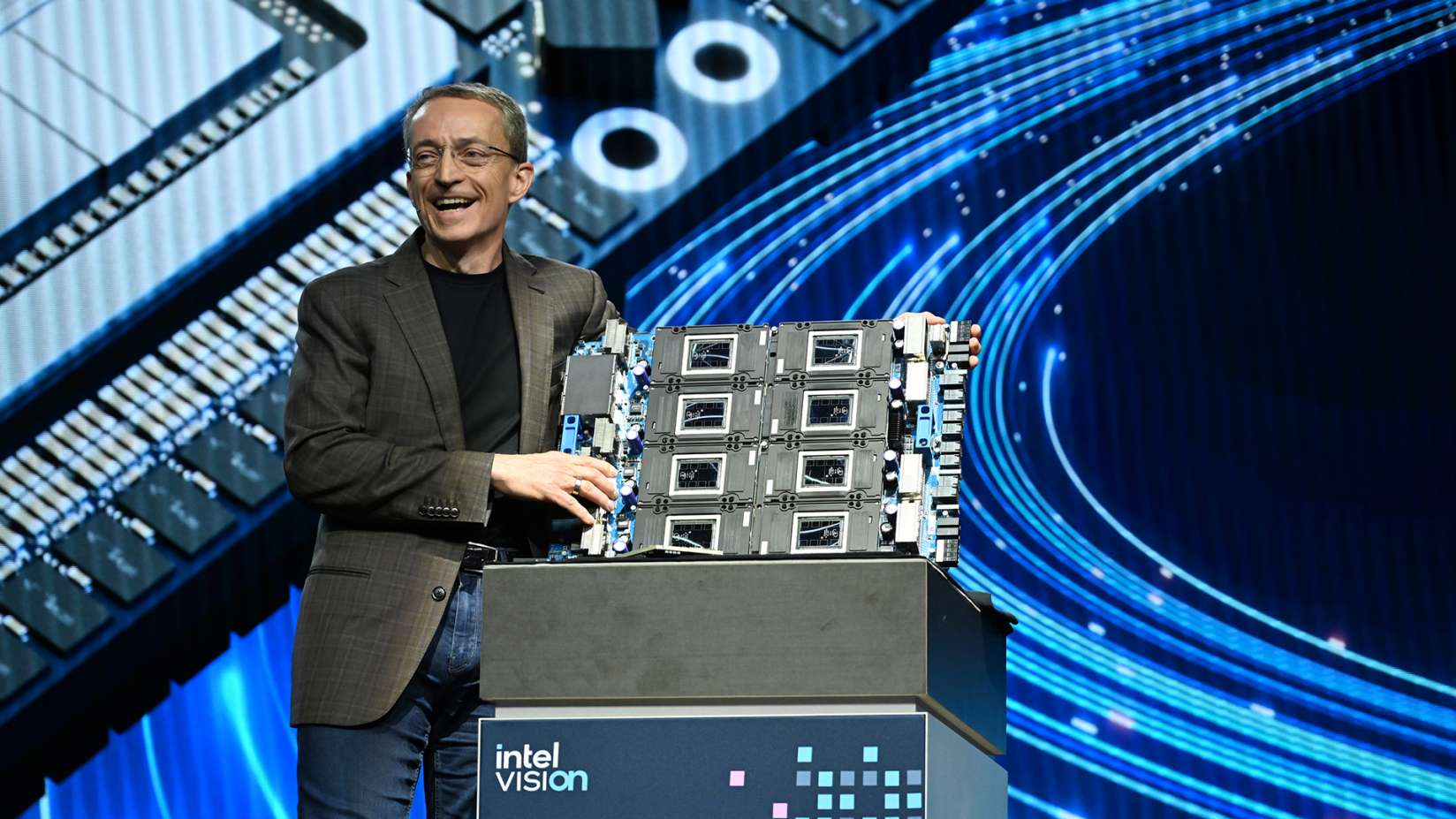

Intel is coming for NVIDIA's H100 with Gaudi 3 release

Intel unveiled the Gaudi 3 AI accelerator at its Intel Vision event in Phoenix, Arizona, this Tuesday. According to Intel's own results, Gaudi 3 trains models faster than NVIDIA's H100, performs significantly better on Llama and Falcon model inference, and is more energy efficient.

Intel recently publicized the technical features of its next-generation AI accelerator, Gaudi 3. Its current chip already has a benchmark record: after submitting Llama-2-70B and Stable Diffusion XL results to the latest MLPerf report, Intel can claim that Gaudi 2 is the only benchmarked alternative to NVIDIA. In raw performance, however, Gaudi 2 delivers slightly less than half of the H100's performance for Stable Diffusion XL inference and closer to one-third for Llama-2-70B. Intel has since calculated its chip's performance per dollar and claims that Gaudi 2 offers about 25 percent more performance per dollar than NVIDIA's H100 for Stable Diffusion XL inference; for Llama-2-70B, the two are about equal or 21 percent apart, depending on the measurement mode. Regardless, NVIDIA's recent announcement of its Blackwell architecture still casts a long shadow.
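Performance per dollar is simply throughput divided by price, which is how a chip with lower raw throughput can still come out ahead if it is cheap enough. The short Python sketch below shows that arithmetic; the function names are illustrative, and the throughput and price figures are hypothetical placeholders, not Intel's or NVIDIA's published benchmark numbers or prices.

```python
def perf_per_dollar(throughput: float, price: float) -> float:
    """Throughput (e.g. images/s or tokens/s) delivered per dollar of hardware cost."""
    if price <= 0:
        raise ValueError("price must be positive")
    return throughput / price


def relative_perf_per_dollar(
    throughput_a: float, price_a: float, throughput_b: float, price_b: float
) -> float:
    """Ratio of chip A's performance per dollar to chip B's (> 1 means A is better value)."""
    return perf_per_dollar(throughput_a, price_a) / perf_per_dollar(throughput_b, price_b)


# Hypothetical figures: chip A delivers 45% of chip B's throughput at 36% of its price.
ratio = relative_perf_per_dollar(
    throughput_a=45.0, price_a=36.0, throughput_b=100.0, price_b=100.0
)
print(f"Chip A offers {ratio:.2f}x the performance per dollar of chip B")
# prints: Chip A offers 1.25x the performance per dollar of chip B
```

In this made-up case, a chip with under half the competitor's throughput needs to cost only about 36 percent as much to claim a 25 percent performance-per-dollar advantage.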

Gaudi 3 consists of two identical silicon dies joined by a high-bandwidth connection, with each die carrying four matrix multiplication engines and 32 tensor processor cores. Together, these components deliver twice Gaudi 2's AI compute performance for 8-bit floating-point (FP8) computations and four times as much for BFloat16. Because Gaudi 3 is geared toward large language models (LLMs), Intel also reports a 40 percent reduction in GPT-3 training time compared with the H100, and even better results for the smaller Llama 2 models. The inference results were less dramatic: Gaudi 3 delivered 95 to 170 percent of the H100's performance on two versions of Llama 2, an advantage that was much smaller when Gaudi 3 was compared with NVIDIA's H200. Intel nonetheless claims the upper hand in energy efficiency, reporting that Gaudi 3 performs 220 percent better than the H100 on Llama 2 and 230 percent better on Falcon-180B.
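Relative benchmark figures are easy to misread: "170 percent of the H100's performance" means 70 percent faster, "95 percent" means 5 percent slower, and a 40 percent cut in training time corresponds to roughly a 1.67x speedup rather than 1.4x. The hypothetical Python helpers below make those conversions explicit; the function names are illustrative, and the inputs are simply the figures quoted above.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Convert a fractional cut in run time (0.40 = 40% shorter) into a throughput speedup."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def change_vs_baseline(percent_of_baseline: float) -> float:
    """Convert 'X% of the baseline' into a signed percent change (170 -> +70, 95 -> -5)."""
    return percent_of_baseline - 100.0


# Figures as reported by Intel for Gaudi 3 versus the H100.
print(f"40% shorter GPT-3 training -> {speedup_from_time_reduction(0.40):.2f}x speedup")
for pct in (95.0, 170.0):
    print(f"{pct:.0f}% of H100 inference -> {change_vs_baseline(pct):+.0f}% vs H100")
```

The first line prints a 1.67x speedup, and the loop reports -5% and +70% for the low and high ends of Intel's Llama 2 inference range.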