NVIDIA at Computex 2024: NVIDIA NIM unlocks generative AI for millions of developers

NVIDIA NIM provides optimized AI models as containers, enabling millions of developers worldwide to easily deploy generative AI capabilities like copilots and chatbots within their applications in minutes. NIM also allows enterprises to maximize their infrastructure investments.

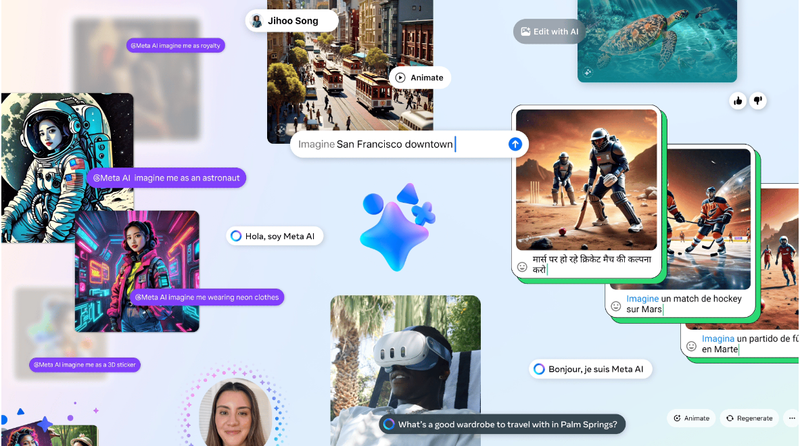

NVIDIA announced the general availability of NVIDIA NIM, a set of inference microservices that package optimized AI models as containers that can be deployed practically anywhere. With general availability, 28 million developers worldwide can download NVIDIA NIM to deploy and integrate generative AI capabilities within their applications in minutes. NIM inference microservices give developers a simple, standardized way to use multiple models to add generative capabilities to their applications across text, images, video, and speech. NIM also helps enterprises get more from their infrastructure investments: by improving inference efficiency, it increases the number of responses generated on the same infrastructure.
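To illustrate what a "standardized way" to use multiple models can look like in practice, here is a minimal Python sketch. It assumes the microservice exposes an OpenAI-style chat-completions HTTP endpoint; the base URL, the model identifiers, and the helper names `build_chat_request` and `send` are assumptions made for illustration and are not taken from the announcement. The only thing that changes between models is the `model` field.

```python
import json
import urllib.request

# Assumption: a NIM container is reachable at this address and accepts
# OpenAI-style chat-completions requests. Adjust for your own deployment.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(model, prompt, base_url=BASE_URL, api_key=None, max_tokens=128):
    """Build (but do not send) a chat-completions request for one model."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted endpoints typically require a bearer token (assumption).
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request, timeout=30):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Illustrative model identifiers (assumed); swapping models changes one string.
    for model in ("meta/llama3-8b-instruct", "mistralai/mixtral-8x22b-instruct-v0.1"):
        req = build_chat_request(model, "Summarize what an inference microservice is.")
        print(req.full_url, json.loads(req.data)["model"])
```

The sketch only constructs the requests; calling `send(req)` would perform the network call against whatever endpoint is actually deployed.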

More than 40 models, including Gemma, Meta Llama 3, Microsoft Phi-3, Mistral Large, Mixtral 8x22B, and Snowflake Arctic, are already available as NIM endpoints. Additionally, Meta Llama 3 NIM microservices are available on Hugging Face, letting users access and run the Llama 3 NIM through Hugging Face Inference Endpoints. Enterprises can use NIM microservices for text, image, video, speech, and digital human generation.

The digital biology-specific NVIDIA BioNeMo™ NIM microservices empower researchers to build novel protein structures and accelerate drug discovery. Healthcare organizations can leverage NIM to integrate generative AI into applications including surgical planning, digital assistants, drug discovery, and clinical trial optimization. Finally, the recently launched NVIDIA ACE NIM microservices allow developers to build and operate interactive digital humans for customer service, telehealth, education, gaming, and entertainment.

NVIDIA's technology partners, industry-leading platform providers, AI tools and MLOps partners, global system integrators, and service delivery partners are all integrating or deploying NVIDIA NIM. Some use it to power generative AI inference and enhance customer experience, while others are integrating the inference microservices into their own offerings to simplify the AI development process even further. NIM-enabled applications can run on-premises, including on NVIDIA-Certified Systems, and on the most popular cloud providers.

NVIDIA NIM microservices are available without cost for developers at ai.nvidia.com, while enterprises and larger organizations can deploy NIM-enabled applications with NVIDIA AI Enterprise. Soon, NVIDIA Developer Program members will have the opportunity to leverage NIM for research and testing purposes.
