Segmind reveals a groundbreaking open-source text-to-image model

Segmind, a platform specializing in open-source serverless APIs for generative models, announced the release of its distillation of SDXL 1.0. SSD-1B is half the size of its base model and 60% faster. It can be fine-tuned to custom specifications and called using Segmind's serverless API.

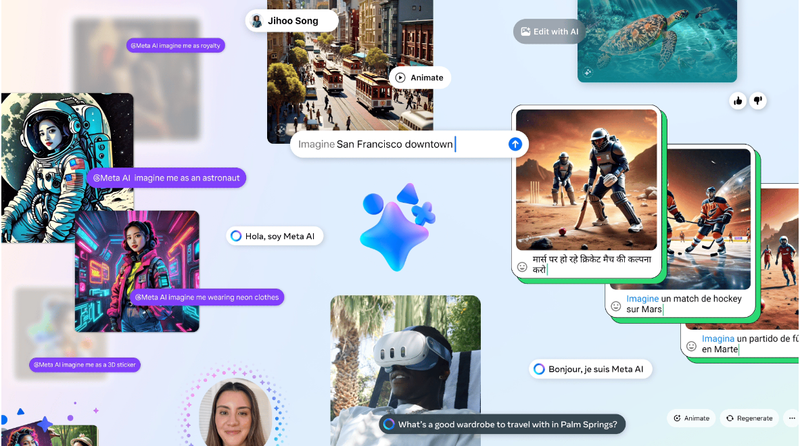

Segmind, a platform specializing in open-source serverless APIs for generative models, has announced the release of its distillation of Stability AI's SDXL 1.0. The model, known as SSD-1B, is the most recent addition to the 'Segmind Distilled Stable Diffusion' series. The previous releases in the series, SD-Small and SD-Tiny, are distillations of Stable Diffusion 1.5 that maintain performance while using 35% and 55% fewer parameters than the base model, respectively. With SSD-1B, Segmind distilled SDXL to half its size (1.3B parameters), which the company says delivers the same performance with a 60% increase in speed. The size reduction was achieved by removing 40 transformer blocks and 1 ResNet block. Segmind's announcement offers a more detailed comparison of SSD-1B's performance and improvements against SDXL.

The knowledge distillation strategy used to train SSD-1B drew on several teacher models: SDXL 1.0, ZavyChromaXL, and JuggernautXL. Combining the strengths of these models is what allows SSD-1B to produce outstanding results. Training data also included Midjourney and GRIT scrape data. The diversity of sources accounts for the varied spectrum of images SSD-1B can generate from text prompts. SSD-1B can be customized further by fine-tuning the model on private data. To this end, Segmind has open-sourced its code and stated that SSD-1B can be used directly with the Hugging Face Diffusers library training scripts.

For all its virtues, the model still has some limitations acknowledged by its creators. SSD-1B struggles with photorealism, especially when depicting human subjects. Other areas for improvement include rendering legible text and preserving fidelity in complex compositions. The diversity of sources used while training SSD-1B is also intended to mitigate some of the prevalent biases in the field of generative AI. However, the developers recognize that even this is far from a definitive solution to the problem.

As expected, one of the easiest ways to get started with SSD-1B is via Segmind's serverless API. The offering starts with 100 free API calls daily. Additional calls are priced according to the computing resources each model requires, taking output size and number of steps into account. SDXL calls, for instance, start at $0.01 each, while Stable Diffusion img2img pricing starts at $0.0048 per call. Subscription and enterprise pricing are available on a per-request basis. Training is also available and priced per GPU minute.
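To make the pricing concrete, here is a rough cost estimate built only from the figures quoted above. It is a sketch under simplifying assumptions: the "starting" price is treated as a flat per-call rate (real prices vary with output size and steps), and the 100 free daily calls are assumed to apply to whichever model is called. The dictionary keys and the function name are hypothetical, and the article gives no price for SSD-1B itself.

```python
from decimal import Decimal

# Free daily quota quoted in the article.
FREE_CALLS_PER_DAY = 100

# "Starting" per-call prices quoted in the article, in USD.
# The keys are hypothetical labels, not official model identifiers.
STARTING_PRICE_USD = {
    "sdxl": Decimal("0.01"),
    "sd-img2img": Decimal("0.0048"),
}


def estimate_daily_cost(calls: int, model: str,
                        free_calls: int = FREE_CALLS_PER_DAY) -> Decimal:
    """Estimate one day's cost, assuming a flat per-call starting price."""
    if calls < 0:
        raise ValueError("calls must be non-negative")
    # Only calls beyond the free quota are billed.
    billable = max(0, calls - free_calls)
    return billable * STARTING_PRICE_USD[model]


print(estimate_daily_cost(1000, "sdxl"))        # 900 billable calls at $0.01
print(estimate_daily_cost(1100, "sd-img2img"))  # 1000 billable calls at $0.0048
```

Because the quoted figures are lower bounds, the result is best read as a floor on the daily bill rather than a forecast.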