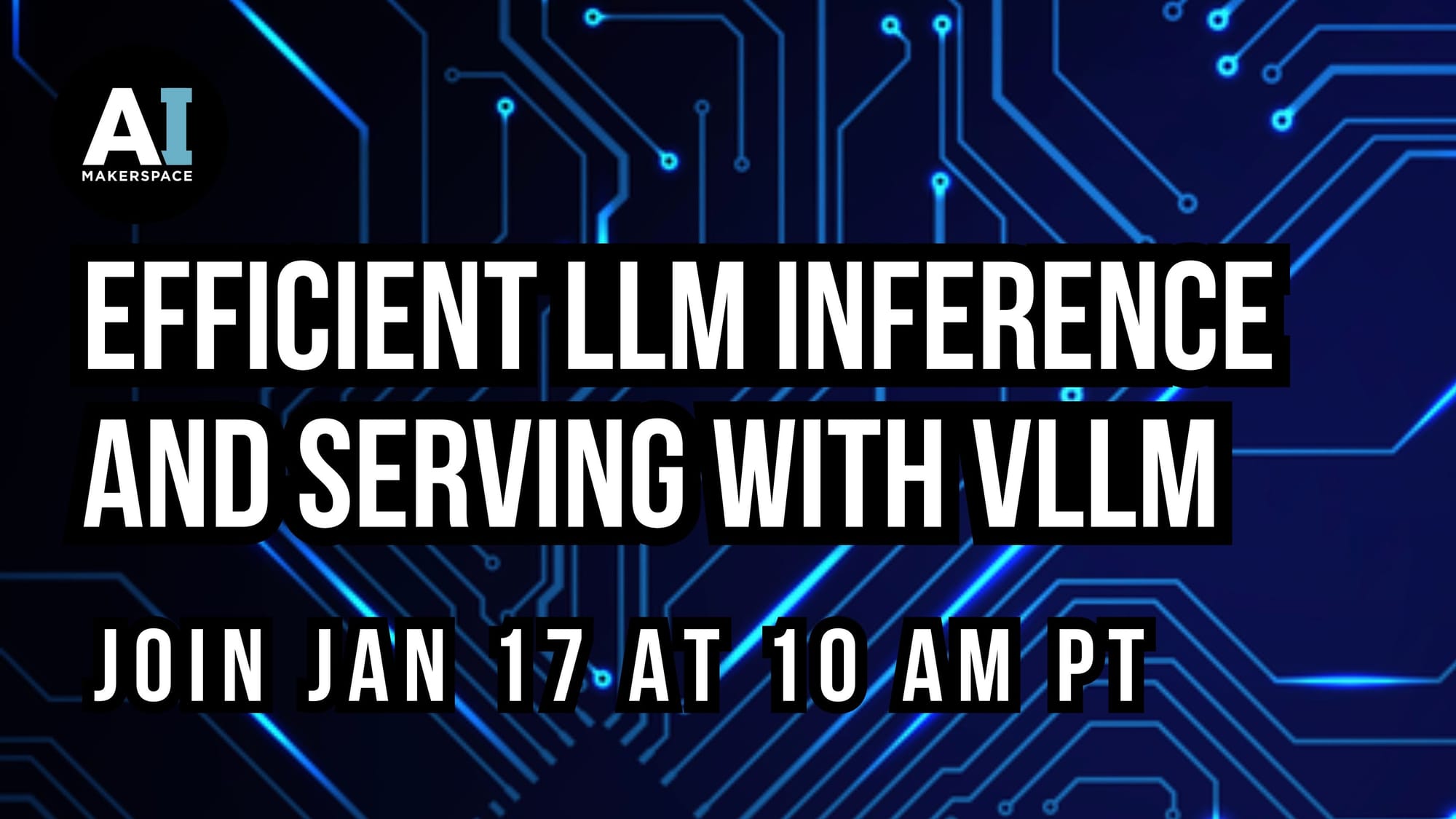

Efficient LLM Inference and Serving with vLLM

Come and learn the basics of serving and inference of Large Language Models and how vLLM relieves memory bottlenecks that limit serving performance at scale!

LLMs are Large. This makes them hard to load and fine-tune, and thus hard to serve and perform inference on. We’ve talked recently about quantization and LoRA/QLoRA, techniques that help us to load and fine-tune models, and here we’ll break down vLLM, an up-and-coming open-source LLM inference and serving engine!

In this event, we’ll kick off by breaking down the basic intuition of inference and serving before diving into the details of vLLM, the library that promises easy, fast, and cheap LLM serving.

Then, we’ll dig into the “secret sauce” of vLLM, the PagedAttention algorithm, including its inspiration, intuition, and the details of how it helps to efficiently manage the key and value tensors (the “KV cache”) during scaled dot-product self-attention calculations as input sequences flow through transformer attention blocks.
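For a taste of the intuition ahead of the session, here is a minimal toy sketch of the paging idea: KV-cache memory is split into fixed-size blocks, and each sequence keeps a block table that maps its token positions to whichever physical blocks happen to be free. This is not vLLM's code or API; `ToyPagedKVCache`, `BLOCK_SIZE`, and every method name below are hypothetical, and the sketch tracks only block bookkeeping, not the actual key and value tensors.

```python
from dataclasses import dataclass, field

# Hypothetical block size (tokens per KV block), kept tiny for illustration.
BLOCK_SIZE = 4


@dataclass
class ToyPagedKVCache:
    """Toy bookkeeping for a paged KV cache (illustrative only, not vLLM)."""

    num_blocks: int
    free_blocks: list = field(default_factory=list)
    block_tables: dict = field(default_factory=dict)  # seq_id -> physical block ids
    seq_lens: dict = field(default_factory=dict)      # seq_id -> tokens stored

    def __post_init__(self):
        # Every physical block starts out free.
        self.free_blocks = list(range(self.num_blocks))

    def append_token(self, seq_id):
        """Reserve a slot for one new token; return (physical_block, offset)."""
        n = self.seq_lens.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if n % BLOCK_SIZE == 0:
            # The last block is full (or the sequence is new): grab any free
            # block. Blocks do not need to be contiguous in memory.
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = n + 1
        return table[n // BLOCK_SIZE], n % BLOCK_SIZE

    def free(self, seq_id):
        """Return all of a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = ToyPagedKVCache(num_blocks=3)
for _ in range(5):
    cache.append_token("request-a")
# Five tokens with BLOCK_SIZE = 4 occupy two blocks, so at most
# BLOCK_SIZE - 1 slots are wasted per sequence.
print(cache.block_tables["request-a"], cache.free_blocks)
```

Because memory is handed out one block at a time as a sequence grows, nothing has to be reserved up front for the maximum possible output length, which is the kind of waste this style of allocation is meant to avoid.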

After we go high-level, we’ll cover the system components of vLLM that allow it to process requests and generate outputs, and of course, we'll dive into a live demo with code! Finally, we’ll discuss what we expect from vLLM next and where we think it should fit into your AI Engineering workflow in 2024!

Join us live to learn:

- The basics of serving and inference of Large Language Models

- How vLLM relieves memory bottlenecks that limit serving performance at scale

- The PagedAttention algorithm, and how it compares to popular Hugging Face libraries

Speakers:

- Dr. Greg Loughnane is the Founder & CEO of AI Makerspace, where he is an instructor for their LLM Ops: LLMs in Production courses. Since 2021 he has built and led industry-leading Machine Learning & AI boot camp programs. Previously, he worked as an AI product manager, a university professor teaching AI, an AI consultant and startup advisor, and an ML researcher. He loves trail running and is based in Dayton, Ohio.

- Chris Alexiuk is the Co-Founder & CTO at AI Makerspace, where he serves as an instructor for their LLM Ops: LLMs in Production courses. A former Data Scientist, he also works as the Founding Machine Learning Engineer at Ox. As an experienced online instructor, curriculum developer, and YouTube creator, he’s always learning, building, shipping, and sharing his work! He loves Dungeons & Dragons and is based in Toronto, Canada.