There is no better time to reflect on a year gone by than when the holiday frenzy starts to wear off. Now that 2023 has come to a close, we have gained the perspective necessary to figure out just how momentous this past year was for artificial intelligence. Although 2023 may have lacked the viral releases that characterized 2022, it more than made up for them by being chock-full of advances, refinements, debates, and applications showcasing the realm of possibilities that was blown wide open by the groundbreaking achievements of previous years.

Thus, we've collected a selection of the stories we covered during 2023 that we believe will serve as a launching pad for future innovations. The list is by no means exhaustive, but hopefully, it is representative of the diversity of events that took the world by storm this past year.

Large Language Models: text-to-text generation and text-based applications

- GPT-4: Perhaps one of the year's most noteworthy events was OpenAI's follow-up to its 2022 release of ChatGPT with the launch of GPT-4, among several other impressive advances. With its March 2023 announcement, GPT-4 helped set the tone of the conversation surrounding LLMs. Toward the end of the year, OpenAI held DevDay, the first edition of its developer conference, where it announced the availability of GPT-4 Turbo, higher rate limits, and the open-source release of the latest version of the Whisper engine and the Consistency Decoder.

- Claude 2.1: It is no secret that Anthropic is looking to beat OpenAI at its own game. After all, the year's end left us with some juicy gossip: Anthropic optimistically updated its annualized revenue projections for 2024, leaving room for speculation on whether the updated projections had something to do with Sam Altman's short-lived ouster from OpenAI. If the rumors are true, and OpenAI customers did turn to Anthropic after Altman's firing, looking for either a backup or a reliable alternative, this would be a strong indicator that the public perceives Claude 2.1 as a worthy competitor to GPT-4.

Finances and speculation aside, Anthropic is pushing forward at a modest but steady pace: Claude 2.1 still boasts an industry-leading context window, the web-based experience at claude.ai recently gained international availability, and the company remains committed to delivering products that prioritize usefulness and safety over novelty.

- Google Bard/PaLM 2: May 2023 witnessed the release of Google's PaLM 2, a model trained on multilingual text, scientific literature including mathematical expressions, and publicly available source code. PaLM 2 was one of the first breakthroughs that followed the April 2023 merging of the DeepMind and Google Brain teams into Google DeepMind. Bard was powered by LaMDA at its inception, and until the release of the natively multimodal Gemini, PaLM 2 powered "the most capable Bard" of 2023.

PaLM 2 boosted Bard's multilanguage support and powered the assistant's ability to integrate within the Google ecosystem, allowing it to perform simple actions such as pulling dates from Calendar, finding relevant YouTube videos, and retrieving files from Drive.

- Llama 2: Meta's publicly available family of models can easily be seen as one of the catalysts driving the growth of the open-source LLM ecosystem during 2023. A model search at Hugging Face for "llama" currently returns over 15,000 results. The first iteration of the LLaMA family was released in February, followed in July by the Llama 2 family, which was released in partnership with Microsoft and grew to include the fine-tuned Llama 2-Chat and Code Llama.

- Mistral-7B and Mixtral: French startup Mistral AI emerged with a mission to develop "open-weight models that are on par with proprietary solutions." The startup released Mistral-7B in September, and it immediately became the best 7B-parameter open pre-trained model. Then, in December, in its characteristically informal fashion, Mistral released Mixtral 8x7B, a mixture-of-experts model matching or outperforming Llama 2 70B and GPT-3.5 in most benchmarks and tasks while being only a fraction of their size.

- Other noteworthy developments include:

- The trajectory from Bing Chat to Microsoft Copilot, powered by OpenAI's models and featuring plugins that extend its functionalities, such as the Suno plugin that enables Microsoft Copilot to create songs complete with lyrics, instrumentals, and voice. Copilot is now available in some Windows 11 installations and as an Android and iOS mobile application.

- The United Arab Emirates' Technology Innovation Institute released Falcon-180B, the most powerful (and sizeable) open-source model to date.

- After releasing the unremarkable Titan, Amazon is rumored to be training a GPT-4 competitor, the 2T-parameter model Olympus.

- Elon Musk supposedly attempted to take on OpenAI by founding xAI, the company behind Grok, a witty and sarcastic chatbot meant to answer 'spicy' questions, currently available via early access for X Premium+ subscribers.

Multimodal foundational models: text-to-image and video generation, image-to-text

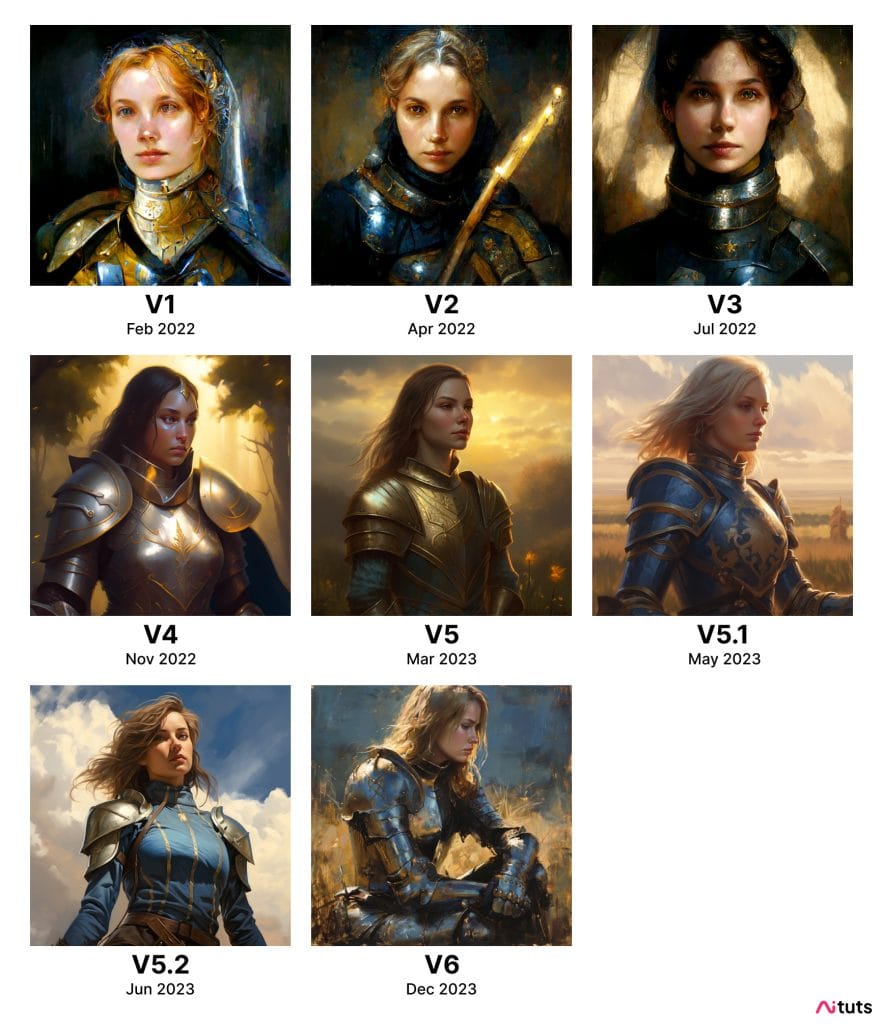

Multimodality proved to be the next important frontier in generative AI. Image generation in particular saw several impressive advancements compared to previous years, as exemplified by Midjourney's progress over less than two years.

- OpenAI held fast to its dominance with the release of DALL-E 3. This is the first iteration to integrate with ChatGPT and to address safety, privacy, and copyright concerns with measures such as a form that allows artists to opt their work out of training.

- Google and Apple are trying to get ahead by developing natively multimodal LLMs, which differ from the standard construction that pieces an LLM together with image or audio encoders, depending on the goal of the model. Rather than training independent components separately, natively multimodal models can be trained on several data types in a single round, cutting costs and training time. Google publicly announced the release of its new foundational model, Gemini, and its plans to power both Bard and a Pixel device using different versions of the model. Text-based prompting for Bard with Gemini Pro became available for English-speaking users this December, but both Bard with Gemini Pro and Bard Advanced with Gemini Ultra will likely showcase the full extent of their capabilities, including multilingual support, during the first half of 2024.

On the other hand, Apple and Columbia University's release of their multimodal Ferret flew under the radar until it was rediscovered a few months after its launch.

- Stability AI released Stable Diffusion XL and SDXL Turbo, two open text-to-image generation models. Canva is harnessing Stability AI's image-generation technology to provide its customers with media-generation tools.

- Generative AI will likely keep pushing the boundaries of video and audio generation. Stable Video Diffusion made the news as Stability AI's open-source foundational model for video generation, as did Runway, the startup focused on empowering artists and creators with its content editing and generation tools, including Gen-2, its latest text-to-video generation model. Stability AI rounded out its multimedia product offerings with Stable Audio, while ElevenLabs and Respeecher delved into AI voice generation and responsible voice cloning, respectively.

- Image and video editing software and standalone tools are becoming increasingly common. Runway's AI Magic Tools are a great example of this, as are Adobe Firefly, Skylum's Luminar Neo, and Capsule. An interesting turn for AI-powered media editing is its application in advertising, with Amazon and Google launching tools for on-brand advertising media creation.

AI-assisted research and development

Google DeepMind's GNoME and AlphaFold made the news for their breakthrough predictions of the structure of crystals and biological molecules, respectively. Both tools were developed to tackle problems that have traditionally been approached through cumbersome and expensive trial and error. Together with Microsoft's EvoDiff, these trailblazing models have revealed a brand new field of application for AI: models and neural networks excel at finding solutions to problems that would otherwise require a costly and time-consuming trial-and-error approach.

The idea that AI can unlock new insights and predictions or even provide solutions for some of these pesky problems is gaining traction, with several startups getting into the business of optimizing fields such as materials science or engineering. Other startups, research centers, and universities place their bets on accelerating medical diagnostics and imaging.

Partnerships

- Microsoft invested heavily in OpenAI, with both companies starting their year by announcing an extended partnership through a multi-year, multibillion-dollar investment. This arrangement could trigger a merger investigation under EU law due to possible competition issues. UK and US antitrust watchdogs have expressed similar concerns.

- Anthropic scrambled to level up against OpenAI by partnering with Amazon and earning financial backing from Google. The startup recently recalculated its estimated 2024 annual revenue and is reportedly in talks to close a $750 million deal with Menlo Ventures.

- NVIDIA and Microsoft announced a collaboration to provide a platform for enterprises worldwide to build, deploy, and manage AI applications by integrating the NVIDIA AI Enterprise suite into the Azure Machine Learning platform.

- Meta, Scaleway, and Hugging Face teamed up to launch the AI Startup Program at STATION F in Paris. The program will support five startups in the acceleration phase so they can develop projects based on open foundation models or incorporate such models into their current product offerings.

- Anyscale and Meta teamed up to accelerate the development of the Llama 2 ecosystem. One of the main goals of the partnership is to position access to, and fine-tuning of, the Llama family of models via Anyscale Endpoints as an attractive, cost-efficient source of LLM inference for several applications.

- Instock Inc. is a startup developing automated storage and retrieval systems (ASRS) composed of a modular racking system and autonomous mobile robots that drive inside the racking to collect bins and transport them to ground-level working stations. The startup has received a $3.2 million investment from the Amazon Industrial Fund.

Debates and regulations

One of the biggest AI debates of 2023 concerned compliance with privacy and data protection policies and the lack of proactive regulation in the industry. The European Union worked hard to become the top player and most prominent candidate for the world's AI legislation enforcer. Following copyright concerns and corresponding lawsuits, OpenAI and Anthropic have introduced expanded legal protection for customers facing copyright infringement claims. An unresolved debate that will likely carry over to 2024 is the potential ineligibility of AI-generated media for protection under copyright law, especially after the US Copyright Office has ruled on more than one occasion that AI-generated content cannot be copyrighted.
